
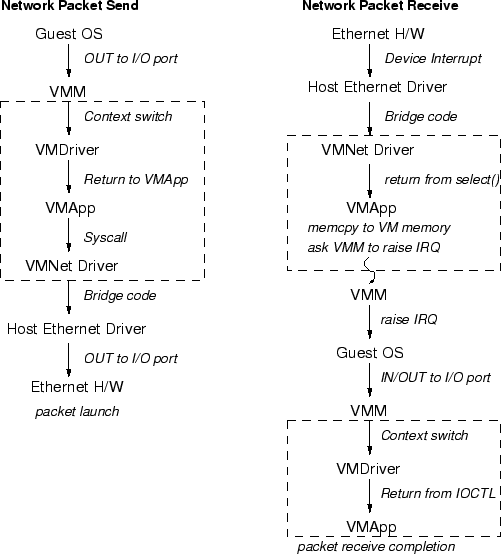

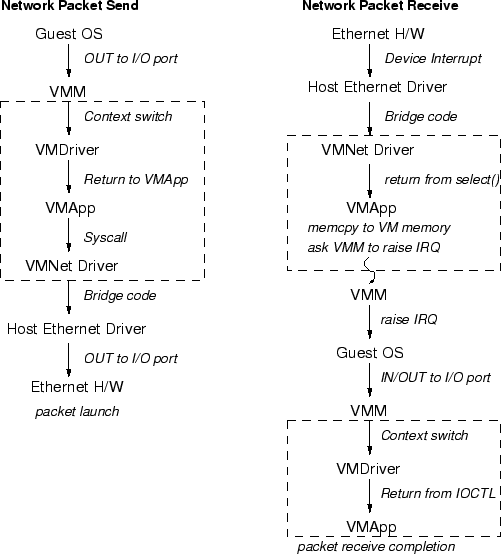

Figure 4 depicts the components involved in sending and receiving packets through the hosted virtual NIC emulation described above. The guest operating system runs the device driver for a Lance controller. The driver initiates a packet transmission by reading and writing a sequence of virtual I/O ports, each of which switches back to the VMApp to emulate the Lance port accesses. On the final OUT instruction of the sequence, the Lance emulation issues a normal write() to the VMNet driver, which passes the packet onto the network through a host NIC. The VMApp then switches back to the VMM, which raises a virtual IRQ to notify the guest device driver that the packet was sent.
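The transmit path can be sketched as a toy Python model. Everything in it is an illustrative assumption rather than VMware's code: the class `VirtualLance`, the port offsets `RAP_PORT`/`RDP_PORT`, and the `TX_DEMAND` bit are hypothetical stand-ins, and an `os.pipe()` plays the role of the VMNet device. It captures only the shape of the path: the guest selects a register, the final OUT triggers a `write()` to the host side, and a virtual IRQ is raised afterwards.

```python
import os

# Hypothetical register-access ports for a Lance-like NIC (assumed values,
# not taken from the source). RAP selects a register; RDP reads/writes it.
RAP_PORT = 0x12
RDP_PORT = 0x10
TX_DEMAND = 0x0008  # assumed "transmit demand" bit in register 0


class VirtualLance:
    """Toy model of the hosted transmit path: each guest OUT is emulated in
    user space, and the final OUT hands the packet to the host via write()."""

    def __init__(self, vmnet_fd):
        self.vmnet_fd = vmnet_fd  # stands in for the open VMNet device
        self.rap = 0              # currently selected register
        self.csr = {}             # emulated register file
        self.tx_queue = []        # packets the guest has placed in its ring
        self.irq_pending = False  # set when the "VMM" raises a virtual IRQ

    def out(self, port, value):
        # Emulate one trapped guest OUT instruction.
        if port == RAP_PORT:
            self.rap = value
        elif port == RDP_PORT:
            self.csr[self.rap] = value
            # The final OUT of the sequence: a transmit demand in register 0.
            if self.rap == 0 and value & TX_DEMAND:
                self._transmit()

    def _transmit(self):
        for pkt in self.tx_queue:
            os.write(self.vmnet_fd, pkt)  # the "normal write()" to VMNet
        self.tx_queue.clear()
        # Control returns to the VMM, which raises a virtual IRQ.
        self.irq_pending = True
```

A guest "send" is then two OUTs, `nic.out(RAP_PORT, 0)` followed by `nic.out(RDP_PORT, TX_DEMAND)`, after which the frame is readable from the other end of the pipe and `irq_pending` is set.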

Packet reception proceeds in reverse. The bridged host NIC delivers the packet to the VMNet driver. The VMApp periodically calls select() on its connection to the VMNet driver; when it discovers an incoming packet, it read()s the packet and asks the VMM to raise a virtual IRQ. The VMM posts the virtual IRQ, and the guest's Lance driver issues a sequence of I/O accesses to acknowledge the receipt to the hardware.
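The VMApp's polling step on the receive side can be sketched in the same hedged way. `poll_vmnet` and its `raise_virtual_irq` callback are hypothetical names, and a zero-timeout `select.select()` stands in for the periodic poll. The sketch assumes each `read()` returns exactly one packet, as a packet-oriented device would; an ordinary pipe gives no such guarantee, so the check below writes a single frame. The 1518-byte read size is likewise an assumed maximum Ethernet frame length.

```python
import os
import select


def poll_vmnet(vmnet_fd, raise_virtual_irq, max_len=1518):
    """One polling pass of a hypothetical VMApp receive loop.

    Drains every packet currently readable on vmnet_fd, then asks the
    VMM (modelled by the raise_virtual_irq callback) to post one IRQ.
    """
    received = []
    while True:
        # Zero timeout: check readiness without blocking the VMApp.
        ready, _, _ = select.select([vmnet_fd], [], [], 0)
        if not ready:
            break
        pkt = os.read(vmnet_fd, max_len)
        if not pkt:  # EOF: the host side closed the connection
            break
        received.append(pkt)
    if received:
        raise_virtual_irq()
    return received
```

Raising a single IRQ per pass, rather than one per packet, is a design choice of this sketch; the text above only states that an IRQ is requested when incoming packets are found.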

The boxed regions of the figure indicate the extra work introduced by virtualizing the port accesses that actually send and receive packets. Further work goes into handling the intermediate I/O accesses and the privileged instructions associated with delivering a virtual IRQ. Of the intermediate accesses, those to the virtual Lance's address register are handled entirely within the VMM, whereas every access to the data register switches back to handling code in the VMApp.
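To make this cost split concrete, the sketch below tallies where each trapped access to the virtual Lance is serviced, following the rule just stated: address-register accesses stay in the VMM, while data-register accesses cost a switch to the VMApp. The register labels `"RAP"`/`"RDP"`, the tuple format, and the function name `classify_accesses` are assumptions made for illustration; the function counts events and says nothing about their actual cycle cost.

```python
from collections import Counter

ADDRESS_REG = "RAP"  # assumed label for the virtual Lance address register
DATA_REG = "RDP"     # assumed label for the virtual Lance data register


def classify_accesses(accesses):
    """Count where each trapped port access is serviced.

    accesses: iterable of (register, direction) tuples, e.g. ("RAP", "out").
    Returns a Counter with keys "vmm" and "vmapp_switch".
    """
    cost = Counter()
    for reg, _direction in accesses:
        if reg == ADDRESS_REG:
            cost["vmm"] += 1           # handled entirely inside the VMM
        elif reg == DATA_REG:
            cost["vmapp_switch"] += 1  # needs a world switch to the VMApp
        else:
            raise ValueError(f"unknown register {reg!r}")
    return cost
```

For a hypothetical sequence that selects a register and writes it twice, `classify_accesses([("RAP", "out"), ("RDP", "out"), ("RAP", "out"), ("RDP", "in")])` reports two accesses absorbed by the VMM and two that require a VMApp switch.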

This extra work consumes CPU cycles and increases CPU load. The next section studies its effect on both I/O performance and CPU utilization, breaks down the overheads along the boxed paths, and describes overall time usage in the VMM and VMApp during network activity.