
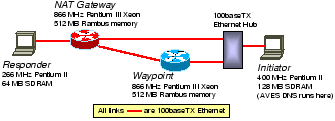

To measure the performance of our system, we set up a small 100 Mbps Ethernet testbed, shown in Figure 7. For data path performance, we instrumented Linux kernel 2.2.14 and our daemon software with the Pentium CPU cycle counter to measure the processing time of a packet at the waypoint and at the NAT gateway (in both the in-bound and out-bound directions). We measured three quantities: (1) the total packet processing time, from the moment the Ethernet device driver called netif_rx() after receiving a packet until the moment dev_queue_xmit() was called to pass the processed packet to the device driver for transmission; (2) the AVES daemon processing time, from the moment a packet was received on a daemon socket until just before the processed packet was sent out on a socket; and (3) the time spent computing the MD5 authentication checksum in the AVES daemon. We sent UDP packets of varying sizes between the initiator and the responder and recorded the processing times. All of the processing times scale linearly with packet size. Figure 8 shows partial results, averaged over 10,000 packets, for the smallest (36 bytes) and largest (1464 bytes) packet sizes we tested.
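As a rough, user-level analogue of quantity (3), the sketch below times an MD5 digest over payloads of the two sizes reported in Figure 8, averaged over 10,000 runs. It is only an illustration under stated assumptions: Python's `time.perf_counter_ns()` wall-clock timer stands in for the Pentium cycle counter, a random buffer stands in for a packet, and a plain (unkeyed) MD5 digest stands in for the AVES authentication checksum, whose exact construction is not described here. The helper name `mean_md5_time_ns` is hypothetical.

```python
import hashlib
import os
import time


def mean_md5_time_ns(size, iterations=10_000):
    """Average wall-clock time (ns) to MD5 a `size`-byte payload.

    Assumption: an unkeyed MD5 digest over random bytes approximates the
    AVES authentication checksum; a wall-clock timer replaces cycle counts.
    """
    payload = os.urandom(size)  # stand-in for a packet payload
    start = time.perf_counter_ns()
    for _ in range(iterations):
        hashlib.md5(payload).digest()
    elapsed = time.perf_counter_ns() - start
    return elapsed / iterations


if __name__ == "__main__":
    for size in (36, 1464):  # smallest and largest sizes shown in Figure 8
        print(f"{size:5d} bytes: {mean_md5_time_ns(size):8.1f} ns per packet")
```

Sweeping a wider range of sizes with this helper should show per-packet cost growing roughly linearly with size, in line with the scaling observed above, although interpreter overhead inflates the fixed per-call cost compared with the C daemon.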

Several points are worth noting. First, implementing our software at user level adds very significant overhead because of memory copies and context switches. We expect a kernel-level implementation of our software to have a total processing time very close to the AVES daemon processing time. Second, almost all of the AVES daemon processing time is spent computing the MD5 authentication checksum (note that out-bound packets at the NAT gateway need no authentication). This overhead can be reduced by authenticating only the packet headers, at the cost of weaker security. Finally, based on these measurements, our software can theoretically sustain a throughput of 233 Mbps with 1464-byte packets in our testbed.
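The 233 Mbps figure follows from dividing the packet size in bits by the per-packet processing time. Because the per-packet time itself is not restated in the text, the sketch below works backwards from the reported bound. The function names are illustrative, and the model assumes packets are processed one at a time with no overlap.

```python
def max_throughput_mbps(packet_bytes, per_packet_us):
    """Throughput bound (Mbps) if each packet costs `per_packet_us` of
    processing, one packet at a time. Bits per microsecond == Mbps."""
    return packet_bytes * 8 / per_packet_us


def implied_per_packet_us(packet_bytes, throughput_mbps):
    """Inverse: per-packet processing time implied by a throughput bound."""
    return packet_bytes * 8 / throughput_mbps


t = implied_per_packet_us(1464, 233)
print(round(t, 1))                          # 50.3 (microseconds)
print(round(max_throughput_mbps(1464, t)))  # 233
```

In other words, the 233 Mbps bound corresponds to roughly 50 microseconds of processing per 1464-byte packet under this simple model.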

We also conducted end-to-end throughput experiments. When we sent 1464-byte packets from the initiator to the responder, throughput was limited only by the link capacity: the system achieved 96 Mbps with UDP and 80 Mbps with TCP. With 48-byte packets, however, our software achieved only 41 Mbps with TCP. This is actually higher than the maximum of 19 Mbps calculated from the processing-time measurements, because kernel overheads are amortized over a sequence of packets. We expect UDP throughput to be slightly better; however, because of a device driver bug affecting the Intel EtherExpress Pro 100 network interface card, we were unable to send 48-byte UDP packets faster than 10 Mbps without causing the interface card to shut down.
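One way to see why large packets were link-limited while small packets were not is to take the smaller of the link rate and the processing bound. In the sketch below, the per-packet times are back-computed from the 233 Mbps and 19 Mbps figures above rather than read from Figure 8, and Ethernet/IP/UDP header overhead and any batching are ignored. `bottleneck` is a hypothetical helper.

```python
LINK_MBPS = 100.0  # 100 Mbps Ethernet testbed


def bottleneck(packet_bytes, per_packet_us, link_mbps=LINK_MBPS):
    """Return (rate_mbps, limiting_factor) under a one-packet-at-a-time
    model that ignores header overhead and batching (assumption)."""
    processing_mbps = packet_bytes * 8 / per_packet_us  # bits/us == Mbps
    if link_mbps <= processing_mbps:
        return link_mbps, "link"
    return processing_mbps, "processing"


# Per-packet times implied by the bounds quoted in the text (assumption).
large_us = 1464 * 8 / 233  # about 50.3 us
small_us = 48 * 8 / 19     # about 20.2 us

print(bottleneck(1464, large_us))  # (100.0, 'link')
print(bottleneck(48, small_us))    # processing-limited, about 19 Mbps
```

Because this model charges every packet its full processing time, it is conservative: the 41 Mbps measured with TCP exceeds its 19 Mbps prediction, consistent with the amortization of kernel overheads described above.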

Next, we measured the performance of the control path. Typically, the time required to resolve an AVES DNS name is dominated by the network delays of the DNS and AVES control messages. To factor out these network delays, we ran both the AVES-aware DNS server daemon and the waypoint daemon on the initiator machine. A program on the initiator drove the system by repeatedly calling gethostbyname() for the responder's name. We then measured the number of CPU cycles consumed by each control path component, including socket reads and writes, averaged over 20 requests.
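A driver of the kind described above can be approximated in a few lines. This sketch uses Python's `socket.gethostbyname` and a wall-clock timer rather than CPU cycle counts, and it measures only the end-to-end lookup time seen by the caller, not the per-component breakdown, which required instrumenting the daemons themselves. The function name and the `resolve` parameter (which allows a stub resolver to be swapped in) are hypothetical.

```python
import socket
import time


def mean_lookup_ns(hostname, requests=20, resolve=socket.gethostbyname):
    """Call `resolve(hostname)` `requests` times back to back and return
    the mean wall-clock latency per call in nanoseconds."""
    total_ns = 0
    for _ in range(requests):
        start = time.perf_counter_ns()
        resolve(hostname)  # socket.gethostbyname raises socket.gaierror on failure
        total_ns += time.perf_counter_ns() - start
    return total_ns / requests
```

A call such as `mean_lookup_ns("responder.example")`, where `responder.example` is a placeholder for the responder's AVES DNS name, averages over 20 lookups as in the experiment. Repeated lookups exercise the AVES-aware DNS server only if no local cache answers them first.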

We found that a gethostbyname() call took on average 357,000 cycles to complete. This total can be broken down further as follows. First, the AVES-aware DNS server daemon took 142,000 cycles to process the DNS query and send the SETUP message to the waypoint daemon. Second, the waypoint daemon took 71,000 cycles to process the SETUP message and send back the ACCEPT message. Finally, the AVES-aware DNS server daemon spent another 17,900 cycles processing the ACCEPT message and sending out the final DNS reply. Computing the MD5 authentication checksum of an AVES control message took 3,100 cycles. DNS query processing is therefore the bottleneck: with a 400 MHz AVES-aware DNS server, at most about 2,800 sessions can be set up per second (400,000,000 cycles/s ÷ 142,000 cycles per query). Of course, if delayed binding is used, the protocol imposes a much stricter limit.
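The session-setup limit is simple arithmetic on the cycle counts above. The sketch below reproduces the roughly 2,800 sessions/s figure from the 142,000-cycle query step and also shows how the bound tightens if the 17,900 cycles the same daemon spends on the ACCEPT message are charged to each session. The constant names are illustrative, and the waypoint line assumes, hypothetically, that the waypoint also runs at 400 MHz.

```python
CPU_HZ = 400_000_000  # 400 MHz AVES-aware DNS server

# Per-session cycle costs measured above.
DNS_QUERY_CYCLES = 142_000   # DNS query in, SETUP out (DNS server daemon)
WAYPOINT_CYCLES = 71_000     # SETUP in, ACCEPT out (waypoint daemon)
DNS_ACCEPT_CYCLES = 17_900   # ACCEPT in, DNS reply out (DNS server daemon)
TOTAL_CYCLES = 357_000       # whole gethostbyname() call


def sessions_per_second(cycles_per_session, cpu_hz=CPU_HZ):
    """Maximum whole session setups per second at this per-session cost."""
    return cpu_hz // cycles_per_session


print(sessions_per_second(DNS_QUERY_CYCLES))                      # 2816
print(sessions_per_second(DNS_QUERY_CYCLES + DNS_ACCEPT_CYCLES))  # 2501
print(sessions_per_second(WAYPOINT_CYCLES))                       # 5633 (assumes 400 MHz waypoint)

# Cycles of the total call not attributed to the three daemon stages.
print(TOTAL_CYCLES - (DNS_QUERY_CYCLES + WAYPOINT_CYCLES + DNS_ACCEPT_CYCLES))  # 126100
```

Since the DNS server daemon handles both the query and the ACCEPT message for each session, the second figure (about 2,500 sessions/s) is the more conservative estimate; either way, the DNS server saturates before a waypoint of the same clock speed would.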