The goal of a clock scheduling algorithm is to try to predict or recognize a CPU usage pattern and then set the CPU clock speed sufficiently high to meet the (predicted) needs of that application. Although patterns in the utilization are more evident when using a 100ms sliding average for utilization, we found that averaging over such a long period of time caused us to miss our ``deadline''. In other words, the MPEG audio and video became unsynchronized and some others applications such as the speech synthesis engine had noticeable delays. This occurs because it takes longer for the system to realize it is becoming busy.

This delay is the reason that the studies of Govil et al. [6] and Weiser [7] argued that clock adjustment should examine a 10-50ms interval when predicting future speed settings. However, as Figure 3 shows, it is difficult to find any discernible pattern at the smaller time-scales. Like Govil et al., we also allowed speed setting to occur at any interval; Weiser et al. did not model having the scheduler interrupted while an application was running, but rather deferred clock speed changes to occur only when a process yielded or began executing in a quanta.

There are a number of possible speed-setting heuristics we could

examine; since we were focusing on implementable policies, we

primarily used the policies explored by

Pering et al. [5]. We also explored other

alternatives. One simple policy would determine the number of ``busy''

instructions during the previous ![]() 10ms scheduling quanta and

predict that activity in the next quanta would have the same

percentage of busy cycles. The clock speed would then be set to insure

enough busy cycles.

10ms scheduling quanta and

predict that activity in the next quanta would have the same

percentage of busy cycles. The clock speed would then be set to insure

enough busy cycles.

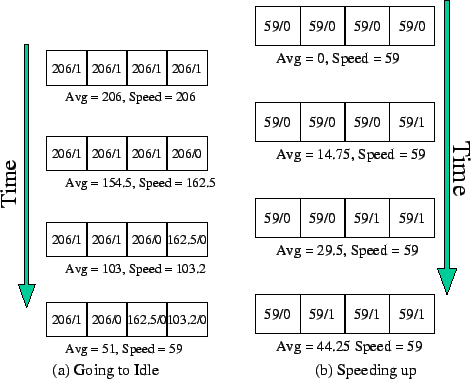

This policy sounds simple, but it results in exceptionally poor responsiveness, as illustrated in Figure 5. Figure 5(a) shows the speed changes that would occur when the application is moving from period of high CPU utilization to one of low utilization; the speed changes to 59MHz relatively quickly because we are adding in a large number of idle cycles each quanta. By comparison, when the application moves from an idle period to a fully utilized period, the simple speed setting policy makes very slow changes to the processor utilization and thus the processor speed increases very slowly. This occurs because the total number of non-idle instructions across the four scheduling intervals grows very slowly.

|