|

| |||||||||||||||||||||||||||||||||||||||||||||||||||

| ||||||||||||||||||||||||||||||||||||||||||||||||||||

|

NSDI '05 Paper

[NSDI '05 Technical Program]

Botz-4-Sale: Surviving Organized DDoS Attacks That Mimic Flash Crowds

Abstract- Recent denial of service attacks are mounted by professionals using Botnets of tens of thousands of compromised machines. To circumvent detection, attackers are increasingly moving away from bandwidth floods to attacks that mimic the Web browsing behavior of a large number of clients, and target expensive higher-layer resources such as CPU, database and disk bandwidth. The resulting attacks are hard to defend against using standard techniques, as the malicious requests differ from the legitimate ones in intent but not in content. We present the design and implementation of Kill-Bots, a kernel extension to protect Web servers against DDoS attacks that masquerade as flash crowds. Kill-Bots provides authentication using graphical tests but is different from other systems that use graphical tests. First, Kill-Bots uses an intermediate stage to identify the IP addresses that ignore the test, and persistently bombard the server with requests despite repeated failures at solving the tests. These machines are bots because their intent is to congest the server. Once these machines are identified, Kill-Bots blocks their requests, turns the graphical tests off, and allows access to legitimate users who are unable or unwilling to solve graphical tests. Second, Kill-Bots sends a test and checks the client's answer without allowing unauthenticated clients access to sockets, TCBs, and worker processes. Thus, it protects the authentication mechanism from being DDoSed. Third, Kill-Bots combines authentication with admission control. As a result, it improves performance, regardless of whether the server overload is caused by DDoS or a true Flash Crowd.

Denial of service attacks are

increasingly mounted by professionals in exchange for money or

material benefits (35). Botnets of thousands of

compromised machines are rented by the hour on IRC and used to DDoS

online businesses to extort money or obtain commercial

advantages (26,17,45). The DDoS business is

thriving; increasingly aggressive worms can infect up to 30,000 new

machines per day. These zombies/bots are then used for DDoS and other

attacks (17,43).

Recently, a Massachusetts businessman paid members of the computer

underground to launch organized, crippling DDoS attacks against three

of his competitors (35). The attackers used Botnets of

more than 10,000 machines. When the simple SYN flood failed, they

launched an HTTP flood, pulling large image files from the victim

server in overwhelming numbers. At its peak, the onslaught allegedly

kept the victim company offline for two weeks. In another instance,

attackers ran a massive number of queries through the victim's search

engine, bringing the server down (35).

| ||||||||||||||||||||||||

![\begin{figure}\centering

\includegraphics*[width=3in]{figures/states.eps}

\vskip -.05in\vskip -.0in

\end{figure}](img11.png) |

A couple of points are worth noting. First, the server behavior is unchanged in the NORMAL mode, and thus the system has no overhead in the common case of no attack. Second, an attack that forces Kill-Bots to switch back-and-forth between the two modes is harmless because the cost for switching is minimal. The only potential switching cost is the need to timeout very long connections that started in the NORMAL mode. Long connections that started in a prior SUSPECTED_ATTACK mode need not be timed out because their users have already been authenticated.

(a) Modifications to Server's TCP Stack: Upon the arrival of a new HTTP request, Kill-Bots sends a graphical test and validates the corresponding answer without allocating any TCBs, socket buffers, or worker processes on the server. We achieve this by a minor modification to the server TCP stack. As shown in Fig. 3, similarly to a typical TCP connection, a Kill-Bots server responds to a SYN packet with a SYN cookie. The client receives the SYN cookie, increases its congestion window to two packets, transmits a SYNACKACK and the first data packet that usually contains the HTTP request. In contrast to a typical connection, the Kill-Bots kernel does not create a new socket upon completion of the TCP handshake. Instead, the SYNACKACK is discarded because the first data packet from the client repeats the same acknowledgment sequence number as the SYNACKACK.

![\begin{figure}\centering

\includegraphics*[width=2.8in]{figures/HG_connect.eps}

\vskip -0.05in\vskip -.05in

\end{figure}](img17.png) |

When the server receives the

client's data packet, it checks whether it is a puzzle answer.

(An answer has an HTTP request of the form

GET /validate?answer=ANSWER![]() , where

, where ![]() is the puzzle id.)

If the packet is not an answer, the server replies with a

new graphical test, embedded in an HTML form

(Fig. 5). Our implementation uses CAPTCHA images

that fit in 1-2 packets. Then, the server immediately closes the

connection by sending a FIN packet and does not wait for the FIN ack.

On the other hand, the client packet could be a puzzle answer. When a

human answers the graphical test, the HTML form

(Fig. 5) generates an HTTP request GET

/validate?answer=ANSWER

is the puzzle id.)

If the packet is not an answer, the server replies with a

new graphical test, embedded in an HTML form

(Fig. 5). Our implementation uses CAPTCHA images

that fit in 1-2 packets. Then, the server immediately closes the

connection by sending a FIN packet and does not wait for the FIN ack.

On the other hand, the client packet could be a puzzle answer. When a

human answers the graphical test, the HTML form

(Fig. 5) generates an HTTP request GET

/validate?answer=ANSWER![]() that reports the answer to the server.

If the packet is an answer, the kernel checks the cryptographic

validity of the ANSWER (see (c) below). If the check succeeds, a

socket is established and the request is delivered to the application.

that reports the answer to the server.

If the packet is an answer, the kernel checks the cryptographic

validity of the ANSWER (see (c) below). If the check succeeds, a

socket is established and the request is delivered to the application.

Note the above scheme preserves TCP congestion control semantics, does not require modifying the client software, and prevents attacks that hog TCBs and sockets by establishing connections that exchange no data.

(b) One Test Per Session:

It would be inconvenient if legitimate users had to solve a puzzle for

every HTTP request or every TCP connection. The Kill-Bots

server gives an HTTP cookie to a user who solves the test

correctly. This cookie allows the user to re-enter the system for a

specific period of time, ![]() (in our implementation,

(in our implementation, ![]() min).

If a new HTTP request is accompanied by a cryptographically valid HTTP

cookie, the Kill-Bots server creates a socket and hands the request to

the application without serving a new graphical test.

min).

If a new HTTP request is accompanied by a cryptographically valid HTTP

cookie, the Kill-Bots server creates a socket and hands the request to

the application without serving a new graphical test.

(c) Cryptographic Support:

When the Kill-Bots server issues a puzzle, it creates a

Token as shown in Fig. 6. The token consists of a 32-bit

puzzle ID ![]() , a 96-bit random number

, a 96-bit random number ![]() , the 32-bit creation time

, the 32-bit creation time

![]() of the token, and a 32-bit collision-resistant hash of

of the token, and a 32-bit collision-resistant hash of ![]() ,

, ![]() ,

and

,

and ![]() along with the server secret. The token is embedded in the

same HTML form as the puzzle (Fig. 6) and sent to the

client.

along with the server secret. The token is embedded in the

same HTML form as the puzzle (Fig. 6) and sent to the

client.

When a user solves the puzzle, the browser reports the answer to the server along with the Kill-Bots token. The server first verifies the token by recomputing the hash. Second, the server checks the Kill-Bots token to ensure the token was created no longer than 4 minutes ago. Next, the server checks if the answer to the puzzle is correct. If all checks are successful, the server creates a Kill-Bots HTTP cookie and gives it to the user. The cookie is created from the token by updating the token creation time and recording the token in the table of valid Kill-Bots cookies. Subsequently, when a user issues a new TCP connection with an existing Kill-Bots cookie, the server validates the cookie by recomputing the hash and ensuring that the cookie has not expired, i.e., no more than 30 minutes have passed since cookie creation. The Kill-Bots server uses the cookie table to keep track of the number of simultaneous HTTP requests that belong to each cookie.

(d) Protecting Against Copy Attacks: What if the attacker solves a single graphical test and distributes the HTTP cookie to a large number of bots? Kill-Bots introduces a notion of per-cookie fairness to address this issue. Each correctly answered graphical test allows the client to execute a maximum of 8 simultaneous HTTP requests. Distributing the cookie to multiple zombies makes them compete among themselves for these 8 connections. Most legitimate Web browsers open no more than 8 simultaneous connections to a single server (20).

To deal with this issue, Kill-Bots distinguishes legitimate users from zombies by their reaction to the graphical test rather than their ability to solve it. Once the zombies are identified, they are blocked from using the server. When presented with a graphical test, legitimate users may react as follows: (1) they solve the test, immediately or after a few reloads; (2) they do not solve the test and give up on accessing the server for some period, which might happen immediately after receiving the test or after a few attempts to reload. The zombies have two options; (1) either imitate human users who cannot solve the test and leave the system after a few trials, in which case the attack has been subverted, or (2) keep sending requests though they cannot solve the test. However, by continuing to send requests without solving the test, the zombies become distinguishable from legitimate users, both human and machine.

In ![]() , Kill-Bots tracks how often a particular IP address has

failed to solve a puzzle. It maintains a Bloom filter (10)

whose entries are 8-bit counters.

Whenever a client is given a graphical puzzle, its IP address is

hashed and the corresponding entries in the Bloom filter are

incremented. In contrast, whenever a client comes back with a correct

answer, the corresponding entries in the Bloom filter are

decremented. Once all the counters corresponding to an IP address

reach a particular threshold

, Kill-Bots tracks how often a particular IP address has

failed to solve a puzzle. It maintains a Bloom filter (10)

whose entries are 8-bit counters.

Whenever a client is given a graphical puzzle, its IP address is

hashed and the corresponding entries in the Bloom filter are

incremented. In contrast, whenever a client comes back with a correct

answer, the corresponding entries in the Bloom filter are

decremented. Once all the counters corresponding to an IP address

reach a particular threshold ![]() (in our implementation

(in our implementation ![]() =32),

the server drops all packets from that IP and gives no further tests

to that client.

=32),

the server drops all packets from that IP and gives no further tests

to that client.

When the attack starts, the Bloom filter has no impact and users are

authenticated using graphical puzzles. Yet, as the zombies receive

more puzzles and do not answer them, their counters pile up. Once a

client has ![]() unanswered puzzles, it will be blocked. As more

zombies get blocked, the server's load will decrease and approach its

normal level. Once this happens the server no longer issues puzzles;

instead it relies solely on the Bloom filter to block requests from

the zombie clients. We call this mode

unanswered puzzles, it will be blocked. As more

zombies get blocked, the server's load will decrease and approach its

normal level. Once this happens the server no longer issues puzzles;

instead it relies solely on the Bloom filter to block requests from

the zombie clients. We call this mode ![]() . Sometimes the attack

rate is so high that even though the Bloom filter catches all attack

packets, the overhead of receiving the packets by the device driver

dominates. If the server notices that both the load is stable and the

Bloom filter is not catching any new zombie IPs, then the server

concludes that the Bloom filter has caught all attack IP addresses and

switches off issuing puzzles, i.e., the server switches to

. Sometimes the attack

rate is so high that even though the Bloom filter catches all attack

packets, the overhead of receiving the packets by the device driver

dominates. If the server notices that both the load is stable and the

Bloom filter is not catching any new zombie IPs, then the server

concludes that the Bloom filter has caught all attack IP addresses and

switches off issuing puzzles, i.e., the server switches to ![]() .

If subsequently the load increases, then the server resumes issuing

puzzles.

.

If subsequently the load increases, then the server resumes issuing

puzzles.

In our experiments, the Bloom filter detects and blocks all offending

clients within a few minutes. In general, the higher the attack rate,

the faster the Bloom filter will detect the zombies and block their

requests. A full description of the Bloom filter is in §5.

We detail Kill-Bots interaction with Web proxies/NATs in §8.

In (39), we have modeled a server that implements an authentication procedure in the interrupt handler. This is a standard location for packet filters and kernel firewalls (38,29,3). It allows dropping unwanted packets as early as possible. Our model is fairly general and independent of how the authentication is performed. The server may be checking client certificates, verifying their passwords, or asking them to solve a puzzle. Furthermore, we make no assumptions about the distribution or independence of the inter-arrival times of legitimate sessions, or of attacker requests, or of service times.

The model in (39) computes the optimal probability with which new clients should be authenticated. Below, we summarize these results and discuss their implications. Table 1 describes our variables.

When a request from an unauthenticated client arrives, the server

attempts to authenticate it with probability ![]() and drop it with

probability

and drop it with

probability ![]() . The optimal value of

. The optimal value of ![]() -i.e., the value

that maximizes the server's goodput (the CPU time spent on serving

HTTP requests) is:

-i.e., the value

that maximizes the server's goodput (the CPU time spent on serving

HTTP requests) is:

Also, compare the optimal goodput, ![]() , with the goodput of a server that implements

authentication without admission control (i.e.,

, with the goodput of a server that implements

authentication without admission control (i.e., ![]() ) given by:

) given by:

|

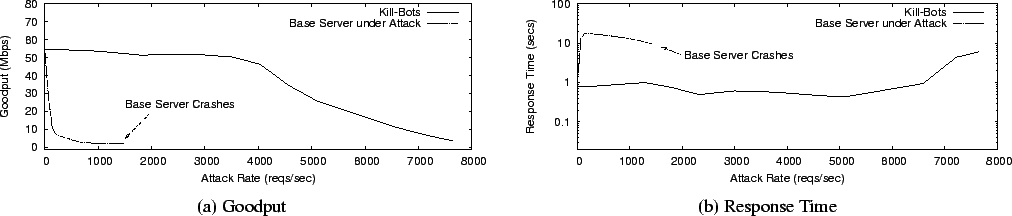

Fig. 7 illustrates the above results:

A Pentium-IV, 2.0GHz 1GB RAM, machine serves 2-pkt puzzles at a peak

rate of 6000/sec (![]() ). Assume, conservatively, that each

HTTP request fetches 15KB files (

). Assume, conservatively, that each

HTTP request fetches 15KB files (![]() ), that a user makes

), that a user makes

![]() requests in a session (

requests in a session (![]() ) and that the normal server

load is 50%. By substituting in

Eqs. 3, 4,

and 2, Fig. 7 compares the

goodput of a server that does not use authentication ( base server)

with the goodput of a server with authentication only (

) and that the normal server

load is 50%. By substituting in

Eqs. 3, 4,

and 2, Fig. 7 compares the

goodput of a server that does not use authentication ( base server)

with the goodput of a server with authentication only (![]() ),

and a server with both authentication and admission control

(

),

and a server with both authentication and admission control

(

![]() ).

The top graph shows that

authentication improves server goodput. The bottom graph shows the

additional improvement from admission control.

).

The top graph shows that

authentication improves server goodput. The bottom graph shows the

additional improvement from admission control.

![\begin{figure}\centering

\includegraphics*[width=2.5in]{figures/controller.eps}

\vskip -.05in\vskip -.1in

\end{figure}](img55.png) |

To deal with the above difficulty, Kill-Bots uses an adaptive scheme.

Based on simple measurements of the server's idle cycles, Kill-Bots

adapts the authentication probability ![]() to gradually approach

to gradually approach

![]() . Let

. Let

![]() denote the fraction of time

the server is idle, serving puzzles and serving HTTP requests

respectively.

We have:

denote the fraction of time

the server is idle, serving puzzles and serving HTTP requests

respectively.

We have:

However, the above adaptation rule is not as simple as it sounds. We

use Fig. 8 to show the relation between the

fraction of time spent on authenticating clients ![]() and that

spent serving HTTP requests

and that

spent serving HTTP requests ![]() . The line labeled ``Zero Idle

Cycles'' refers to the states in which the system is highly congested

. The line labeled ``Zero Idle

Cycles'' refers to the states in which the system is highly congested

![]() . The line labeled

``Underutilized'' refers to the case in which the system has some idle

cycles, i.e.,

. The line labeled

``Underutilized'' refers to the case in which the system has some idle

cycles, i.e.,

![]() . In this case, a fraction

. In this case, a fraction ![]() of all arrivals are served puzzles. The average time to serve a puzzle

is

of all arrivals are served puzzles. The average time to serve a puzzle

is

![]() . Thus, the fraction of time the server is serving

puzzles

. Thus, the fraction of time the server is serving

puzzles

![]() Further, an

Further, an ![]() fraction of legitimate sessions have their HTTP requests

served. Thus, the fraction of time the server serves HTTP is

fraction of legitimate sessions have their HTTP requests

served. Thus, the fraction of time the server serves HTTP is

![]() where

where

![]() is the per-request average service time, and

is the per-request average service time, and

![]() is the average number of requests in a

session. Consequently,

is the average number of requests in a

session. Consequently,

Next, we would like to decide how aggressively to adapt ![]() .

Substituting the values of

.

Substituting the values of ![]() and

and ![]() from the previous

paragraph in Eq. 5 yields:

from the previous

paragraph in Eq. 5 yields:

(a) Socially-engineered attack: In a socially-engineered attack, the adversary tricks a large number of humans to solving puzzles on his behalf. Recently, spammers employed this tactic to bypass graphical tests that Yahoo and Hotmail use to prevent automated creation of email accounts (4). The spammers ran a porn site that downloaded CAPTCHAs from the Yahoo/Hotmail email creation Web page, forced its own visitors to solve these CAPTCHAs before granting access, and used these answers to create new email accounts.

Kill-Bots is much more resilient to socially engineered attacks. In contrast to email account creation where the client is given an ample amount of time to solve the puzzle, puzzles in Kill-Bots expire 4 minutes after they have been served. Thus, the attacker cannot accumulate a store of answers from human users to mount an attack. Indeed, the attacker needs a continuous stream of visitors to his site to be able to sustain a DDoS attack. Further, Kill-Bots maintains a loose form of fairness among authenticated clients, allowing each of them a maximum of 8 simultaneous connections. To grab most of the server's resources, an attacker needs to maintain the number of authenticated malicious clients much larger than that of legitimate users. For this, the attacker needs to control a server at least as popular as the victim Web server. Such a popular site is an asset. It is unlikely that the attacker will jeopardize his popular site to DDoS an equally or less popular Web site. Furthermore, one should keep in mind that security is a moving target; by forcing the attacker to resort to socially engineered attacks, we made the attack harder and the probability of being convicted higher.

(b) Polluting the Bloom filter: The attacker may try to spoof his IP address and pollute the Bloom filter, causing Kill-Bots to mistake legitimate users as malicious. This attack however is not possible because SYN cookies prevent IP spoofing and Bloom filter entries are modified after the SYN cookie check succeeds (Fig. 10).

(c) Copy attacks: In a copy attack, the adversary solves one graphical puzzle, obtains the corresponding HTTP cookie, and distributes it to many zombies to give them access to the Web site. It might seem that the best solution to this problem is to include a secure one-way hash of the IP address of the client in the cookie. Unfortunately, this approach does not deal well with proxies or mobile users. Kill-Bots protects against copy attacks by limiting the number of in-progress requests per puzzle answer. Our implementation sets this limit to 8.

(d) Replay attacks: A session cookie includes a secure hash of the time it was issued and is only valid during a certain time interval. If an adversary tries to replay a session cookie outside its time interval it gets rejected. An attacker may solve one puzzle and attempt to replay the ``answer'' packet to obtain many Kill-Bots cookies. Recall that when Kill-Bots issues a cookie for a valid answer, the cookie is an updated form of the token (Fig 6). Hence, replaying the ``answer'' yields the same cookie.

(e) Database attack: The adversary might try to collect all possible puzzles and the corresponding answers. When a zombie receives a puzzle, it searches its database for the corresponding answer, and sends it back to the server. To protect from this attack, Kill-Bots uses a large number of puzzles and periodically replaces puzzles with a new set. Generation of the graphical puzzles is relatively easy (47). Further, the space of all possible graphical puzzles is huge. Building a database of these puzzles and their answers, distributing this database to all zombies, and ensuring they can search it and obtain answers within 4 minutes (lifetime of a puzzle) is very difficult.

(f) Concerns regarding in-kernel HTTP header processing:

Kill-Bots does not parse HTTP headers; it pattern matches the

arguments to the GET and the Cookie: fields against the

fixed string validate and against a 192-bit Kill-Bots cookie

respectively. The pattern-matching is done in-place, i.e. without

copying the packet and is cheap; ![]() s per

request (§6.1.2).

s per

request (§6.1.2).

(g) Breaking the CAPTCHA: Prior work on automatically solving simple CAPTCHAs exists (33), but such programs are not available to the public for security reasons (33). However, when one type of CAPTCHAs get broken, Kill-Bots can switch to a different kind.

(a) The Puzzle Managerconsists of two components. First, a user-space stub that asynchronously generates new puzzles and notifies the kernel-space portion of the Puzzle Manager of their locations. Generation of the graphical puzzles is relatively easy (1), and can either be done on the server itself in periods of inactivity (at night) or on a different dedicated machine. Also puzzles may be purchased from a trusted third party. The second component is a kernel-thread that periodically loads new puzzles from disk into the in-memory Puzzle Table.

(b) The Request Filter (RF) processes every incoming TCP packet addressed to port 80. It is implemented in the bottom half of the interrupt handler to ensure that unwanted packets are dropped as early as possible.

![\begin{figure}\centering

\begin{tabular}{c}

\includegraphics*[width=3.0in]{figur...

...n]{figures/flowchart2.eps}

\end{tabular}\vskip -.05in\vskip -.1in

\end{figure}](img91_2.png) |

Fig. 10 provides a flowchart representation of the RF

code. When a TCP packet arrives for port 80, the RF first checks

whether it belongs to an established connection in which case the

packet is immediately queued in the socket's receive buffer and left

to standard kernel processing. Otherwise the filter checks whether the

packet starts a new connection (i.e., is it a SYN?), in which case,

the RF replies with a SYNACK that contains a standard SYN cookie. If

the packet is not a SYN, the RF examines whether it contains any data;

if not, the packet is dropped without further processing. Next, the RF

performs two inexpensive tests in an attempt to drop unwanted packets

quickly. It hashes the packet's source IP address and checks whether

the corresponding entries in the Bloom filter have all exceeded ![]() unsolved puzzles, in which case the packet is dropped. Otherwise, the

RF checks that the acknowledgment number is a valid SYN cookie.

unsolved puzzles, in which case the packet is dropped. Otherwise, the

RF checks that the acknowledgment number is a valid SYN cookie.

If the packet passes all of the above checks, the RF looks for 3

different possibilities: (1) this might be the first data packet from

an unauthenticated client, and thus it goes through admission control

and is dropped with probability ![]() . If accepted, the RF sends

a puzzle and terminates the connection immediately; (2) this might be

from a client that has already received a puzzle and is coming back

with an answer. In this case, the RF verifies the answer and assigns

the client an HTTP cookie, which allows access to the server for a

period of time; (3) it is from an authenticated client that has a

Kill-Bots HTTP cookie and is coming back to retrieve more objects. If

none of the above is true, the RF drops this packet. These checks are

ordered according to their increasing cost to shed attackers as

cheaply as possible.

. If accepted, the RF sends

a puzzle and terminates the connection immediately; (2) this might be

from a client that has already received a puzzle and is coming back

with an answer. In this case, the RF verifies the answer and assigns

the client an HTTP cookie, which allows access to the server for a

period of time; (3) it is from an authenticated client that has a

Kill-Bots HTTP cookie and is coming back to retrieve more objects. If

none of the above is true, the RF drops this packet. These checks are

ordered according to their increasing cost to shed attackers as

cheaply as possible.

(c) The Puzzle Table maintains the puzzles available to be served to users. To avoid races between writes and reads to the table, we divide the Puzzle Table into two memory regions, a write window and a read window. The Request Filter fetches puzzles from the read window, while the Puzzle Manager loads new puzzles into the write window periodically in the background. Once the Puzzle Manager loads a fresh window of puzzles, the read and write windows are swapped atomically.

(d) The Cookie Table maintains the number of concurrent connections for each HTTP cookie (limited to 8).

(e) The Bloom Filter

counts unanswered puzzles for each IP address, allowing the Request

Filter to block requests from IPs with more than ![]() unsolved

puzzles. Our implementation sets

unsolved

puzzles. Our implementation sets ![]() . Bloom filters are

characterized by the number of counters

. Bloom filters are

characterized by the number of counters ![]() and the

number of hash functions

and the

number of hash functions ![]() that map keys onto counters. Our

implementation uses

that map keys onto counters. Our

implementation uses ![]() and

and ![]() . Since a potentially large

set of keys (32-bit IPs), are mapped onto much smaller storage (N

counters), Bloom filters are essentially lossy. This means that there

is a non-zero probability that all

. Since a potentially large

set of keys (32-bit IPs), are mapped onto much smaller storage (N

counters), Bloom filters are essentially lossy. This means that there

is a non-zero probability that all ![]() counters corresponding to a

legitimate user pile up to

counters corresponding to a

legitimate user pile up to ![]() due to collisions with

zombies. Assuming

due to collisions with

zombies. Assuming ![]() distinct zombies

and uniformly random hash functions, the probability a legitimate

client is classified as a zombie is approximately

distinct zombies

and uniformly random hash functions, the probability a legitimate

client is classified as a zombie is approximately

![]() . Given our choice of

. Given our choice of ![]() and

and ![]() ,

this probability for 75,000 zombies is

,

this probability for 75,000 zombies is ![]() .

.

(b) Modeling Request Arrivals: Legitimate clients generate requests by replaying HTTP traces collected at the CSAIL web-server and a Debian mirror. Multiple segments of the trace are played simultaneously to control the load generated by legitimate clients. A zombie issues requests at a desired rate by randomly picking a URI (static/dynamic) from the content available on the server.

(c) Experiment Setup: We evaluate Kill-Bots in the wide-area network using the setup in Fig. 11. The Web server is connected to a 100Mbps Ethernet. We launch CyberSlam attacks from 100 different nodes on PlanetLab using different port ranges to simulate multiple attackers per node. Each PlanetLab node simulates up to 256 zombies--a total of 25,600 attack clients. We emulate legitimate clients on machines connected over the Ethernet, to ensure that any difference in their performance is due to the service they receive from the Web server, rather than wide-area path variability.

(d) Emulating Clients: We use WebStone2.5 (2) to emulate both legitimate Web clients and attackers. WebStone is a benchmarking tool that issues HTTP requests to a web-server given a specific distribution over the requests. We extended WebStone in two ways. First, we added support for HTTP sessions, cookies, and for replaying requests from traces. Second, we need the clients to issue requests at specific rate independent of how the web-server responds to the load. For this, we rewrote WebStone's networking code using libasync (28), an asynchronous socket library.

(a) Goodput of legitimate clients: The number of bytes per

second delivered to all legitimate client applications. Goodput

ignores TCP retransmissions and is averaged over 30s windows.

(b) Response times of legitimate clients: The elapsed time

before a request is completed or timed out. We timeout incomplete

requests after 60s.

(c) Cumulative number of legitimate requests dropped: The total

number of legitimate requests dropped since the beginning of the

experiment.

Table 2 shows our microbenchmarks. The overhead for

issuing a graphical puzzle is ![]() s (process http header

+serve puzzle), which means that the CPU can issue puzzles faster than

the time to transmit a 1100B puzzle on our 100Mb/s Ethernet. However,

the authentication cost is dominated by standard kernel code for

processing incoming TCP packets, mainly the interrupts (

s (process http header

+serve puzzle), which means that the CPU can issue puzzles faster than

the time to transmit a 1100B puzzle on our 100Mb/s Ethernet. However,

the authentication cost is dominated by standard kernel code for

processing incoming TCP packets, mainly the interrupts (![]() s per packet (23), about 10 packets per TCP

connection). Thus, the CPU is the bottleneck for authentication and as

shown in §6.4, performing admission control based on CPU

utilization is beneficial.

s per packet (23), about 10 packets per TCP

connection). Thus, the CPU is the bottleneck for authentication and as

shown in §6.4, performing admission control based on CPU

utilization is beneficial.

Note also that checking the Bloom filter is much cheaper than other

operations including the SYN cookie check.

Hence, for incoming requests, we perform the Bloom filter check before

the SYN cookie check (Fig. 14). In ![]() , the Bloom

filter drops all zombie packets; hence performance is limited by the

cost for interrupt processing and device driver access. We conjecture

that using polling drivers (23,30) will improve

performance at high attack rates.

, the Bloom

filter drops all zombie packets; hence performance is limited by the

cost for interrupt processing and device driver access. We conjecture

that using polling drivers (23,30) will improve

performance at high attack rates.

|

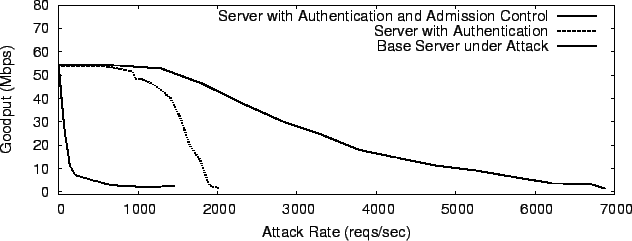

Fig. 12 compares the performance of Kill-Bots with a base (i.e., unmodified) server, as the attack request rate increases. Fig. 12a shows the goodput of both servers. Each point on the graph is the average goodput of the server in the first twelve minutes after the beginning of the attack. A server protected by Kill-Bots endures attack rates multiple orders of magnitude higher than the base server. At very high attack rates, the goodput of the Kill-Bots server decreases as the cost of processing interrupts becomes excessive. Fig. 12b shows the response time of both web servers. The average response time experienced by legitimate users increases dramatically when the base server is under attack. In contrast, the average response time of users accessing a Kill-Bots server is unaffected by the ongoing attack.

![\begin{figure}\begin{tabular}{c}

\includegraphics[width=3.0in]{figures/1.6sum_gp...

...c) Admission probability}

\end{tabular}\vskip -.05in\vskip -.15in

\end{figure}](img97_2.png) |

Fig. 13 shows the dynamics of Kill-Bots during a

CyberSlam attack, with

![]() req/s. The figure also shows

the goodput and mean response time with no attackers, as a reference.

The attack begins at

req/s. The figure also shows

the goodput and mean response time with no attackers, as a reference.

The attack begins at ![]() s and ends at

s and ends at ![]() s. At the beginning

of the attack, the goodput decreases (Fig. 13a) and the

mean response time increases (Fig. 13b). Yet, quickly the

admission probability decreases (Fig. 13c), causing the

mean response time to go back to its value when there is no

attack. The goodput however stays low because of the relatively high

attack rate, and because many legitimate users do not answer puzzles.

After a few minutes, the Bloom filter catches all zombie IPs, causing

puzzles to no longer be issued (Fig. 13c). Kill-Bots now

moves to

s. At the beginning

of the attack, the goodput decreases (Fig. 13a) and the

mean response time increases (Fig. 13b). Yet, quickly the

admission probability decreases (Fig. 13c), causing the

mean response time to go back to its value when there is no

attack. The goodput however stays low because of the relatively high

attack rate, and because many legitimate users do not answer puzzles.

After a few minutes, the Bloom filter catches all zombie IPs, causing

puzzles to no longer be issued (Fig. 13c). Kill-Bots now

moves to ![]() and performs authentication based on just the Bloom

filter. This causes a large increase in

goodput (Fig. 13a) due to both the admission of users who

were earlier unwilling or unable to solve CAPTCHAs and the reduction

in authentication cost. In this experiment, despite the ongoing

CyberSlam attack, Kill-Bots' performance in

and performs authentication based on just the Bloom

filter. This causes a large increase in

goodput (Fig. 13a) due to both the admission of users who

were earlier unwilling or unable to solve CAPTCHAs and the reduction

in authentication cost. In this experiment, despite the ongoing

CyberSlam attack, Kill-Bots' performance in ![]() (

(![]() onwards), is close to that of a server not under attack. Note that

the normal load significantly varies with time and the adaptive

controller (Fig. 13c) reacts to this load

onwards), is close to that of a server not under attack. Note that

the normal load significantly varies with time and the adaptive

controller (Fig. 13c) reacts to this load

![]() , keeping response times low, yet providing

reasonable goodput.

, keeping response times low, yet providing

reasonable goodput.

![\begin{figure}\begin{tabular}{c}

\includegraphics[width=3.0in]{figures/f3tp.eps}...

...probability at the server}

\end{tabular}\vskip -.05in\vskip -.2in

\end{figure}](img98_2.png) |

Fig. 14 compares the performance of the base server against

its Kill-Bots mirror during the Flash Crowd event. The figure shows

the dynamics as functions of time. Each point in each graph is an

average measurement over a 30s interval. We first show the total

throughput of both servers in Fig. 14a. Kill-Bots has

slightly lower throughput for two reasons. First, Kill-Bots attempts

to operate at ![]() =12% idle cycles rather than at zero idle

cycles. Second, Kill-Bots uses some of the bandwidth to serve puzzles.

Fig. 14b reveals that the throughput figures are misleading;

though Kill-Bots has a slightly lower throughput than the base server,

its goodput is substantially higher (almost 100% more). This

indicates that the base server wasted its throughput on

retransmissions and incomplete transfers. Fig. 14c provides

further supporting evidence-Kill-Bots drastically reduces the avg.

response time.

=12% idle cycles rather than at zero idle

cycles. Second, Kill-Bots uses some of the bandwidth to serve puzzles.

Fig. 14b reveals that the throughput figures are misleading;

though Kill-Bots has a slightly lower throughput than the base server,

its goodput is substantially higher (almost 100% more). This

indicates that the base server wasted its throughput on

retransmissions and incomplete transfers. Fig. 14c provides

further supporting evidence-Kill-Bots drastically reduces the avg.

response time.

That Kill-Bots improves server performance during Flash Crowds might

look surprising. Although all clients in a Flash Crowd can answer the

graphical puzzles, Kill-Bots computes an admission probability

![]() such that the system only admits users it can serve. In

contrast, a base server with no admission control accepts additional

requests even when overloaded. Fig. 14d supports this

argument by showing how the admission probability

such that the system only admits users it can serve. In

contrast, a base server with no admission control accepts additional

requests even when overloaded. Fig. 14d supports this

argument by showing how the admission probability ![]() changes

during the Flash Crowd event to allow the server to shed away the

extra load.

changes

during the Flash Crowd event to allow the server to shed away the

extra load.

![\begin{figure}\includegraphics[width=3in]{figures/f3drops.eps}

\vskip -.05in\vskip -.15in

\end{figure}](img103_2.png) |

Finally, Fig. 15 shows the cumulative number of dropped requests and dropped sessions during the Flash Crowd event for both the base server and the Kill-Bots server. Interestingly, the figure shows that Kill-Bots drops more sessions but fewer requests than the base server. The base server accepts new sessions more often than Kill-Bots but keeps dropping their requests. Kill-Bots drops sessions upon arrival, but once a session is admitted it is given a Kill-Bots cookie which allows it access to the server for 30min.

Note that Flash Crowds is just one example of a scenario in which Kill-Bots only needs to perform admission control. Kill-Bots can easily identify such scenarios-high server load but few bad bloom entries. Kill-Bots decouples authentication from admission control by no longer issuing puzzles; instead every user that passes the admission control check gets a Kill-Bots cookie.

|

In §3.2, using a simple model, we showed that authentication is not enough, and good performance requires admission control. Fig. 16 provides experimental evidence that confirms the analysis. The figure compares the goodput of a version of Kill-Bots that uses only puzzle-based authentication, with a version that uses both puzzle-based authentication and admission control. We turn off the Bloom filter in these experiments because we are interested in measuring the goodput gain obtained only from admission control. The results in this figure are fairly similar to those in Fig. 7; admission control dramatically increases server resilience and performance.

The attacker might try to increase the severity of the attack by

prolonging the time until the Bloom filter has discovered all attack

IPs and blocked them, i.e., by delaying

transition from ![]() to

to ![]() . To do so, the attacker uses

the zombie IP addresses slowly, keeping fresh IPs for as long as

possible. We show that the attacker does not gain much by doing so.

Indeed, there is a tradeoff between using all zombie IPs quickly to

create a severe attack for a short period vs. using them slowly to

prolong a milder attack.

. To do so, the attacker uses

the zombie IP addresses slowly, keeping fresh IPs for as long as

possible. We show that the attacker does not gain much by doing so.

Indeed, there is a tradeoff between using all zombie IPs quickly to

create a severe attack for a short period vs. using them slowly to

prolong a milder attack.

![\begin{figure}\begin{tabular}{c}

\includegraphics[width=3.0in]{figures/1.5tp.eps...

... time of legitimate users}

\end{tabular}\vskip -.1in\vskip -.15in

\end{figure}](img107_2.png) |

Fig. 17 shows the performance of Kill-Bots under two

attack strategies; A fast strategy in which the attacker introduces a

fresh zombie IP every 2.5 seconds, and a slow strategy in which the

attacker introduces a fresh zombie IP every 5 seconds. In this

experiment, the total number of zombies in the Botnet is 25000

machines, and the aggregate attack rate is constant and fixed at

![]() req/s. The figure shows that the fast attack

strategy causes a short but high spike in mean response time, and a

substantial reduction in goodput that lasts for a short interval

(about 13 minutes), until the Bloom filter catches the zombies. On the

other hand, the slow strategy affects performance for a longer

interval (

req/s. The figure shows that the fast attack

strategy causes a short but high spike in mean response time, and a

substantial reduction in goodput that lasts for a short interval

(about 13 minutes), until the Bloom filter catches the zombies. On the

other hand, the slow strategy affects performance for a longer

interval (![]() 25 min) but has a milder impact on goodput and response

time.

25 min) but has a milder impact on goodput and response

time.

We compute two types of results. First, we filter out requests from known robots, using the User-Agent field, and compute the fraction of clients who answered our puzzles. We find that 55% of all clients answered the puzzles. It is likely that some of the remaining requests are also from robots but don't use well-known User-Agent identifiers, so this number underestimates the fraction of humans that answered the puzzles. Second, we distinguish between clients who check only the group's main page and leave the server, and those who follow one or more links. We call the latter interested surfers. We would like to check how many of the interested surfers answered the graphical puzzle because these users probably bring more value to the Web site. We find that 74% of interested users answer puzzles. Table 3 summarizes our results. These results may not be representative of users in the Internet, as the behavior of user populations may differ from one server to another.

(a) Denial of Service: Much prior work on DDoS describes specific attacks (e.g., SYN flood (36), Smurf (11), reflector attacks (34) etc.), and presents detection techniques or countermeasures. In contrast to Kill-Bots, prior work focuses on lower layers attacks and bandwidth floods. The backscatter technique (31) detects DDoS sources by monitoring traffic to unused segments of the IP address space. Traceback (40) uses in-network support to trace offending packets to their source. Many variations to the traceback idea detect low-volume attacks (41,5,49). Others detect bandwidth floods by mis-match in the volumes of traffic (16) and some (27) pushback filtering to throttle traffic closer to its source. Anderson et al. (7) propose that routers only forward packets with capabilities. Juels and Brainard (8) first proposed computational client puzzles as a SYN flood defense. Some recent work uses overlays as distributed firewalls (21,6). Clients can only access the server through the overlay nodes, which filter packets. The authors of (32) propose to use graphical tests in the overlay. Their work is different from ours because Kill-Bots uses CAPTCHAs only as an intermediate stage to identify the offending IPs. Further, Kill-Bots combines authentication with admission control and focusses on efficient kernel implementation.

(b) CAPTCHAs: Our authentication mechanism uses graphical tests or CAPTCHAs (47). Several other reverse Turing tests exist (37,14,22). CAPTCHAs are currently used by many online businesses (e.g. Yahoo!, Hotmail).

(c) Flash Crowds and Server Overload: Prior work (18,48) shows that admission control improves server perfomance under overload. Some admission control schemes (15,46) manage OS resources better. Others (20) persistently drop TCP SYN packets in routers to tackle Flash Crowds. Still others (44,42) shed extra load onto an overlay or a peer-to-peer network. Kill-Bots couples admission control with authentication.

Second, Kill-Bots has a few parameters that we have assigned values

based on experience. For example, we set the Bloom filter threshold

![]() because even legitimate users may drop puzzles due to

congestion or indecisiveness and should not be punished. There is

nothing special about 32, we only need a value that is neither too big

nor too small. Similarly, we allow a client that answers a CAPTCHA a

maximum of 8 parallel connections as a trade-off between the improved

performance gained from parallel connections and the desire to limit

the loss due to a compromised cookie.

because even legitimate users may drop puzzles due to

congestion or indecisiveness and should not be punished. There is

nothing special about 32, we only need a value that is neither too big

nor too small. Similarly, we allow a client that answers a CAPTCHA a

maximum of 8 parallel connections as a trade-off between the improved

performance gained from parallel connections and the desire to limit

the loss due to a compromised cookie.

Third, Kill-Bots assumes that the first data packet of the TCP connection will contain the GET and Cookie lines of the HTTP request. In general the request may span multiple packets, but we found this to happen rarely.

Forth, the Bloom filter needs to be flushed eventually since compromised zombies may turn into legitimate clients. The Bloom filter can be cleaned either by resetting all entries simultaneously or by decrementing the various entries at a particular rate. In the future, we will examine which of these two strategies is more suitable.

This document was generated using the LaTeX2HTML translator Version 2002-2-1 (1.70)

Copyright © 1993, 1994, 1995, 1996,

Nikos Drakos,

Computer Based Learning Unit, University of Leeds.

Copyright © 1997, 1998, 1999,

Ross Moore,

Mathematics Department, Macquarie University, Sydney.

The command line arguments were:

latex2html -split 0 -no_navigation -white -show_section_numbers XXX

The translation was initiated by Srikanth Kandula on 2005-03-28

|

This paper was originally published in the

Proceedings of the 2nd Symposium on Networked Systems Design and Implementation,

May 2–4, 2005, Boston, MA, USA Last changed: 2 May 2005 aw |

|