|

||||||||||||||

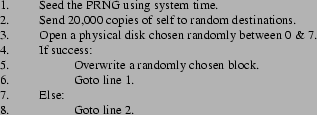

where Why Witty fails to scan the entire address space. The first and somewhat surprising outcome from investigating how Witty constructs random destination addresses is the observation that Witty fails to scan the entire IP address space. This means that, while Witty spread at a very high speed (infecting 12,000 hosts in 75 minutes), due to a subtle error in its use of pseudo-random numbers about 10% of vulnerable hosts were never infected with the worm. To understand this flaw in full detail, we first visit the motivation for the use of only the top 16 bits of the 32 bit results returned by Witty's LC PRNG. This was recommended by Knuth [8], who showed that the high order bits are ``more random'' than the lower order bits returned by the LC PRNG. Indeed, for this very reason, several implementations of the rand() function, including the default C library of Windows and SunOS, return a 15 bit number, even though their underlying LC PRNG uses the same parameters as the Witty worm and produces 32 bit numbers. However, this advice was taken out of context by the author of the Witty worm. Knuth's advice applies when uniform randomness is the desired property, and is valid only when a small number of random bits are needed. For a worm trying to maximize the number of infected hosts, one reason for using random numbers while selecting destinations is to avoid detection by intrusion detection systems that readily detect sequential scans. A second reason is to maintain independence between the portions of the address-space scanned by individual infectees. Neither of these reasons actually requires the kind of ``good randomness'' provided by following Knuth's advice of picking only the higher order bits. As discussed in § 2, for specific values of the parameters We enumerated Witty's entire ``orbit'' and found that there are 431,554,560 32-bit numbers that can never be generated. This corresponds to 10.05% of the IP address space that was never scanned by Witty. On further investigation, we found these unscanned addresses to be fairly uniformly distributed over the 32-bit address space of IPv4. Hence, it is reasonable to assume that approximately the same fraction of the populated IP address space was missed by Witty. In other words, even though the portions of IP address space that are actually used (populated) are highly clustered, because the addresses that Witty misses are uniformly distributed over the space of 32-bit integers, it missed roughly the same fraction of address among the set of IP addresses in actual use. Observing that

Witty does not visit some addresses at all, one might ask whether it

visits some addresses more frequently than others. Stated more formally,

given that the period of Witty's PRNG is

During the first 75 minutes of the release of the Witty worm, the CAIDA telescope saw 12,451 unique IP addresses as infected. Following the above discussion, we classified these addresses into three classes. There were 10,638 (85.4%) addresses that were scanned just once in an orbit, i.e., addresses that experienced a normal scan rate. Another 1,409 addresses (11.3%) were scanned twice in an orbit, hence experiencing twice the normal growth rate. A third class of 404 (3.2%) addresses belonged to the set of addresses never scanned by the worm. At first blush one might wonder how these latter could possibly appear, but we can explain their presence as reflecting inclusion in an initial ``hit list'' (see below), operating in promiscuous mode, or aliasing due to multi-homing, NAT or DHCP. Figure 3 compares the growth curves for the three classes of addresses. Notice how the worm spreads faster among the population of machines that experience double the normal scan rate. 1,000 sec from its release, Witty had infected half of the doubly-scanned addresses that it would infect in the first 75 min. On the other hand, in the normally-scanned population, it had only managed to infect about a third of the total victims that it would infect in 75 min. Later in the hour, the curve for the doubly-scanned addresses is flatter than that for the normally-scanned ones, indicating that most of the victims in the doubly-scanned population were already infected at that point. The curve for infectees whose source address was never scanned by Witty is particularly interesting. Twelve of the never-scanned systems appear in the first 10 seconds of the worm's propagation, very strongly suggesting that they are part of an initial hit-list. This explains the early jump in the plot: it's not that such machines are overrepresented in the hit-list, rather they are underrepresented in the total infected population, making the hit-list propagation more significant for this population. Another class of never-scanned infectees are those passively monitoring a network link. Because these operate in promiscuous mode, their ``cross section'' for becoming infected is magnified by the address range routed over the link. On average, these then will become infected much more rapidly than normal over even doubly-scanned hosts. We speculate that these infectees constitute the remainder of the early rise in the appearance of never-scanned systems. Later, the growth rate of the never-scanned systems substantially slows, lagging even the single-scanned addresses. Likely these remaining systems reflect infrequent aliasing due to multihoming, NAT, or DHCP. Identifying

Patient Zero. Along with ``Can all addresses

be reached by scans?'', another question to ask is ``Do all sources

indeed travel on the PRNG orbit?'' Surprisingly, the answer is No.

There is a single Witty source that consistently fails to follow the

orbit. Further inspection reveals that the source (i) always

generates addresses of the form We argue that these peculiarities add up to a strong likelihood that this unique host reflects Patient Zero, the system used by the attacker to seed the worm initially. Patient Zero was not running the complete Witty worm but rather a (not fully tested) tool used to launch the worm. To our knowledge, this represents the first time that Patient Zero has been identified for a major worm outbreak. (The only related case of which we are aware was the Melissa email virus [3], where the author posted the virus to USENET as a means of initially spreading his malcode, and was traced via USENET headers.) We have conveyed the host's IP address (which corresponds to a European retail ISP) to law enforcement. If all Patient Zero

did was send packets of the form

|

|||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

||||||||||||||||||||||||||||||||||||||||||||

Table 1 lists the top-level domains for the unique

second-level domains that demonstrated ![]() % divergence

in estimated effective bandwidth. Owing to its connection to

Internet-2, the CAIDA telescope saw packets from .edu with significantly

fewer losses than the Wisconsin telescope, which in turn had a better

reachability from hosts in the .net and .com domains. Clearly,

telescopes are not ``ideal'' devices, with perfectly balanced

connectivity to the rest of the Internet, as implicitly assumed by

extrapolation-based techniques. Rather, what a telescope sees during an

event of large enough volume to saturate high-capacity Internet links

is dictated by its specific location on the Internet topology. This

finding complements that of [4],

which found that the (low-volume) background radiation seen at

different telescopes likewise varies significantly with location, beyond

just the bias of some malware to prefer nearby addresses when scanning.

% divergence

in estimated effective bandwidth. Owing to its connection to

Internet-2, the CAIDA telescope saw packets from .edu with significantly

fewer losses than the Wisconsin telescope, which in turn had a better

reachability from hosts in the .net and .com domains. Clearly,

telescopes are not ``ideal'' devices, with perfectly balanced

connectivity to the rest of the Internet, as implicitly assumed by

extrapolation-based techniques. Rather, what a telescope sees during an

event of large enough volume to saturate high-capacity Internet links

is dictated by its specific location on the Internet topology. This

finding complements that of [4],

which found that the (low-volume) background radiation seen at

different telescopes likewise varies significantly with location, beyond

just the bias of some malware to prefer nearby addresses when scanning.

6 Deducing the seed

![\includegraphics[scale=0.3]{perm5.eps}](img73.png) Fig 10 (a) [Sequence of packets generated at the infectee. ] ![\includegraphics[scale=0.3]{perm6.eps}](img74.png) Fig 10 (b) [Packets seen at the telescope. Notice how packets immediately before or after a failed disk-write are separated by ![\includegraphics[scale=0.3]{perm91.eps}](img75.png) Fig 10 (c)[Translating these special intervals back by multiples of 20,000 gives bounds on where the seed can lie.] |

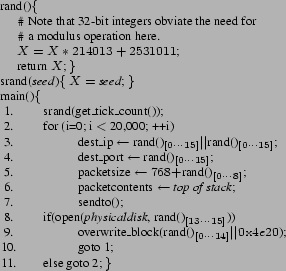

Cracking the seeds -- System uptime. We now describe how we can use the telescope observations to deduce the exact values of the seeds used to (re)initialize Witty's PRNG. Recall from Fig. 2 that the Witty worm attempts to open a disk after every 20,000 packets, and reseeds its PRNG on success. To get a seed with reasonable local entropy, Witty uses the value returned by the Get_Tick_Count system call, a counter set to zero at boot time and incremented every millisecond.

In § 4 we have developed the capability to reverse-engineer the state of the PRNG at an infectee from packets received at the telescope. Additionally, Eqns 1 and 2 give us the ability to crank the PRNG forwards and backwards to determine the state at preceding and successive packets. Now, for a packet received at the telescope, if we could identify the precise number of calls to the function rand between the reseeding of the PRNG and the generation of the packet, simply cranking the PRNG backwards the same number of steps would reveal the value of the seed. The difficulty here is that for a given packet we do not know which ``generation'' it is since the PRNG was seeded. (Recall that we only see a few of every thousand packets sent.) We thus have to resort to a more circuitous technique.

We split the description of our approach into two parts: a technique for identifying a small range in the orbit (permutation sequence) of the PRNG where the seed must lie, and a geometric algorithm for finding the seeds from this candidate set.

Identifying a

limited range within which the seed must lie. Figure 10 shows a graphical view of our technique for

restricting the range where the seed can potentially lie. Figure 10(a) shows the sequence of packets as generated at

the infectee. The straight line at the top of the figure represents the

permutation-space of the PRNG, i.e., the sequence of numbers ![]() as generated by the PRNG. The

second horizontal line in the middle of the figure represents a small

section of this sequence, blown-up to show the individual numbers in the

sequence as ticks on the horizontal line. Notice how each packet

consumes exactly four random numbers, represented by the small arcs

straddling four ticks.

as generated by the PRNG. The

second horizontal line in the middle of the figure represents a small

section of this sequence, blown-up to show the individual numbers in the

sequence as ticks on the horizontal line. Notice how each packet

consumes exactly four random numbers, represented by the small arcs

straddling four ticks.

Only a small fraction of packets generated at the infectee reach the telescope. Figure 10(b) shows four such packets. By cranking forward from the PRNG's state at the first packet until the PRNG reaches the state at the second packet, we can determine the precise number of calls to the rand function in the intervening period. In other words, if we start from the state corresponding to the first packet and apply Eqn 1 repeatedly, we will eventually (though see below) reach the state corresponding to the second packet, and counting the number of times Eqn 1 was applied gives us the precise number of random numbers generated between the departure of these two packets from the infectee. Note that since each packet consumes four random numbers (the inner loop of lines 2-7 in Fig. 2), the number of random numbers will be a multiple of four.

However, sometimes

we find the state for a packet received at the telescope does not

lie within a reasonable number of steps (300,000 calls to the PRNG) from

the state of the preceding packet from the same infectee. This signifies

a potential reseeding event: the worm finished its batch of 20,000

packets and attempted to open a disk to overwrite a random block.

Recall that there are two possibilities: the random disk picked by the

worm exists, in which case it overwrites a random block and (regardless

of the success of that attempted overwrite) reseeds the PRNG, jumping

to an arbitrary location in the permutation space (control flowing

through lines 8 ![]() 9

9 ![]() 10

10 ![]() 1

1 ![]() 2 in Fig. 2); or the disk

does not exist, in which case the worm continues for another 20,000

packets without reseeding (control flowing through lines 8

2 in Fig. 2); or the disk

does not exist, in which case the worm continues for another 20,000

packets without reseeding (control flowing through lines 8 ![]() 11

11 ![]() 2 in Fig. 2). Note that in

either case the worm consumes a random number in picking the disk.

2 in Fig. 2). Note that in

either case the worm consumes a random number in picking the disk.

Thus, every time

the worm finishes a batch of 20,000 packets, we will see a discontinuity

in the usual pattern of ![]() random numbers between observed

packets. We will instead either find that the packets correspond to

random numbers between observed

packets. We will instead either find that the packets correspond to ![]() random numbers between them (disk open failed, no

reseeding); or that they have no discernible correspondence (disk open

succeeded, PRNG reseeded and now generating from a different point in

the permutation space).

random numbers between them (disk open failed, no

reseeding); or that they have no discernible correspondence (disk open

succeeded, PRNG reseeded and now generating from a different point in

the permutation space).

This gives us the ability to identify intervals within which either failed disk writes occurred, or reseeding events occurred. Consider the interval straddled by the first failed disk write after a successful reseeding. Since the worm attempts disk writes every 20,000 packets, this interval translated back by 20,000 packets (80,000 calls to the PRNG) must straddle the seed. In other words, the beginning of this special interval must lie no more than 20,000 packets away from the reseeding event, and its end must lie no less than that distance away. This gives us upper and lower bounds on where the reseeding must have occurred. A key point is that these bounds are in addition to the bounds we obtain from observing that the worm reseeded. Similarly, if the worm fails at its next disk write attempt too, the interval straddling that failed write, when translated backwards by 40,000 packets (160,000 calls to the PRNG), gives us another pair of lower and upper bounds on where the seed must lie. Continuing this chain of reasoning, we can find multiple upper and lower bounds. We then take the max of all lower bounds and the min of all upper bounds to get the tightest bounds, per Figure 10(c).

A geometric algorithm to detect the seeds. Given this procedure, for each reseeding event we can find a limited range of potential in the permutation space wherein the new seed must lie. (I.e., the possible seeds are consecutive over a range in the permutation space of the consecutive 32-bit random numbers as produced by the LC PRNG; they are not consecutive 32-bit integers.) Note, however, that this may still include hundreds or thousands of candidates, scattered over the full range of 32-bit integers.

Which is the

correct one? We proceed by leveraging two key points: (i) for

most sources we can find numerous reseeding events, and (ii) the

actual seeds at each event are strongly related to one another by the amount

of time that elapsed between the events, since the seeds are clock

readings. Regarding this second point, recall that the seeds are

read off a counter that tracks the number of milliseconds since system

boot-up. Clearly, this value increases linearly with time. So if we

observe two reseeding events with timestamps (at the telescope) of ![]() and

and ![]() , with corresponding seeds

, with corresponding seeds ![]() and

and ![]() , then because clocks progress linearly

with time,

, then because clocks progress linearly

with time, ![]() . In other words, if the infectee

reseeded twice, then the value of the seeds must differ by approximately

the same amount as the difference in milliseconds in the timestamps of

the two packets seen immediately after these reseedings at the

telescope. Extending this reasoning to

. In other words, if the infectee

reseeded twice, then the value of the seeds must differ by approximately

the same amount as the difference in milliseconds in the timestamps of

the two packets seen immediately after these reseedings at the

telescope. Extending this reasoning to ![]() reseeding events,

we get

reseeding events,

we get ![]() ,

, ![]() . This implies that the

. This implies that the ![]() points

points ![]() should (approximately)

lie along a straight line with slope 1 (angle of

should (approximately)

lie along a straight line with slope 1 (angle of ![]() ) when plotting potential seed value against time.

) when plotting potential seed value against time.

We now describe a

geometric algorithm to detect such a set of points in a 2-dimensional

plane. The key observation is that when ![]() points lie close

to a straight line of a given slope, then looking from any one of these

points along that slope, the remaining points should appear clustered in

a very narrow band. More formally, if we project an angular beam of

width

points lie close

to a straight line of a given slope, then looking from any one of these

points along that slope, the remaining points should appear clustered in

a very narrow band. More formally, if we project an angular beam of

width ![]() from any one of these points, then the

remaining points should lie within the beam, for reasonably small values

of

from any one of these points, then the

remaining points should lie within the beam, for reasonably small values

of ![]() . On the other hand, other, randomly

scattered points on the plane will see a very small number of other

points in the beam projected from them.

. On the other hand, other, randomly

scattered points on the plane will see a very small number of other

points in the beam projected from them.

The algorithm

follows directly from this observation. It proceeds in iterations.

Within an iteration, we project a beam of width ![]() along the

along the ![]() line from each point in the plane. The point is

assigned a score equal to the number of other points that lie in its

beam. Actual seeds are likely to get a high score because they would all

lie roughly along a

line from each point in the plane. The point is

assigned a score equal to the number of other points that lie in its

beam. Actual seeds are likely to get a high score because they would all

lie roughly along a ![]() line. At the end of the iteration, all points with

a score smaller than some threshold (say

line. At the end of the iteration, all points with

a score smaller than some threshold (say ![]() ) are

discarded. Repeating this process in subsequent iterations quickly

eliminates all but the

) are

discarded. Repeating this process in subsequent iterations quickly

eliminates all but the ![]() seeds, which keep supporting

high scores for each other in all iterations.

seeds, which keep supporting

high scores for each other in all iterations.

We find this

algorithm highly effective given enough reseeding events. Figure 11 presents the results of the computation of

system uptime of 784 machines in the infectee population. These

infectees were chosen from the set that contributed enough packets to

allow us to use our mechanism for estimating the seed. Since the

counter used by Witty to reseed its PRNG is only 32 bits wide, it will

wrap-around every ![]() milliseconds, which is approximately

49.7 days. The results could potentially be distorted due to this

effect (but see below).

milliseconds, which is approximately

49.7 days. The results could potentially be distorted due to this

effect (but see below).

There is a clear domination of short-lived machines, with approximately 47% having uptimes of less than five days. On the other hand, there are just five machines that had an uptime of more than 40 days. The sharp drop-off above 40 days leads us to conclude that the effects due to the wrapping-around of the counter are negligible.

The highest number of machines were booted on the same day as the spread of the worm. There are prominent troughs during the weekends -- recall that the worm was released on a Friday evening Pacific Time, so the nearest weekend had passed 5 days previously -- and heightened activity during the working days.

One feature that stands out is the presence of two modes, one at 29 days and the second at 36/37 days. On further investigation, we found that the machines in the first mode all belonged to a set of 135 infectees from the same /16 address block, and traceroutes revealed they were situated at a single US military installation. Similarly, machines in the second mode belonged to a group of 81 infectees from another /16 address block, belonging to an educational institution. However, while machines in the second group appeared at the telescope one-by-one throughout the infection period, 110 of the 135 machines in the first group appeared at the telescope within 10 seconds of Witty's onset. Since such a fast spread is not feasible by random scanning of the address space, the authors of [18] concluded that these machines were either part of a hit-list or were already compromised and under the control of the attacker. Because we can fit the actions of these infectees with running the full Witty code, including PRNG reseeding patterns that match the process of overwriting disk blocks, this provides evidence that these machines were not specially controlled by the attacker (unlike the Patient Zero machine), and thus we conclude that they likely constitute a hit-list. Returning then to the fact that these machines were all rebooted exactly 29 days before the onset of the worm, we speculate that the reboot was due to a facility-wide system upgrade; perhaps the installation of system software such as Microsoft updates (a critical update had been released on Feb. 10, about 10 days before the simultaneous system reboots), or perhaps the installation of the vulnerable ISS products themselves. We might then speculate that the attacker knew about the ISS installation at the site (thus enabling them to construct a hit-list), which, along with the attacker's rapid construction of the worm indicating they likely knew about the vulnerability in advance [21], suggests that the attacker was an ISS ``insider.''

|

Number of disks. Once we can recover the seed used at the beginning of a sequence of packets, we can use its value as an anchor to mark off the precise subsequent actions of the worm. Recall from Fig. 2 that the worm generates exactly 20,000 packets in its inner loop, using 80,000 random numbers in the process. After exiting the inner loop, the worm uses three bits from the next random number to decide which physical disk it will attempt to open. Starting from the seed, this is exactly the 80,001th number in the sequence generated by the PRNG. Thus, knowledge of the seed tells us exactly which disk the worm attempts to open. Furthermore, as discussed above we can tell whether this attempt succeeded based on whether the worm reseeds after the attempt. We can therefore estimate the number of disks on the infectee, based on which of the attempts for drives in the range 0 to 7 lead to a successful return from the open system call. Table 2 shows the number of disks for 100 infectees, calculated using this approach. The majority of infectees had just one or two disks, while we find a few with up to five disks. Since the installation of end-system firewall software was a prerequisite for infection by Witty, the infectee population is more likely to contain production servers with multiple disks.

Exploration of

infection graph. Knowledge of the precise seeds allows us to

reconstruct the complete list of packets sent by each infectee.

Additionally, the large size of our telescope allows us to detect an

infectee within the first few seconds (few hundred packets) of its

infection. Therefore if an infectee is first seen at a time ![]() , we can inspect the list of packets sent by all other

infectees active within a short preceding interval, say

, we can inspect the list of packets sent by all other

infectees active within a short preceding interval, say ![]() sec

sec![]() , to see which sent a packet

to the new infectee, and thus is the infectee's likely ``infector.'' to

select the most likely ``infector''.

, to see which sent a packet

to the new infectee, and thus is the infectee's likely ``infector.'' to

select the most likely ``infector''.

The probability of

more than one infectee sending a worm packet to the same new infectee at

the time of its infection is quite low. With about 11,000 pkts/sec seen

at a telescope with 1/256 of the entire Internet address space, and

suffering 30% losses due to congestion (§ 5),

the aggregate scanning rate of the worm comes out to around ![]() pkts/sec. With

more than

pkts/sec. With

more than ![]() addresses to scan, the probability that more than one infectee scans the

same address within the same 10 second interval is around 1%.

addresses to scan, the probability that more than one infectee scans the

same address within the same 10 second interval is around 1%.

Figure 12 shows scan packets from infected sources that

targeted other infectees seen at the telescope. The ![]() -coordinate

gives

-coordinate

gives ![]() , the packet's estimated sending

time, and the

, the packet's estimated sending

time, and the ![]() -coordinate gives the difference between

-coordinate gives the difference between ![]() , the time when the target

infectee first appeared at the telescope, and

, the time when the target

infectee first appeared at the telescope, and ![]() . A small positive value of

. A small positive value of ![]() raises strong suspicions that the given scan packet is responsible for

infecting the given target. Negative values mean the target was already

infected, while larger positive values imply the scan failed to infect

the target for some reason -- it was lost, (Recall that the effective

bandwidth of most infectees is much lower than the access bandwidth,

indicating heavy loss in their generated traffic.) or blocked due to the

random destination port it used, or simply the target was not connected

to the Internet at that time. (Note that the asymptotic curves at the

top and bottom correspond to truncation effects reflecting the upper and

lower bounds on infection times.)

raises strong suspicions that the given scan packet is responsible for

infecting the given target. Negative values mean the target was already

infected, while larger positive values imply the scan failed to infect

the target for some reason -- it was lost, (Recall that the effective

bandwidth of most infectees is much lower than the access bandwidth,

indicating heavy loss in their generated traffic.) or blocked due to the

random destination port it used, or simply the target was not connected

to the Internet at that time. (Note that the asymptotic curves at the

top and bottom correspond to truncation effects reflecting the upper and

lower bounds on infection times.)

The clusters at

extreme values of ![]() in Figure 12 mask a very sharp additional

cluster, even using the log-scaling. This lies in the region

in Figure 12 mask a very sharp additional

cluster, even using the log-scaling. This lies in the region ![]() .

In Figure 13, we plot the number of scans in

10 second buckets against

.

In Figure 13, we plot the number of scans in

10 second buckets against ![]() .

The very central sharp peak corresponds to the interval 0-to-10 seconds

-- a clear mark of the dispatch of a successful scan closely followed by

the appearance of the victim at the telescope. We plan to continue our

investigation of infector-infectee relationships, hoping to produce an

extensive ``lineage'' of infection chains for use in models of worm

propagation.

.

The very central sharp peak corresponds to the interval 0-to-10 seconds

-- a clear mark of the dispatch of a successful scan closely followed by

the appearance of the victim at the telescope. We plan to continue our

investigation of infector-infectee relationships, hoping to produce an

extensive ``lineage'' of infection chains for use in models of worm

propagation.

7 Discussion

While we have focused on the Witty worm in this paper, the key idea is much broader. Our analysis demonstrates the potential richness of information embedded in network telescope observations, ready to be revealed if we can frame a precise model of the underlying processes generating the observations. Here we discuss the breadth and limitations of our analysis, and examine general insights beyond the specific instance of the Witty worm.

Candidates for similar analysis. The binary code of all Internet worms is available by definition, making them candidates for disassembly and analysis. Similarly, copies of many scanning and flooding tools have been captured by white hat researchers, and traces observed at telescopes of probing or attack traffic (or backscatter) from the operation of such tools provide candidates for similar analysis. A preliminary assessment we performed of ten well-known DoS attack tools revealed that six of them use simple PRNGs with unsophisticated seeds, while the other four use no random number generation at all. Even with limited knowledge of the operation of such tools, we should in principle be able to analyze logs of their attack traffic or backscatter with a similar intent of reconstructing the sequence of events in the automation of the attack, potentially leading to information about the attacking hosts, their interaction with the network, and other forensic clues.

Diversity of PRNGs. Our analysis was greatly facilitated by the use of a linear congruential PRNG by Witty's author. Reverse-engineering the state of a more complex PRNG could be much more difficult. In the extreme, a worm using a cryptographically strong hash function with a well-chosen key as its PRNG would greatly resist such reverse engineering. However, there are several practical reasons that support the likelihood of many attackers using simpler PRNGs.

Implementing good

PRNGs is a complicated task [8],

especially when constrained by limits on code size and the difficulty

of incorporating linkable libraries. Large-scale worms benefit greatly

from as self-contained a design as possible, with few dependencies on

platform support, to maximize the set of potential victims. Worms have

also proven difficult to fully debug -- virtually all large-scale worms

have exhibited significant bugs -- which likewise argues for keeping

components as simple as possible. Historically, worm authors have

struggled to implement even the LC PRNG correctly. The initial version

of Code Red failed to seed the PRNG with any entropy, leading to all

copies of the worm scanning exactly the same sequence of addresses [2]. Slammer's PRNG

implementation had three serious errors, one where the author used a

value of the parameter ![]() in the LC equation (Eqn. 1) that was larger than the correct value by 1 due to

an incorrect 2's complement conversion, another where this value was

subtracted from instead of added to the term

in the LC equation (Eqn. 1) that was larger than the correct value by 1 due to

an incorrect 2's complement conversion, another where this value was

subtracted from instead of added to the term ![]() in Eqn 1, and finally the (mis)use of an OR instruction

rather than XOR to clear a key register [11]. In addition, sources

of local entropy at hosts are often limited to a few system variables,

complicating the task of seeding the PRNG in a fashion strong enough to

resist analysis. Thus it is conceivable that worm authors will have

difficulty implementing bug-free, compact versions of sophisticated

PRNGs.

in Eqn 1, and finally the (mis)use of an OR instruction

rather than XOR to clear a key register [11]. In addition, sources

of local entropy at hosts are often limited to a few system variables,

complicating the task of seeding the PRNG in a fashion strong enough to

resist analysis. Thus it is conceivable that worm authors will have

difficulty implementing bug-free, compact versions of sophisticated

PRNGs.

In addition, today's worm authors have little incentive to implement a complex PRNG. As long as their goals are confined to effectively scanning the IP address space and maximizing the worm's infection rate, simple PRNGs suffice. Hiding one's tracks while releasing a worm can already be accomplished by using a chain of compromised victims as stepping stones. Indeed, the fact that Witty's author left Patient Zero running with a separate program for spreading the worm was purely a mistake on his/her part. As discussed earlier, the code it ran scanned a very small subset of the IP address space, and did not manage to produce even one infection during scanning.

Thus, there are significant factors that may lead to the continued use by worms of simple PRNGs such as LC, which, along with the availability of disassembled code, will facilitate the development of structural models of worm behavior to use in conjunction with telescope observations for detailed reconstructions.

General observations from this work. Our study has leveraged the special conditions produced by a worm's release to measure numerous features of its victim population and the network over which it spread. While specific estimation tricks developed in this paper might not apply to other telescope observations in a ``cookbook'' manner, the insight that telescope observations carry rich information that can be heavily mined armed with a sufficiently detailed model of the underlying source processes is of major significance for the future study of such data.

Understanding the structure of the scanning techniques used by worms (and empirical data on hitherto unmeasured quantities such as distribution of access bandwidth) can be crucial for developing correct models of their spread -- a case made for example by our observation of the doubly-scanned and never-scanned portions of the address space, and their multi-factored impact on the worm's growth.

Finally, we would emphasize that the extraction of the features we have assessed was a labor-intensive process. Indeed, for many of them we did not initially apprehend even the possibility of analyzing them. This highlights not only the difficulty of such a forensic undertaking, but also its serendipitous nature. The latter holds promise that observations of other Internet-scale events in the future, even those of significantly different details or nature, will likely remain open to the possibility of such analysis.

8 Conclusions

A worm's propagation is a rare but spectacular event in today's networks. Apart from the obvious disruptions and damage, worms also stress the network in unique ways and at scales unmatched by any controlled measurement experiments. One could say that a worm's release illuminates, for a few moments, dark corners of the network just as supernovae illuminate dark and distant corners of the universe, providing rich observations to telescopes that gather a mere sliver of the enormous radiant flux. But within the overwhelming mass of observed data lies a very structured process that can be deciphered and understood -- if studied with the correct model.

We have shown how a fine-grained understanding of the exact control flow of a particular worm -- especially its seeding and use of a pseudo-random number generator -- when coupled with network telescope data enables a detailed reconstruction of nearly the entire chain of events that followed the worm's release. In the process we have unearthed measurements of quantities such as access bandwidth and system up-time that are otherwise unobservable to the ``naked eye'' of researchers studying systems from afar. These measurements have applicability to a number of modeling and simulation studies, both in particular to worm propagation analysis, and more generally as a source of rarely-available empirical data. Finally, we have demonstrated the forensic power that such analysis can provide, marshalling strong evidence that the Witty worm specifically targeted a US military base and was launched via an IP address corresponding to a European ISP. We investigated an alternate explanation that instead these machines were passively monitoring large address regions and hence were infected much more quickly, but can discount this possibility because a ``lineage'' analysis reveals that a significant number of the machines did not receive any infection packets on even their entire local /16 prior to their own scanning activity arriving at the telescope.

Acknowledgments

This work was supported by the National Science Foundation under the following grants: Collaborative Cybertrust NSF-0433702, ITR/ANI-0205519, NRT-0335290, and ANI-0238315, for which we are grateful. We thank Colleen Shannon and David Moore at CAIDA, and Paul Barford and Vinod Yegneswaran at the University of Wisconsin for providing access to the telescope traces and answering numerous questions about them, and our CCIED colleagues and Ellen Zegura for valuable feedback.

Support for the Witty Worm Dataset and the UCSD Network Telescope are provided by Cisco Systems, Limelight Networks, the US Dept. Homeland Security, the National Science Foundation, and CAIDA, DARPA, Digital Envoy, and CAIDA Members.

References

-

- 1

- Michael Bailey, Evan Cooke, Farnam Jahanian, Jose Nazario, and

David Watson.

The Internet motion sensor: A distributed blackhole monitoring system.

In Proc. NDSS, 2005. - 2

- CAIDA.

CAIDA Analysis of Code-Red, https://www.caida.org/analysis/security/code-red/ - 3

- CERT.

CERT Advisory CA-1999-04 Melissa Macro Virus, https://www.cert.org/advisories/CA-1999-04.html - 4

- Evan Cooke, Michael Bailey, Z. Morley Mao, David Watson, Farnam

Jahanian, and Danny McPherson.

Toward understanding distributed blackhole placement.

In Proc. ACM CCS Workshop on Rapid Malcode (WORM), October 2004. - 5

- Domas Mituzas.

FreeBSD Scalper Worm, https://www.dammit.lt/apache-worm/ - 6

- eEye Digital Security.

.ida ``Code Red'' Worm, https://www.eeye.com/html/Research/Advisories/AL20010717.html. - 7

- Mark Eichin and Jon Rochlis.

With microscope and tweezers: An analysis of the Internet virus of november 1988.

In Proc. IEEE Symposium on Research in Security and Privacy, 1989. - 8

- Donald E. Knuth.

The Art of Computer Programming, Second Edition, volume 2, Seminumerical Algorithms.

Addison-Wesley, 1981. - 9

- K. Kortchinsky.

Black Ice worm disassembly.

https://www.caida.org/analysis/security/witty/BlackIceWorm.html. - 10

- D. Moore, C. Shannon, G. Voelker, and S. Savage.

Network telescopes: Technical report.

Technical report, Cooperative Association for Internet Data Analysis (CAIDA), July 2004. - 11

- David Moore, Vern Paxson, Stefan Savage, Colleen Shannon, Stuart

Staniford, and Nicholas Weaver.

Inside the Slammer Worm.

IEEE Security & Privacy, pages 33-39, July/August 2003. - 12

- David Moore, Vern Paxson, Stefan Savage, Colleen Shannon, Stuart

Staniford, and Nicholas Weaver.

The Spread of the Sapphire/Slammer Worm, 2003. - 13

- David Moore, Colleen Shannon, and k claffy.

Code-Red: a Case Study on the Spread and Victims of an Internet Worm.

In Proceedings of the Second Internet Measurement Workshop, pages 273-284, November 2002. - 14

- David Moore, Geoffrey M. Voelker, and Stefan Savage.

Inferring Internet Denial-of-Service Activity.

In Proceedings of the 10th USENIX Security Symposium, pages 9-22. USENIX, August 2001. - 15

- Ruoming Pang, Vinod Yegneswaran, Paul Barford, Vern Paxson, and

Larry Peterson.

Characteristics of Internet background radiation.

In Proc. ACM Internet Measurement Conference, October 2004. - 16

- F secure Inc.

Global slapper worm information center, https://www.f-secure.com/slapper/

- 17

- C. Shannon and D. Moore.

The caida dataset on the witty worm, March 19-24 2004.

https://www.caida.org/passive/witty/. - 18

- C. Shannon and D. Moore.

The spread of the Witty worm.

IEEE Security and Privacy, 2(4):46-50, August 2004. - 19

- Eugene Spafford.

The Internet worm program: An analysis. purdue technical report csd-tr-823, 1988. - 20

- Stuart Staniford and Vern Paxson and Nicholas Weaver.

How to 0wn the Internet in Your Spare Time.

In Proceedings of the 11th USENIX Security Symposium. USENIX, August 2002. - 21

- Nicholas Weaver and Dan Ellis.

Reflections on Witty: Analyzing the attacker.

;login:, pages 34-37, June 2004. - 22

- V. Yegneswaran, P. Barford, and D. Plonka.

On the design and utility of Internet sinks for network abuse monitoring.

In Proc. of Symposium on Recent Advances in Intrusion Detection, September 2004. - 23

- Cliff Changchun Zou, Weibo Gong, and Don Towsley.

Code Red Worm Propagation Modeling and Analysis.

In Proceedings of the ACM CCS 2002 conference, November 2002.

|

Last changed: 22 Sept. 2005 aw |

![\includegraphics[scale=0.6]{plots/growthrate.eps}](img43.png)

![\includegraphics[scale=0.7]{plots/fullhr.eps}](img55.png)

![\includegraphics[scale=0.67]{plots/compare.eps}](img59.png)

![\includegraphics[scale=0.7]{plots/caidaextra2.eps}](img61.png)

![\includegraphics[scale=0.67]{plots/compcaidaextra.eps}](img62.png)

![\includegraphics[scale=0.67]{plots/pktrate2.eps}](img66.png)

![\includegraphics[scale=0.67]{plots/compextraboth.eps}](img68.png)

![\includegraphics[height=1.25in,width=3.0in]{plots/uptime784.eps}](img91.png)

![\includegraphics[scale=0.67]{plots/infect2.eps}](img97.png)

![\includegraphics[scale=0.67]{plots/diff.eps}](img98.png)