Our experiments thus far have assumed a lightly loaded Gigabit

Ethernet LAN. The observed round-trip time on our LAN is very small

(under 1ms). In practice, the latency between the client and the server

can vary from a few milliseconds to tens of milliseconds

depending on the distance between the client and the server.

Consequently, in this section, we vary the network

latency between the two machines and study its impact on performance.

We use the NISTNet package to introduce a latency between the client

and the server. NISTNet introduces a pre-configured delay for each

outgoing and incoming packet so as to simulate wide-area conditions.

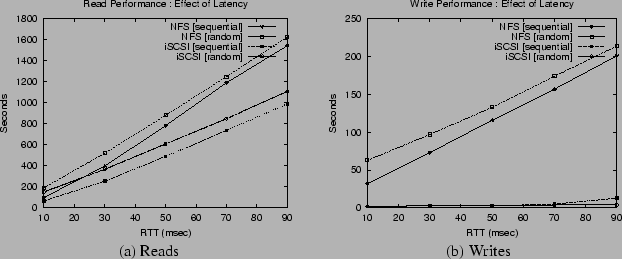

We vary the round-trip network latency from 10ms to 90ms and study its

impact on sequential and random reads and writes. The experimental

setup is identical to that outlined in the previous section. Figure

6 plots the completion times for reading and writing a

128 MB file for NFS and iSCSI. As shown in Figure

6(a), the completion time increases with the network

latency for both systems. However, the increase is greater in NFS

than in iSCSI: the two systems are comparable at low latencies

(around 10ms), but NFS performance degrades faster than iSCSI at

higher latencies. Even though NFS v3 runs over TCP, an Ethereal trace

reveals an increasing number of RPC retransmissions at higher latencies.

The Linux NFS client appears to time out more frequently at higher latencies

and reissues the RPC request even though the data is in transit, which in turn degrades performance.

An implementation of NFS that exploits the error recovery at the TCP layer

will not have this drawback.
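
The retransmission effect can be illustrated with a toy model. The sketch below is not the Linux NFS client's actual logic: the timeout value, the back-off factor, and the function name `spurious_retransmissions` are hypothetical, chosen only to show how an RPC timer that fires before the reply arrives causes the request to be reissued while the original reply is still in transit.

```python
def spurious_retransmissions(rtt_ms, service_ms, timeout_ms, backoff=2.0):
    """Count how often a client reissues one RPC before its reply arrives.

    Illustrative assumptions (not taken from any real NFS client):
    - the reply to the first request arrives rtt_ms + service_ms after it is sent;
    - the client retransmits each time its timer expires before that moment;
    - every retransmission multiplies the timer by `backoff`.
    """
    if timeout_ms <= 0 or backoff < 1.0:
        raise ValueError("timeout_ms must be positive and backoff must be >= 1")

    reply_at = rtt_ms + service_ms   # when the original reply reaches the client
    elapsed = 0.0                    # time spent waiting so far
    timer = float(timeout_ms)        # current retransmission timer
    retransmissions = 0

    # Each timer expiry that precedes the reply triggers a redundant request.
    while elapsed + timer < reply_at:
        elapsed += timer
        timer *= backoff
        retransmissions += 1
    return retransmissions


if __name__ == "__main__":
    # Hypothetical 35 ms initial timeout and 5 ms server time.
    for rtt in range(10, 100, 20):
        print(rtt, spurious_retransmissions(rtt, service_ms=5, timeout_ms=35))
```

Under these assumed parameters the count is zero at short round-trip times and becomes non-zero once the round-trip time plus the service time exceeds the timer. This matches the qualitative trend seen in the trace, not its measured values.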

In the case of writes, the iSCSI completion times are not affected by the network latency because iSCSI writes are asynchronous. NFS write performance, by contrast, is limited by the pseudo-synchronous nature of writes in the Linux NFS implementation (see Section 4.5), so the NFS completion time increases with the latency.
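
A back-of-the-envelope model makes this contrast concrete. The sketch below is an illustration under stated assumptions, not a reproduction of our measurements. The request size, the window of outstanding writes, and the link bandwidth are hypothetical parameters. The asynchronous case simply assumes that completion time is governed by transfer time alone, and the windowed case assumes one round trip of stalling per full window of requests.

```python
import math


def async_write_time(file_bytes, bandwidth_bytes_per_s):
    """Completion time when writes never wait for a round trip (assumed model)."""
    return file_bytes / bandwidth_bytes_per_s


def windowed_write_time(file_bytes, request_bytes, window, rtt_s,
                        bandwidth_bytes_per_s):
    """Completion time when at most `window` writes may be outstanding.

    Assumed model: the writer stalls for one round trip after each full
    window of requests, in addition to the raw transfer time.
    """
    requests = math.ceil(file_bytes / request_bytes)
    rounds = math.ceil(requests / window)
    return rounds * rtt_s + file_bytes / bandwidth_bytes_per_s


if __name__ == "__main__":
    size = 128 * 2**20  # 128 MB file, as in the experiment
    for rtt_ms in (10, 50, 90):
        t = windowed_write_time(size, request_bytes=32 * 1024, window=16,
                                rtt_s=rtt_ms / 1000.0,
                                bandwidth_bytes_per_s=125e6)
        print(rtt_ms, round(t, 2), round(async_write_time(size, 125e6), 2))
```

In this model the asynchronous time has no latency term at all, while the windowed time grows linearly with the round-trip time. That is the qualitative behavior described for iSCSI and NFS writes respectively.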