
To better understand application-level effects of the baseline costs associated with different isolation techniques and with kernel plugins in particular, we next turn our attention to macro-benchmarks. We compare and contrast the overheads and service effects of two proposed isolation techniques for user-specific kernel extensions: placing extensible services in virtual machines, and implementing extensions as kernel plugins.

Modern services need to provide customizability while maintaining high levels of performance. Generally these two imperatives are in conflict. For our particular experiments, we chose to look at a web server, as it is a relatively simple, typical, and popular service with many available implementations. A well-known approach to building high-performance web servers is to run the service daemon within the OS kernel. While this eliminates many inefficiencies inherent to user-space and increases the performance of the server substantially, it has the unfortunate effect of discouraging extensibility due to safety concerns when running within the kernel. We propose to use kernel plugins to rectify this problem.

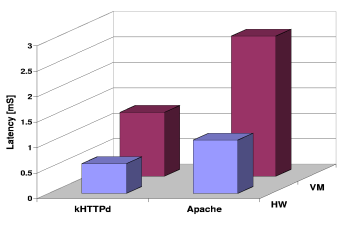

To quantify our assertions and to provide a better gauge for the expected performance of typical kernel- vs. user-space web servers, as well as of the different isolation techniques, we measured the server reply latency and reply throughput of popular web server implementations and report our findings in Figures 6, 7, and 8.

The web servers ran on the machine described previously, while the test load was provided by a more powerful Dell Workstation 340 (2.2 GHz Pentium 4, 512 KB L2 cache, 512 MB of RDRAM-400) over an otherwise quiescent 100 Mbps Ethernet network. Results for these three figures were measured in user-space at the client workstation and include jitter induced by interrupt processing on both ends. Therefore, these are a good indicator of subjective performance for a client application in this environment.
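As an illustration of what a user-space, client-side latency measurement can look like, the following is a minimal sketch and not the tool used for the figures. The function name `measure_reply_latency`, the choice of HTTP/1.0, and the decision to time the whole exchange (connection setup through the last byte of the reply) are all assumptions made for the example.

```python
import socket
import time


def measure_reply_latency(host, port, path="/", samples=100, timeout=5.0):
    """Return a list of reply latencies in seconds, one per request.

    Hypothetical helper: each sample covers connect, request, and the full
    reply, as observed from user space on the client.
    """
    # HTTP/1.0 without keep-alive: the server closes the connection after
    # the reply, so an empty recv() marks the end of the response.
    request = f"GET {path} HTTP/1.0\r\nHost: {host}\r\n\r\n".encode("ascii")
    latencies = []
    for _ in range(samples):
        start = time.perf_counter()
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(request)
            while sock.recv(65536):
                pass  # drain the reply until the server closes the socket
        latencies.append(time.perf_counter() - start)
    return latencies
```

Because the timestamps are taken in user space, each sample also contains scheduling and interrupt-processing jitter from both machines, which is the property the paragraph above points out.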

Figure 6 compares the server reply latency of kernel-space and user-space servers and also gives a measure of the cost of full virtualization (as measured on an industry-standard product, VMware 3.2.0). The figure shows that the latency of a typical kernel-space web server (kHTTPd) is roughly half that of a user-space server (Apache). It also shows that full virtualization increases service latency by a factor of 2 to 2.5. Thus, adding safe extensibility through virtual machine techniques likely cancels the performance benefits of employing kernel-based web servers: a latency that is first halved and then multiplied by 2 to 2.5 ends up at or above that of the user-space server.

Our proposed alternative, kernel plugins, compares favorably latency-wise to the baseline case and the VM solution, as shown in Figure 7. We measure an unmodified kernel web server (baseline), the same server extended with a null kernel plugin (invoked once per request from within kHTTPd), and another copy of the server isolated in a VMware virtual machine. Kernel plugins add minute latency overhead to the service being extended. Figure 7 shows an average plugin overhead of 8 µs, though that value is inflated due to the well-known significant variability that interrupt processing induces in Linux [14]. A comparison between the averages of the top 10% timing samples provides a less variable and more accurate estimate of the real plugin overhead (less than 1 µs), consistent with the jitter-free results of Figure 5. The small cost is striking compared to the larger latency overhead imposed by the VM approach. The benefit obtained by using plugins over virtual machines comes from achieving extensibility without complete virtualization: only the isolation properties of virtualization are really needed. Since kernel plugins are designed to provide exactly that, they are able to avoid unnecessary overhead.
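The difference between the two overhead estimates can be sketched as follows. This is an illustrative reconstruction under stated assumptions, not the analysis script behind Figure 7: the helper names are hypothetical, and "top 10%" is assumed to mean the fastest tenth of the samples, that is, those least disturbed by interrupt processing.

```python
from statistics import mean


def fastest_fraction_mean(samples, fraction=0.10):
    """Mean of the fastest `fraction` of latency samples (lowest values).

    Assumption: 'top 10%' is read as the 10% of samples with the lowest
    latency. At least one sample is always kept.
    """
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    keep = max(1, int(len(ordered) * fraction))
    return mean(ordered[:keep])


def plugin_overhead(baseline, with_plugin, fraction=0.10):
    """Return (overhead from plain means, overhead from fastest-fraction means).

    Both inputs are latency samples in the same unit; the results are in
    that unit as well.
    """
    raw = mean(with_plugin) - mean(baseline)
    trimmed = (fastest_fraction_mean(with_plugin, fraction)
               - fastest_fraction_mean(baseline, fraction))
    return raw, trimmed
```

A handful of slow outliers in either sample set moves the plain means noticeably but leaves the fastest-fraction means almost unchanged, which is why the second estimate is the less variable of the two.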

The last macro-benchmark we consider explores the server's throughput degradation as a function of the isolation technique. We define throughput as the relation between the clients' request rate and a server's sustained reply rate. We measure throughput utilizing the well-known httperf benchmark [17].
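To make this definition concrete, the sketch below takes (request rate, reply rate) pairs, such as those a load generator reports for a sweep of request rates, and finds the highest request rate the server still sustains. The function name `saturation_point` and the 5% tolerance are assumptions chosen for illustration; they are not part of httperf or of the measurement setup described here.

```python
def saturation_point(points, tolerance=0.05):
    """Return the highest request rate the server keeps up with, or None.

    `points` is an iterable of (request_rate, reply_rate) pairs in the same
    unit (for example, requests per second). A rate counts as sustained when
    the reply rate is within `tolerance` of the request rate. The scan stops
    at the first rate that is not sustained.
    """
    last_sustained = None
    for request_rate, reply_rate in sorted(points):
        if reply_rate < (1.0 - tolerance) * request_rate:
            break
        last_sustained = request_rate
    return last_sustained
```

Applying the same function to the curves of two server configurations gives a single number per configuration, which makes statements such as "saturates at about half the throughput" easy to check.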

Figure 8 shows a family of graphs describing the throughput performance of an unmodified kernel-based web server (baseline), a server modified with a null plugin, and an unmodified server running in a virtual machine. While kernel plugins leave the server's ability to handle high throughput almost untouched, the virtual machine-based server saturates at a little more than half the throughput. Consistency and predictability inside a virtual machine are also severely degraded, whereas the kernel plugin approach is remarkably stable and consistent. We again attribute the disparity to the many aspects of full virtualization that are unrelated to isolation and therefore unnecessary here.

We note that the inefficiencies inherent in virtualization schemes like VMware's are known and that there are promising alternatives like Xen [3]. However, Xen requires a host OS kernel to be ported to its specially defined abstract VM model, whereas plugins can be used with existing operating system kernels. The Xen approach, therefore, is complementary to our research.