Plugin Execution

Two metrics important to any server

application are latency and throughput. We measure the impact that

placing code in a kernel plugin has on these metrics. We define latency overhead as the amount of time passing between the first

instruction of a kernel plugin invocation and the execution of the

plugin's first instruction. Latency overhead thus defines the latency

cost of utilizing the kernel plugin facility. Similarly, we define

throughput overhead to be the execution time of a null

plugin. This represents the pure cost of the plugin abstraction. Since

plugins are executed directly on the underlying hardware, these

metrics are the only runtime costs incurred by kernel plugins.

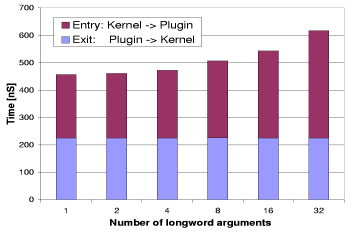

The micro-benchmark data in Figure 5 show the execution time of a null kernel plugin (in nanoseconds) versus the number of long word arguments passed to it. Execution time is composed of two parts: entry into the plugin and exit from the plugin. The first part characterizes the latency overhead incurred by plugin use, whereas the second part represents the remaining cleanup overhead at exit time. The graph shows that the entry latency is weakly linearly dependent on the number of plugin parameters, whereas the exit overhead is constant. Note that the measurements in Figure 5 are for plugin invocation from the kernel. Function invocation from within ring-1 is almost identical to a user-space function call, meaning that it is essentially "free".
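The decomposition described above can be written compactly. The symbols below ($T_{\text{null}}$, $T_{\text{entry}}$, $T_{\text{exit}}$, $a$, $b$, $c$, $n$) are notation introduced here for illustration only; no particular values for the constants are implied.

$$
T_{\text{null}}(n) = T_{\text{entry}}(n) + T_{\text{exit}}, \qquad T_{\text{entry}}(n) \approx a + b\,n, \qquad T_{\text{exit}} \approx c
$$

Here $n$ is the number of long word arguments, $T_{\text{entry}}(n)$ corresponds to the latency overhead, and $T_{\text{null}}(n)$ to the throughput overhead; a small $b$ reflects the weak linear dependence on $n$.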

Results are obtained by timing 2001 runs, dropping the first one to avoid cold CPU cache effects, and averaging the rest. Furthermore, interrupts are disabled during each individual benchmark run to shield measurements from the high timing variability that interrupts induce under Linux v2.4 [14]. The observed standard deviation for this plot is less than 1% of the mean, implying very high confidence in the data and predictability of the mechanism's performance.
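The averaging procedure can be illustrated with a minimal user-space sketch in Python. It is not the in-kernel harness used for the measurements: it cannot disable interrupts, the timer (`time.perf_counter_ns`) is an assumption, and the names `time_runs`, `summarize`, and `null_plugin` are hypothetical. It only shows the "time 2001 runs, drop the first, average the rest" computation and the standard deviation relative to the mean.

```python
import statistics
import time


def time_runs(fn, runs=2001):
    """Time `runs` back-to-back calls of fn; return per-call durations in ns."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter_ns()
        fn()
        samples.append(time.perf_counter_ns() - start)
    return samples


def summarize(samples, warmup=1):
    """Drop the first `warmup` samples (cold-cache runs), then return
    (mean, standard deviation, standard deviation as a fraction of the mean)."""
    kept = samples[warmup:]
    mean = statistics.fmean(kept)
    stdev = statistics.pstdev(kept)
    return mean, stdev, (stdev / mean if mean else 0.0)


def null_plugin():
    """Hypothetical stand-in for a null plugin: does no work."""
    return None


if __name__ == "__main__":
    mean, stdev, rel = summarize(time_runs(null_plugin))
    print(f"mean={mean:.1f} ns  stdev={stdev:.1f} ns  ({rel:.1%} of mean)")
```

In user space the relative deviation will typically be far larger than the sub-1% figure reported above, which is one reason the measurements are taken in the kernel with interrupts disabled.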

The main result is that for a reasonable number of parameters, the

baseline cost of kernel plugins is between 0.45 ![]() and 0.62 ![]()

for this hardware. Thus, our implementation's performance is on par

with or better than that of similar schemes [8] (after adjusting for

our faster hardware) despite major differences in kernel architecture

(2.0.34 vs. 2.4.19) and implementation methodology.

Plugin Creation/Deletion

Since kernel plugins are intended to

be easily and frequently updated, it is important to characterize

their creation and deletion costs.

Our dynamic code generator uses subroutine-based techniques that do

not require invocation of an external compiler. It is fast, with time

complexity roughly linear in the source code size. For

the sample image-transcoding plugin used in our experiments the costs

for code generation, linking, and unlinking of the plugin are 4 ![](), 3.1 ![](), and 1.6 ![](), respectively.