|

IMC '05 Paper

[IMC '05 Technical Program]

Joint Data Streaming and Sampling Techniques for Detection of Super Sources and Destinations

Qi (George) Zhao Abhishek Kumar Jun (Jim) Xu

College of Computing, Georgia Institute of Technology

Abstract:

Detecting the sources or destinations that have communicated with a

large number of distinct destinations or sources

during a small time interval

is an important problem in network measurement and security.

Previous detection approaches

are not able to deliver the desired accuracy

at high link speeds (10 to 40 Gbps). In

this work, we propose two novel algorithms that provide

accurate and efficient solutions to this problem. Their designs are

based on the insight that sampling and data streaming are often suitable

for capturing different and complementary regions of the information

spectrum, and a close collaboration between them is an excellent way to

recover the complete information. Our first solution builds on the

standard hash-based flow sampling algorithm.

Its main innovation is that the sampled traffic

is further filtered by a data streaming module

which allows for much higher sampling rate and hence much

higher accuracy.

Our second

solution is more sophisticated but offers higher accuracy. It

combines the power of data streaming in efficiently

estimating

quantities

associated with a given identity, and the power of sampling in

collecting a list of candidate identities. The performance of both

solutions are evaluated using both mathematical analysis and

trace-driven experiments on real-world Internet traffic.

1 Introduction

Measurement of flow-level statistics, such as total active flow count,

sizes and identities of large flows, per-flow traffic, and flow size

distribution are essential for network management and

security. Measuring such information on high-speed links (e.g., 10

Gbps) is challenging since the standard method of maintaining per-flow

state (e.g., using a hash table) for tracking various flow statistics

is prohibitively expensive. More specifically, at very high link

speeds, updates to the per-flow state for each and every incoming

packet would be feasible only through the use of very fast and

expensive memory (typically SRAM), while the size of such state is

very large [7] and hence too expensive to be held in SRAM.

Recently, the techniques for approximately measuring such statistics using

a much smaller state, based on a general methodology called

network data streaming, have been used to solve some of the

aforementioned problems

[5,6,12,11,22]. The main idea in

network data streaming is to use a small and fast memory to process

each and every incoming packet in real-time. Since it is impractical

to store all information in this small memory, the principle of data

streaming is to maintain only the information most pertinent to the

statistic to be measured.

In this work, we design data streaming algorithms that help detect super

sources and destinations.

A super source![[*]](footnote.png) is

a source that has a large fan-out (e.g., larger than a

predefined threshold) defined as the number of distinct destinations

it communicates with during a small time interval.

The concepts of super destination and fan-in can be defined

symmetrically. Our schemes in fact solve a strictly harder problem

than making a binary decision of whether a source/destination is a

super source/destination or not: They actually provide accurate

estimates of the fan-outs/fan-ins of potential super

sources/destinations.

In this work a source can be any combination of ``source'' fields

from a packet header such as source IP address, source port

number, or their combination, depending on target applications.

Similarly, a destination can be any combination of the

``destination'' fields from a packet header. We refer to the

source-destination pair of a packet as the flow label and use

these two terms interchangeably in the rest of this paper. is

a source that has a large fan-out (e.g., larger than a

predefined threshold) defined as the number of distinct destinations

it communicates with during a small time interval.

The concepts of super destination and fan-in can be defined

symmetrically. Our schemes in fact solve a strictly harder problem

than making a binary decision of whether a source/destination is a

super source/destination or not: They actually provide accurate

estimates of the fan-outs/fan-ins of potential super

sources/destinations.

In this work a source can be any combination of ``source'' fields

from a packet header such as source IP address, source port

number, or their combination, depending on target applications.

Similarly, a destination can be any combination of the

``destination'' fields from a packet header. We refer to the

source-destination pair of a packet as the flow label and use

these two terms interchangeably in the rest of this paper.

The problem of detecting super sources and destinations arises in many

applications of network monitoring and security. For example,

port-scans probe for the existence of vulnerable services

across the Internet by trying to connect to many different pairs of

destination IP address and port number. This is clearly a type of super source under our definition.

Similarly, in a DDoS (Distributed Denial of Service) attack, a large

number of zombie hosts flood packets to a destination. Thus the

problem of detecting the launch of DDoS attacks can be viewed as

detecting a super destination. This problem also arises in detecting

worm propagation and estimating their spreading rates. An infected host

often propagates the worm to a large number of destinations, and can

be viewed as a super source. Knowing its fan-out allows us to

estimate the rate at which the worm may spread. Another possible

instance lies in peer-to-peer and content distribution networks, where

a few servers or peers might attract a larger number of requests (for

content) than they can handle while most of others in the network are

relatively idle. Being able to detect such ``hot spots'' (a type of

super destination) in real-time helps balance the workload and improve

the overall performance of the network. A number of other variations

of the above applications, such as detecting flash crowds

[9] and reflector attacks [15], also motivate

this problem.

Techniques proposed in the literature for solving this problem

typically maintain per-flow state, and cannot scale to high link

speeds of 10 or 40 Gbps. For example, to detect port-scans, the

widely deployed Intrusion Detection System (IDS) Snort

[19] maintains a hash table of the distinct

source-destination pairs to count the destinations each source talks

to. A similar technique is used in FlowScan [17] for

detecting DDoS attacks.

The inefficiency in such an approach stems from the fact that most of

the source-destination pairs are not a part of port scans or DDoS

attacks. Yet, they result in a large number of source-destination

pairs that can be accommodated only in DRAM, which cannot support the

high access rates required for updates at line speed.

More recent work [20] has offered solutions based on

hash-based flow sampling technique. However, its accuracy is limited

due to the typically low sampling rate imposed by some inherent

limitations of the hash-based flow sampling technique discussed later

in Section 3. A more comprehensive survey of related

work is provided in Section 7.

In this paper we propose two efficient and accurate data streaming

algorithms for detecting the set of super sources by estimating the

fan-outs of the collected sources.

These algorithms can be easily adapted symmetrically for detecting the

super destinations. Their designs are based on the insight that

(flow) sampling and data streaming are often suitable for capturing

different and complementary regions of the information spectrum, and a

close collaboration between them is an excellent way to recover the

complete information. This insight leads to two novel methodologies

of combing the power of streaming and sampling, namely, ``filtering

after sampling'' and ``separation of counting and identity

gathering''. Our two solutions are built upon these two methodologies

respectively.

Our first solution, referred to as the simple scheme, is based

on the methodology of ``filtering after sampling''. It enhances the

traditional hash-based flow sampling algorithm to approximately count

the fan-outs of the sampled sources. As suggested by its name, the

design of this solution is very simple. Its main innovation is that

the sampled traffic is further filtered by a simple data streaming

module (a bit array), which guarantees that at most one packet from

each flow is processed. This allows for much higher sampling rate

(hence much higher accuracy) than achievable with traditional

hash-based flow sampling. Our second solution, referred to as the

advanced scheme, is more sophisticated than the simple scheme

but offers even higher accuracy. Its design is based on the

methodology of ``separation of counting and identity gathering'',

which combines the power of streaming in efficiently estimating

quantities (e.g., fan-out) associated with a given identity, and the

power of sampling in generating a list of candidate identities (e.g.,

sources). Through rigorous theoretical analysis and extensive

trace-driven experiments on real-world Internet traffic, we

demonstrate these two algorithms produce very accurate fan-out

estimations.

We also extend our advanced scheme for detecting the sources that have

large outstanding fan-outs, defined as the number of distinct

destinations it has contacted but has not obtained acknowledgments

(TCP ACK) from.

This extension has several important applications. One example is that

in port-scans, the probing packets, which target a large number of

destinations, will receive acknowledgments from only a small

percentage of them. Another example is distributed TCP SYN attacks.

In this case, the victim's TCP acknowledgments (SYN/ACK packets) to a

large number of hosts for completing the TCP handshake (the second

step) are not acknowledged. Our evaluation on bidirectional traffic

collected simultaneously on a link shows that our solution estimates

outstanding fanout with high accuracy.

The rest of this paper is organized as follows. In the next section,

we present our design methodologies and provide an overview of the

proposed solutions. Sections 3 and 4

describe the design of the two schemes in detail respectively and

provide a theoretical analysis of their complexity and

accuracy. Section 5 presents an extension of our scheme

for estimating outstanding fan-outs. We evaluate our solutions in

Section 6 using packet header traces of real-world

Internet traffic. We discuss the related work in Section 7

before concluding in Section 8.

2 Overview of our schemes

As we mentioned above, accurate measurement and monitoring in high

speed networks are challenging because the traditional per-flow schemes

cannot scale to high link speeds. As a stop-gap solution, packet

sampling has been used to keep up with high link speeds. In packet

sampling, a small fraction  of traffic is sampled and

processed. Since the sampled packets constitute a much lower volume

than the original traffic, a per-flow table stored in relatively

inexpensive DRAM can handle all the updates triggered by the sampled

packets in real-time [14]. Thus we can typically obtain

complete information contained in the sampled traffic. The statistics

of the original traffic are then inferred from that of the sampled

traffic by ``inverting'' the sampling process, i.e., by compensating

for the effects of sampling. However the accuracy of such

sampling-based estimations is usually low, because the error is scaled

by of traffic is sampled and

processed. Since the sampled packets constitute a much lower volume

than the original traffic, a per-flow table stored in relatively

inexpensive DRAM can handle all the updates triggered by the sampled

packets in real-time [14]. Thus we can typically obtain

complete information contained in the sampled traffic. The statistics

of the original traffic are then inferred from that of the sampled

traffic by ``inverting'' the sampling process, i.e., by compensating

for the effects of sampling. However the accuracy of such

sampling-based estimations is usually low, because the error is scaled

by  and and  is typically small (e.g., 1/500) to make the sampling

operation computationally

affordable [4,11,8]. In other words, although

the sampling-based approach allows for 100% accurate digesting of

information on sampled traffic, a large amount of information may be

lost during the sampling process. is typically small (e.g., 1/500) to make the sampling

operation computationally

affordable [4,11,8]. In other words, although

the sampling-based approach allows for 100% accurate digesting of

information on sampled traffic, a large amount of information may be

lost during the sampling process.

Network data streaming![[*]](footnote.png) has begun to be recognized as a better alternative to

sampling for measurement and monitoring of high-speed

links [10].

Contrary to sampling, a network data streaming algorithm will process

each and every packet passing through a high-speed link to glean

the most important information for answering a specific type of query,

using a small yet well-organized data structure. This data structure

is small enough to be fit into fast (yet expensive) SRAM, allowing it

to keep up with high link speeds. The challenge is to design this

data structure in such a way that it encodes the information we need,

for answering the query, in a succinct manner. Data streaming

algorithms, if available, typically offer much more accurate

estimations than sampling for measuring network flow statistics. This

is because, intuitively the sampling throws away a large percentage of

information up front, while data streaming, which processes each and

every packet, is often able to retain most of the most important

information inside a small SRAM module. has begun to be recognized as a better alternative to

sampling for measurement and monitoring of high-speed

links [10].

Contrary to sampling, a network data streaming algorithm will process

each and every packet passing through a high-speed link to glean

the most important information for answering a specific type of query,

using a small yet well-organized data structure. This data structure

is small enough to be fit into fast (yet expensive) SRAM, allowing it

to keep up with high link speeds. The challenge is to design this

data structure in such a way that it encodes the information we need,

for answering the query, in a succinct manner. Data streaming

algorithms, if available, typically offer much more accurate

estimations than sampling for measuring network flow statistics. This

is because, intuitively the sampling throws away a large percentage of

information up front, while data streaming, which processes each and

every packet, is often able to retain most of the most important

information inside a small SRAM module.

In our context of detecting super sources, however, both sampling and

data streaming are valuable for capturing different and complementary

regions of the information spectrum, and a close collaboration between

them is used to recover the complete information.

There are two parts of information that we would like to

know in finding super sources: one is the identities (e.g., IP

addresses) of the sources that may be super sources. The other is the

fan-out associated with each source identity.

We observe that data

streaming algorithms can encode the fan-outs of various

sources into a very succinct data structure. Such a data structure,

however, typically only provides a lookup interface. In other words,

if we obtain a source identity through other means, we are able to

look up the data structure to obtain its (approximate) fan-out, but

the data structure itself cannot produce any

identities and is undecodable without such identities being supplied

externally. On the other hand, sampling is an excellent way of

gathering source identities though it is not a great counting device

as we described earlier.

The above observation leads to one of the two aforementioned design

methodologies, i.e., separating identity gathering and counting.

The idea is to use a streaming data structure as a counting device and

use sampling

to gather the identities of potential super sources. Then we look up

the streaming data structure using the gathered identities to obtain the

corresponding counts. This methodology is used in our advanced scheme

that employs a 2-dimensional bit array as the counting device, in

parallel with an identity gathering module that adopts an enhanced

form of sampling. We show that our

sampling module has vanishingly small probability of missing the

identity of any actual super sources and the estimation

module produces highly accurate estimates of the fan-out of the potential

super sources. This scheme is especially suitable for very

high link speeds of 10 Gbps and above. We describe this scheme in

Section 4.

We also explore another way of combing sampling and streaming,

i.e., ``filtering after sampling''. Its idea is to employ a data

streaming module between the sampling operation and the final processing

procedure to efficiently encode whether a flow has been seen

before.

A careful design of this module guarantees that at most one packet in

each flow needs to be processed. This allows us to achieve

much higher sampling rate and hence much higher accuracy than the

traditional flow sampling scheme.

This solution works very well for relatively lower link speeds

(e.g., 10 Gbps and below).

We describe this scheme in detail in Section 3.

3 The simple scheme

In this section we present a relatively simple scheme for detecting

super sources. It builds upon the traditional hash-based flow sampling

technique but can achieve a much higher sampling rate, and hence more

accurate estimation. We begin with a discussion of some limitations of

the traditional hash-based sampling approach, and then describe our

solution that alleviates these limitations. We also present an

analysis of the complexity and accuracy of the scheme.

1 Limitations of traditional hash-based flow sampling

There are two generic sampling approaches for network measurement:

packet sampling and flow sampling. In the former approach, each packet

is sampled independently with a certain probability, while in the

latter, the sampling decision is made at the granularity of flows

(i.e., all packets belonging to sampled flows are sampled). In the

following, we only consider flow sampling since packet sampling is not

suitable for our context of detecting super sources.

![[*]](footnote.png)

A traditional flow sampling algorithm that estimates the fan-outs of

sources works

as follows. The algorithm randomly samples a certain percentage (say

) of source-destination pairs using a hashing technique (described

next). The fan-out of each source in the sampled pairs is counted and

then scaled by ) of source-destination pairs using a hashing technique (described

next). The fan-out of each source in the sampled pairs is counted and

then scaled by  to obtain an estimate of the fan-out of the

source in the original traffic (i.e., before sampling). This counting

process is typically performed using a hash table that

stores the fan-out values (after sampling) of all sources seen

in the sampled traffic so far, and a newly sampled flow will

increment the fan-out counter of the corresponding hash node (or

trigger the creation of a new node). Since the estimation error is

also scaled by to obtain an estimate of the fan-out of the

source in the original traffic (i.e., before sampling). This counting

process is typically performed using a hash table that

stores the fan-out values (after sampling) of all sources seen

in the sampled traffic so far, and a newly sampled flow will

increment the fan-out counter of the corresponding hash node (or

trigger the creation of a new node). Since the estimation error is

also scaled by  , it is desirable to make the sampling rate , it is desirable to make the sampling rate  as

high as possible. However, we will show that, at high link speeds,

the traditional hash-based flow sampling approach may prevent us from

achieving high sampling rate needed for accurate estimation. as

high as possible. However, we will show that, at high link speeds,

the traditional hash-based flow sampling approach may prevent us from

achieving high sampling rate needed for accurate estimation.

Flow sampling is commonly implemented using a simple hashing technique

[3] as follows. First a hash function  that maps

a flow label to a value uniformly distributed in that maps

a flow label to a value uniformly distributed in  is fixed.

When a packet arrives, its flow label is hashed by is fixed.

When a packet arrives, its flow label is hashed by  . Given a

sampling probability . Given a

sampling probability  , the flow is sampled if and only if the

hashing result is no more than , the flow is sampled if and only if the

hashing result is no more than  . Recall that the purpose of flow

sampling is to reduce the amount of traffic that needs to be processed

by the aforementioned hash table which performs the counting.

Clearly, it is desirable that a hash table that runs slightly faster

than . Recall that the purpose of flow

sampling is to reduce the amount of traffic that needs to be processed

by the aforementioned hash table which performs the counting.

Clearly, it is desirable that a hash table that runs slightly faster

than  times the link speed, can keep up with the incoming rate of

the sampled traffic (with rate times the link speed, can keep up with the incoming rate of

the sampled traffic (with rate  ). For example, we would like a

hash table (in DRAM) that is able to process a packet in ). For example, we would like a

hash table (in DRAM) that is able to process a packet in  to

handle all traffic sampled from a link with 10 million packets per

second (i.e., one packet arrival per to

handle all traffic sampled from a link with 10 million packets per

second (i.e., one packet arrival per  on the average) with

slightly less than 25% sampling rate. Unfortunately, we cannot

achieve this goal with the current hash-based flow sampling approach

for the following reason. on the average) with

slightly less than 25% sampling rate. Unfortunately, we cannot

achieve this goal with the current hash-based flow sampling approach

for the following reason.

With hash-based flow sampling, if a flow is

sampled, all packets belonging to the flow need to be processed by the

hash table. Internet traffic is very bursty in the sense that the

packets belonging to a flow tend to arrive in bursts and do not

interleave well with packets from other flows and is also known to

exhibit the following characteristic [5]: a small

number of elephant flows contain most of the overall traffic while the

vast majority of the flows are small. If a few elephant flows are

sampled, their packets could generate a long burst of sampled traffic

that has much higher rate than that can be handled by the hash

table![[*]](footnote.png) . Therefore,

with hash-based flow sampling, the sampling rate . Therefore,

with hash-based flow sampling, the sampling rate  has to be much

smaller than the ratio between the operating speed of the hash table

and the arrival rate of traffic, thus leading to large estimation

errors as discussed before.

In the following subsection, we present an efficient yet simple

solution to this problem, allowing the sampling rate to reach or even

well exceed this ratio. has to be much

smaller than the ratio between the operating speed of the hash table

and the arrival rate of traffic, thus leading to large estimation

errors as discussed before.

In the following subsection, we present an efficient yet simple

solution to this problem, allowing the sampling rate to reach or even

well exceed this ratio.

In [20] the authors propose a

one-level filtering algorithm which uses the

hash-based flow sampling approach described above, in conjunction

with a hash table for counting the fan-out values. It does

not specify whether DRAM or SRAM will be used to implement the hash

table. If DRAM were used, it will not be able to achieve a high

sampling rate as discussed before. If SRAM were used, the memory

cost is expected to be prohibitive when the sampling rate is high.

This algorithm appears to be effective and

accurate for monitoring lower link speeds, but cannot deliver a high

estimation accuracy when operating at high link speeds such as 10Gbps (the

target link speeds are not mentioned in

[20]).

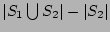

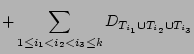

Figure 1:

Traditional flow sampling vs. filtering after sampling

|

2 Our scheme

We design a filtering technique that completely

solves the aforementioned problem. It allows the sampling rate to be

very close to the ratio between the hash table speed and the link

speed in the worst-case and well exceed the ratio otherwise.

Its conceptual design is shown

in Figure 1. Compared with the traditional flow

sampling approach, our approach places a data streaming module between

the hash-based flow sampling module and the hash table (for counting).

This streaming module guarantees that at most one packet from each

sampled flow needs to be processed by the hash table. This will

completely smooth out the aforementioned traffic bursts in the

flow-sampled traffic, since such bursts are caused by highly bursty

arrivals from one or a small number of elephant flows and now only the

first packets of these flows may trigger updates to the hash table.

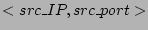

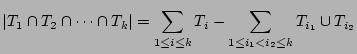

Figure 2:

Algorithm of updating data streaming module.

|

|

The data structure and algorithm of the data streaming module are shown

in Figure 2. Its basic idea is to use a bit

array  to remember whether a flow label,

a source-destination pair in our context, has been

processed by the hash table.

Let the size of the array be to remember whether a flow label,

a source-destination pair in our context, has been

processed by the hash table.

Let the size of the array be  bits. We fix a hash function bits. We fix a hash function  that maps

a flow label to a value uniformly distributed in that maps

a flow label to a value uniformly distributed in ![$ [1, w]$](img24.png) .

The array is initialized to all ``0''s at the beginning of a measurement epoch.

Upon the arrival of a packet .

The array is initialized to all ``0''s at the beginning of a measurement epoch.

Upon the arrival of a packet  , we hash its flow label ( , we hash its flow label (

) using ) using  and the result and the result  is treated

as an index into the array is treated

as an index into the array  . If . If ![$ G[r]$](img28.png) is equal to 1, our algorithm

concludes that a packet with this flow label has been processed

earlier, and takes no further action. Otherwise (i.e., is equal to 1, our algorithm

concludes that a packet with this flow label has been processed

earlier, and takes no further action. Otherwise (i.e., ![$ G[r]$](img28.png) is 0), this

flow label will be processed to update the corresponding counter is 0), this

flow label will be processed to update the corresponding counter

maintained in a hash table maintained in a hash table  .

Then .

Then ![$ G[r]$](img28.png) is set to 1 to remember the fact that a packet with this

flow has been seen and processed. This method clearly ensures that at

most one packet from each sampled flow is processed by is set to 1 to remember the fact that a packet with this

flow has been seen and processed. This method clearly ensures that at

most one packet from each sampled flow is processed by  . However,

due to hash collisions, some sampled flows may not be processed at all

since their corresponding bits in . However,

due to hash collisions, some sampled flows may not be processed at all

since their corresponding bits in  would be set by their colliding

counterparts. would be set by their colliding

counterparts.![[*]](footnote.png) The update procedure of the hash table The update procedure of the hash table

, described next, statistically compensates for such collisions. , described next, statistically compensates for such collisions.

Now we explain our statistical estimator, which is the computation

result of the hash table update procedure shown in

Figure 2 (line 11). Suppose the number of ``0''

entries in  (with size (with size  ) is ) is  right before a packet right before a packet  with

source with

source  arrives ( arrives (

in line 10). Assume in line 10). Assume  belongs

to a new flow and its flow label hashes to an index belongs

to a new flow and its flow label hashes to an index  . The value of . The value of

![$ G[r]$](img28.png) has value 0 with probability has value 0 with probability

. Therefore to obtain an

unbiased estimator . Therefore to obtain an

unbiased estimator

of the fan-out of the source of the fan-out of the source  on

the sampled traffic, we should statistically compensate for the fact

that with probability on

the sampled traffic, we should statistically compensate for the fact

that with probability

, the bit , the bit ![$ G[r]$](img28.png) has value 1 and has value 1 and

will miss the update to will miss the update to  due to aforementioned hash

collisions. It is intuitive that if we add due to aforementioned hash

collisions. It is intuitive that if we add

to to

, the resulting estimator is unbiased. To be more

precise, suppose in a measurement epoch, the hash table is updated by

altogether , the resulting estimator is unbiased. To be more

precise, suppose in a measurement epoch, the hash table is updated by

altogether  packets { packets { , ,

} from a source } from a source  ,

whose flow labels hash to locations ,

whose flow labels hash to locations  's where 's where

![$ G[r_j] = 0$](img42.png) , and

there are , and

there are  0's in 0's in  right before right before  arrives, respectively.

The output of the hash table arrives, respectively.

The output of the hash table  , which is an unbiased estimator of

the fan-out of , which is an unbiased estimator of

the fan-out of  on the sampled traffic, is on the sampled traffic, is

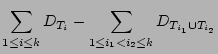

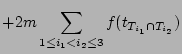

|

(1) |

We show in the following lemma that this is an unbiased estimator of  and its proof can be found in [24].

and its proof can be found in [24].

Lemma 1

is an unbiased estimator of is an unbiased estimator of  , i.e., , i.e.,

![$ E[\widehat{N_s}]=N_s$](img46.png) . .

Then an unbiased estimator of the fan-out  of source of source  is

given by scaling is

given by scaling

by by  , i.e., , i.e.,

where  is the sampling rate used in the flow sampling. We

show in the following theorem that the estimator is the sampling rate used in the flow sampling. We

show in the following theorem that the estimator

is

unbiased. Its proof uses Lemma 1 and is also provided in

[24]. is

unbiased. Its proof uses Lemma 1 and is also provided in

[24].

Theorem 1

is an unbiased estimator of is an unbiased estimator of  , i.e., , i.e.,

![$ E[\widehat{F_s}]=F_s$](img49.png) . .

We now demonstrate that this solution will completely smooth out the

aforementioned problem of traffic bursts

, and allow the sampling rate

to be close to the ratio between the hash table speed and the link

rate, the theoretical upper limit in the worst case. The worst case

for our scheme is that each flow contains only one packet (e.g., in

the case of DDoS attacks)![[*]](footnote.png) . Even in this

worst case, the update times to the hash table (viewed as a random

process) is very close to Poisson . Even in this

worst case, the update times to the hash table (viewed as a random

process) is very close to Poisson![[*]](footnote.png) (nonhomogeneous as the value of (nonhomogeneous as the value of  varies over

time) since each new flow is sampled independently. Due to the

``benign'' nature of this arrival process, by employing a tiny SRAM

buffer (e.g., holding 20 flow labels of 64 varies over

time) since each new flow is sampled independently. Due to the

``benign'' nature of this arrival process, by employing a tiny SRAM

buffer (e.g., holding 20 flow labels of 64  100 bits each), a hash

table that operates slightly faster than the average rate of this

process will only miss a negligible fraction of updates due to buffer

overflow. This process can be faithfully modeled as a Markov chain for

rigorous analysis. We elaborate it with a numerical example in

[24] and omit it here due to lack of space. 100 bits each), a hash

table that operates slightly faster than the average rate of this

process will only miss a negligible fraction of updates due to buffer

overflow. This process can be faithfully modeled as a Markov chain for

rigorous analysis. We elaborate it with a numerical example in

[24] and omit it here due to lack of space.

Notice that in Figure 2 the variable  , the number

of ``0'' entries in , the number

of ``0'' entries in  , decreases as more and more sampled flows are

processed. When more and more packets pass through the data streaming

module, , decreases as more and more sampled flows are

processed. When more and more packets pass through the data streaming

module,  becomes small and hence the probability for a new flow to be

recorded, becomes small and hence the probability for a new flow to be

recorded,

, decreases. Thereby the estimation error

will increase.

To maintain high accuracy, we specify a minimum value , decreases. Thereby the estimation error

will increase.

To maintain high accuracy, we specify a minimum value  for for

. Once the value of . Once the value of  drops below drops below  , the estimation

procedure will use a new array (set to all ``0''s initially) and start

a new measurement epoch (with an empty hash table). Two sets of

arrays and hash tables will be operated in an alternating manner so

that the measurement can be performed without interruption. The

parameter , the estimation

procedure will use a new array (set to all ``0''s initially) and start

a new measurement epoch (with an empty hash table). Two sets of

arrays and hash tables will be operated in an alternating manner so

that the measurement can be performed without interruption. The

parameter  is typically set to around is typically set to around  (i.e., ``half full''). (i.e., ``half full'').

3 Complexity analysis

The above scheme has extremely low storage (SRAM) complexity and

allows for very high streaming speed.

Memory (SRAM) consumption. Each processed flow

only consumes a little more than one bit in SRAM.

Thus a reasonable amount of SRAM can support very high link speeds.

For example, assuming the average flow size of 10 packets [11],

512KB SRAM is enough to support a measurement epoch which

is slightly longer than 2 seconds for a link with 10 million packets

per second even without performing any flow sampling. With 25% flow

sampling which is typically set for OC-192 links the SRAM requirement is

even brought down to 128KB.![[*]](footnote.png)

Streaming speed. Our algorithm in

Figure 2 has two branches to deal with the packets

arriving at the data streaming module. If the corresponding bit is ``1'',

the packets only require one hash function computation and one read to

SRAM. Otherwise they require one hash function computation, one read

and one write (at the same location) to SRAM and an update to the hash

table. Using efficient hardware implementation of hash function

[18] and  SRAM, all operations in the data streaming

module can be finished in 10's of SRAM, all operations in the data streaming

module can be finished in 10's of  in both cases. in both cases.

4 Accuracy analysis

Now we establish the following theorem to characterize the variance of

the estimator

in Formula 2. Its proof can be

found in [24] in Formula 2. Its proof can be

found in [24]

Remark: The above variance consists of two terms. The

first term corresponds to the variance of the error term in estimating

the sampled fan-out, scaled by

(to compensate for

sampling), and the second term corresponds to the variance of the

error term in inverting flow sampling process. Since these two errors

are approximately orthogonal to each other, their total variance is

the sum of their individual variances. (to compensate for

sampling), and the second term corresponds to the variance of the

error term in inverting flow sampling process. Since these two errors

are approximately orthogonal to each other, their total variance is

the sum of their individual variances.

4 The advanced scheme

In this section we propose the advanced scheme that is more sophisticated

than the simple scheme but can offer more accurate fan-out

estimations. It is based on

the aforementioned design methodology of separating identity gathering from

counting.

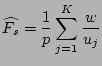

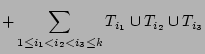

Its system model is shown in

Figure 3. There are two parallel modules processing

the incoming packet stream. The data streaming module encodes the

fan-out information for each and every source (arc 1 in

Figure 3) into a very compact data structure, and the

identity sampling module captures the candidate source identities which have

potential to be super sources (arc 2). These source identities are

then used by an estimation algorithm to look up the data

structure (arc 3) produced by the data streaming module (arc 4) to get their

corresponding fan-out

estimates. The design of these modules are described in the following

subsections.

Figure 3:

System model of the advanced scheme

|

Figure 4:

An instance of online streaming module

|

Figure 5:

Algorithm of online streaming module

|

|

The data structure used in the online streaming module is quite simple:

an

2-dimensional bit array 2-dimensional bit array  . The bits in . The bits in  are set

to all ``0''s at the beginning of each measurement epoch. The

algorithm of updating are set

to all ``0''s at the beginning of each measurement epoch. The

algorithm of updating  is shown in

Figure 5. Upon the arrival of a packet is shown in

Figure 5. Upon the arrival of a packet  , ,

is hashed by is hashed by  independent independent![[*]](footnote.png) hash functions hash functions

with range with range ![$ [1..n]$](img67.png) . The hashing results . The hashing results

, ,

, ..., , ...,

are viewed as

column indices into are viewed as

column indices into  . In our scheme, . In our scheme,  is set to 3, and the

rationale behind it will be discussed in Section 4.3. Then,

the flow label is set to 3, and the

rationale behind it will be discussed in Section 4.3. Then,

the flow label

is hashed by another independent

hash function is hashed by another independent

hash function  (with range (with range ![$ [1..m]$](img72.png) ) to generate a row index of ) to generate a row index of

. Finally, the . Finally, the  bits located at the intersections of the

selected row and columns are set to ``1''. An example is shown in

Figure 4, in which the three intersection bits (circled)

are set to ``1''s. When bits located at the intersections of the

selected row and columns are set to ``1''. An example is shown in

Figure 4, in which the three intersection bits (circled)

are set to ``1''s. When  is filled (by ``1'') to a threshold

percentage we terminate the current measurement epoch and start the

decoding process is filled (by ``1'') to a threshold

percentage we terminate the current measurement epoch and start the

decoding process![[*]](footnote.png) .

In Section 4.2, we show that the above process

produces a very compact and accurate (statistical) encoding of the

fan-outs of the sources, and present the corresponding decoding

algorithm. .

In Section 4.2, we show that the above process

produces a very compact and accurate (statistical) encoding of the

fan-outs of the sources, and present the corresponding decoding

algorithm.

Readers may feel that the above 2D bit array  is a variant of

Bloom filters [1]. This is not the case since although its

encoding algorithm is similar to that of a Bloom filter (flipping some

bits to ``1"s), we decode the 2D bit array for a different kind of

information (the fan-out count) than if we really use it as a Bloom

filter (check if a particular source-destination pair has

appeared). Our encoding algorithm is not well engineered for being used

as a Bloom filter. And our decoding algorithm, shown next, is also

fundamentally different from that of a Bloom filter. is a variant of

Bloom filters [1]. This is not the case since although its

encoding algorithm is similar to that of a Bloom filter (flipping some

bits to ``1"s), we decode the 2D bit array for a different kind of

information (the fan-out count) than if we really use it as a Bloom

filter (check if a particular source-destination pair has

appeared). Our encoding algorithm is not well engineered for being used

as a Bloom filter. And our decoding algorithm, shown next, is also

fundamentally different from that of a Bloom filter.

The proposed online streaming module has very low memory consumption

and high streaming speed:

Memory (SRAM) consumption. Our scheme is extremely

memory-efficient. Each source-destination pair (flow) will set 3 bits

in the bit vectors to ``1''s and consume a little more than 3 bits of SRAM

storage![[*]](footnote.png) . We will show that

the scheme provides very high accuracy using reasonable amount of

SRAM (e.g., 128KB) in Section 6. . We will show that

the scheme provides very high accuracy using reasonable amount of

SRAM (e.g., 128KB) in Section 6.

Streaming speed. Each update requires only  hash

function computations and hash

function computations and  writes to the SRAM.

We require that these four hash functions

are independent and amendable to hardware implementation.

They can be chosen from the writes to the SRAM.

We require that these four hash functions

are independent and amendable to hardware implementation.

They can be chosen from the  hash function family [2,18],

which, with hardware implementation, can produce a hash output within

a few nanoseconds. Then with commodity hash function family [2,18],

which, with hardware implementation, can produce a hash output within

a few nanoseconds. Then with commodity  SRAM our scheme would allow around

SRAM our scheme would allow around  million packets per second,

thereby supporting million packets per second,

thereby supporting  Gbps traffic stream assuming a conservative

average packet size of 1,000 bits. Gbps traffic stream assuming a conservative

average packet size of 1,000 bits.

2 Estimation module

For each source identity recorded by the sampling module (described

later), the estimation module decodes its approximate fan-out from the

2D bit array  , the output of the data streaming module.

In this section, we describe this decoding algorithm in detail. , the output of the data streaming module.

In this section, we describe this decoding algorithm in detail.

When we would like to know  , the fan-out of the source , the fan-out of the source  , ,  is hashed by the hash functions

is hashed by the hash functions  , ,  , ,  , which are

defined and used in the online streaming module, to obtain , which are

defined and used in the online streaming module, to obtain  column

indices. Let column

indices. Let  , ,  , 2, , 2,  , ,  , be the corresponding

columns (viewed as bit vectors). In the following, we derive, step by

step, an accurate and almost unbiased estimator of , be the corresponding

columns (viewed as bit vectors). In the following, we derive, step by

step, an accurate and almost unbiased estimator of  , as a

function of , as a

function of  , ,  , 2, , 2,  , ,  . .

Let the set of packets hashed into column  during the

corresponding measurement epoch be during the

corresponding measurement epoch be  and the number of bits in and the number of bits in

that are ``0''s be that are ``0''s be  . Note that the value . Note that the value  is a

part of our observation since we can obtain is a

part of our observation since we can obtain  from from  through simple counting, although the notation itself contains

through simple counting, although the notation itself contains  ,

the size of which we would like to estimate. Recall the size of the

column vector is ,

the size of which we would like to estimate. Recall the size of the

column vector is  . A fairly accurate estimator of . A fairly accurate estimator of  , the

number of packets of , the

number of packets of  , adapted from [21], is , adapted from [21], is

Note that  , the fan-out of the source , the fan-out of the source  , is equal to , is equal to

, if during the measurement epoch, no other

sources are hashed to the same , if during the measurement epoch, no other

sources are hashed to the same  columns columns

.

Otherwise .

Otherwise

is the sum of the

fan-outs of all (more than 1) the sources that are hashed into is the sum of the

fan-outs of all (more than 1) the sources that are hashed into

. We show in the next section, that the

probability with which the latter case happens is very small when . We show in the next section, that the

probability with which the latter case happens is very small when  .

We obtain the following estimator of .

We obtain the following estimator of  , which is in fact derived

as an estimator for , which is in fact derived

as an estimator for

. .

Here

, is defined as , is defined as

, where , where

denotes the number of ``0''s in the bit vector denotes the number of ``0''s in the bit vector

which is the result of hashing the set of packets which is the result of hashing the set of packets

into a single empty bit vector. The bit

vector into a single empty bit vector. The bit

vector

is computed as the bitwise-OR of is computed as the bitwise-OR of

,..., ,...,  . One can easily verify the correctness of this

computation with respect to the semantics of . One can easily verify the correctness of this

computation with respect to the semantics of

. .

We need to show that the RHS of Formula 4 is a fairly

good estimator of

. Note that . Note that

by the principle of inclusion and exclusion. Since

is a fairly good estimator of is a fairly good estimator of

according to [21], we obtain the RHS of

Formula 4 by replacing the terms according to [21], we obtain the RHS of

Formula 4 by replacing the terms

in Formula 5 by in Formula 5 by

, ,

. Note that it is not correct to directly use the

bitwise-AND of . Note that it is not correct to directly use the

bitwise-AND of

for estimating for estimating

using Formula 3, because the bit vector

corresponding to the result of hashing the set of packets using Formula 3, because the bit vector

corresponding to the result of hashing the set of packets

into an empty bit vector, is not equivalent to

the bitwise-AND of into an empty bit vector, is not equivalent to

the bitwise-AND of

. .

The estimator in Formula 4 generalizes the result

in [21] which is

developed for the special case  . We will show that our scheme only needs to use the special case of . We will show that our scheme only needs to use the special case of

, which is , which is

The computational complexity of estimating the fan-out of a source is

dominated by  bitwise-OR operations among bitwise-OR operations among  column vectors.

Such vectors can be encoded as one or more unsigned integers so that

the bit-parallelism can significantly reduce the execution time.

Since column vectors.

Such vectors can be encoded as one or more unsigned integers so that

the bit-parallelism can significantly reduce the execution time.

Since  is typically 64 bits in our scheme, the whole vector can be

held in two 32-bit integers. Therefore, in our scheme where is typically 64 bits in our scheme, the whole vector can be

held in two 32-bit integers. Therefore, in our scheme where  ,

estimation of the fan-out of each source only needs 8

bitwise-OR operations between 32-bit integers.

We also need to count the number of ``0''s in a vector (to get ,

estimation of the fan-out of each source only needs 8

bitwise-OR operations between 32-bit integers.

We also need to count the number of ``0''s in a vector (to get  values). This can be sped up significantly by using a pre-computed

table (in SRAM) of size 262,144 (

values). This can be sped up significantly by using a pre-computed

table (in SRAM) of size 262,144 (

) bits that stores

the number of ``0''s

in all 16-bit numbers. Our estimation of the execution time shows

that our scheme is fast enough to support OC-768 operations. ) bits that stores

the number of ``0''s

in all 16-bit numbers. Our estimation of the execution time shows

that our scheme is fast enough to support OC-768 operations.

3 Accuracy analysis

In this section we first briefly explain the rationale behind setting  to

to  in the estimator and then analyze the accuracy of our estimator

rigorously.

We set in the estimator and then analyze the accuracy of our estimator

rigorously.

We set  (the number of ``column'' hash functions) to 3 due to the

following two considerations.

First, we mentioned before that if two sources (the number of ``column'' hash functions) to 3 due to the

following two considerations.

First, we mentioned before that if two sources  and and  both

are hashed to the same both

are hashed to the same  columns, our decoding algorithm will give

us an estimate of their total fan-out, when we use columns, our decoding algorithm will give

us an estimate of their total fan-out, when we use

or or  to lookup the 2D array. We certainly would like the

probability with which

this scenario occurs to be as small as possible. This can be achieved

by making to lookup the 2D array. We certainly would like the

probability with which

this scenario occurs to be as small as possible. This can be achieved

by making  as large as possible. However, larger as large as possible. However, larger  implies

larger computational and storage complexities at the online streaming

module. We will show that implies

larger computational and storage complexities at the online streaming

module. We will show that  makes the probability of the

aforementioned hash collision very small, and at the same time keeps

the computational and storage complexities of our scheme modest. makes the probability of the

aforementioned hash collision very small, and at the same time keeps

the computational and storage complexities of our scheme modest.

Now we derive  , the probability that at least two sources

happen to hash to the same set of , the probability that at least two sources

happen to hash to the same set of  columns by columns by  , ,

, ..., , ...,  . It is not hard to show, using straightforward

combinatorial arguments, that . It is not hard to show, using straightforward

combinatorial arguments, that

, where , where  is the total number of the distinct sources

during the measurement epoch. We observe that, given typical values for is the total number of the distinct sources

during the measurement epoch. We observe that, given typical values for

and and  , ,  is quite large when is quite large when  , but drops to a very low

value when , but drops to a very low

value when  . For example, when . For example, when  K and K and  , ,  is close to

is close to  when when  , but drops to , but drops to  when when  . .

The following theorem characterizes the variance of the estimator in

Formula 6, which is also its approximate mean square

error (MSE), since the estimator is almost unbiased and the impact of

(discussed above) on the estimation error is very small when (discussed above) on the estimation error is very small when

. Its proof can be found in [24]. This is an extension

of our previous variance analysis in [23] which is derived

for the special case . Its proof can be found in [24]. This is an extension

of our previous variance analysis in [23] which is derived

for the special case  .

Let .

Let  denote denote  , which is the load factor of the bit

vector(of size , which is the load factor of the bit

vector(of size  ) when the corresponding set ) when the corresponding set  of

source-destination pairs are hashed into it, in the following theorem. of

source-destination pairs are hashed into it, in the following theorem.

Theorem 3

The variance of

is given by

where is given by

where

. .

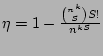

Figure 6:

Distribution of the estimation from Monte-Carlo simulation ( b, b,  and and  ). ).  is the standard deviation computed from Theorem 3 is the standard deviation computed from Theorem 3

|

An example distribution of

with respect to the actual

value with respect to the actual

value  is shown in Figure 6. We obtained this

distribution with 20,000 runs of the Monte-Carlo simulations with the

following parameters. In this empirical distribution, the actual

fan-out is shown in Figure 6. We obtained this

distribution with 20,000 runs of the Monte-Carlo simulations with the

following parameters. In this empirical distribution, the actual

fan-out  is 50, the size of the column vector is 50, the size of the column vector  is 64 bits.

The load factor is set to is 64 bits.

The load factor is set to

for for

. Also,

since the sets . Also,

since the sets  , ,  , and , and  have 50 flows in common, we

set have 50 flows in common, we

set

, for , for

. Here we implicitly assume that pairs of them do not have any

flows in common other than these 50 flows, which happens with very

high probability ( . Here we implicitly assume that pairs of them do not have any

flows in common other than these 50 flows, which happens with very

high probability (

) anyway given a

large ) anyway given a

large  (e.g., 16K).

In this example the standard deviation (e.g., 16K).

In this example the standard deviation  is around is around  as

computed from Theorem 3. We observe that the size of

the tail that falls outside 2 standard deviations from the mean is

very small ( as

computed from Theorem 3. We observe that the size of

the tail that falls outside 2 standard deviations from the mean is

very small ( ). This shows that, with high probability, our

estimator will not deviate too much from the actual value. In

Section 6, the trace-driven experiments on the

real-world Internet traffic will further validate this. ). This shows that, with high probability, our

estimator will not deviate too much from the actual value. In

Section 6, the trace-driven experiments on the

real-world Internet traffic will further validate this.![[*]](footnote.png)

Note that given the size of  , our scheme is only able to accurately

estimate fan-out values up to , our scheme is only able to accurately

estimate fan-out values up to

, because if the actual

fan-out , because if the actual

fan-out  is much larger than that, we will see all 1's in the

corresponding column vectors with high probability (due to the result

of the ``coupon collector's problem'' [13]). In this

case, the only information we can obtain about is much larger than that, we will see all 1's in the

corresponding column vectors with high probability (due to the result

of the ``coupon collector's problem'' [13]). In this

case, the only information we can obtain about  is that it is no

smaller than is that it is no

smaller than  . Fortunately, for the purpose of detecting

super sources, this information is good enough for us to declare . Fortunately, for the purpose of detecting

super sources, this information is good enough for us to declare  a

super source, as long as the threshold for super sources is much

smaller than a

super source, as long as the threshold for super sources is much

smaller than  . However, in some applications (e.g.,

estimating the spreading speed of a worm), we may also want to know

the approximate fan-out value. This can be achieved using a

multi-resolution extension of our advanced scheme. The methodology

of multi-resolution is quite standard and has been used in

several recent works [6,12,11]. The extension in

our context is straightforward. We omit its detailed specifications

here in interest of space. . However, in some applications (e.g.,

estimating the spreading speed of a worm), we may also want to know

the approximate fan-out value. This can be achieved using a

multi-resolution extension of our advanced scheme. The methodology

of multi-resolution is quite standard and has been used in

several recent works [6,12,11]. The extension in

our context is straightforward. We omit its detailed specifications

here in interest of space.

4 Identity sampling module

The purpose of this module is to capture the identities of

potential super sources

that will be used to look up the 2D array to get their

fan-out estimations. The filtering after sampling technique

proposed in Section 3 is adopted here with a slightly

different recording strategy. Instead of constructing a hash table to

record the sources and their fan-out estimation, here we only record

the source identities sequentially in the DRAM. Since this strategy

avoids expensive hash table operations and sequential writes to DRAM

can be made very fast (using burst mode), very high sampling rate can

be achieved.

With commodity  SRAM and SRAM and  DRAM, this recording strategy

will be able to process more than 12.5 million packets per second. At

this speed, we can record 100% and 25% flow labels for OC-192 and

OC-768 links respectively. With such a high sampling rate, the

probability that the identity of a real super source misses sampling is

very low. For example, given 25% sampling rate the probability that

a source with fan-out 50 fails to be recorded is only DRAM, this recording strategy

will be able to process more than 12.5 million packets per second. At

this speed, we can record 100% and 25% flow labels for OC-192 and

OC-768 links respectively. With such a high sampling rate, the

probability that the identity of a real super source misses sampling is

very low. For example, given 25% sampling rate the probability that

a source with fan-out 50 fails to be recorded is only

(= (=

). ).

5 Estimating outstanding fan-outs

In this section we describe how to extend the advanced scheme to

detect the sources that have contacted but have not obtained

acknowledgments from a large number of distinct destinations (i.e.,

the sources with large outstanding fan-outs).

Although both of our schemes have the potential to support this

extension we focus on the advanced scheme in this work and leave the

extension of the simple scheme for future research. In the following

sections we show how to slightly modify the operations of the online

streaming module and the estimation module of the advanced scheme for

estimating outstanding fan-outs. The sampling module does not need to

be modified.

Figure 7:

Algorithm for updating the 2D bit array  to record ACK packets to record ACK packets

|

|

The online streaming module employs two 2D bit arrays  and and

of identical size and shape.

The array of identical size and shape.

The array  encodes the fan-outs of sources in traffic in one

direction (called ``outbound'') in the same way as in the advanced

scheme (shown in Figure 5). The array encodes the fan-outs of sources in traffic in one

direction (called ``outbound'') in the same way as in the advanced

scheme (shown in Figure 5). The array  encodes the

fan-ins of the destinations of the acknowledgment packets in the

opposite direction (called ``inbound'').

Its encoding algorithm is shown in Figure 7. It

is a transposed version of the algorithm shown in

Figure 5 in the sense that all occurrences of

`` encodes the

fan-ins of the destinations of the acknowledgment packets in the

opposite direction (called ``inbound'').

Its encoding algorithm is shown in Figure 7. It

is a transposed version of the algorithm shown in

Figure 5 in the sense that all occurrences of

`` '' are replaced with with `` '' are replaced with with `` '' and `` '' and `` ''

with `` ''

with `` ''. This transposition is needed since a source in

the outbound traffic appears in the inbound acknowledgment traffic as

a destination, and after transposing two packets that belong to a flow

and its ``acknowledgment flow'' respectively will result in a write of

``1'' to the same bit locations in ''. This transposition is needed since a source in

the outbound traffic appears in the inbound acknowledgment traffic as

a destination, and after transposing two packets that belong to a flow

and its ``acknowledgment flow'' respectively will result in a write of

``1'' to the same bit locations in  and and  respectively. This

allows us to essentially take a ``difference'' between respectively. This

allows us to essentially take a ``difference'' between  and and  to

obtain the decoding of outstanding fan-outs of various sources, shown

next. to

obtain the decoding of outstanding fan-outs of various sources, shown

next.

We compute the

bitwise-OR

of  and and  , denoted as , denoted as  . For each source . For each source

, we

decode its fan-out from , we

decode its fan-out from  using the same decoding algorithm as

described in Section 4.2. Similarly, we decode its

fan-in in the acknowledgment traffic from using the same decoding algorithm as

described in Section 4.2. Similarly, we decode its

fan-in in the acknowledgment traffic from  . Our estimator of

the outstanding fan-out of . Our estimator of

the outstanding fan-out of  is simply the former subtracted by the latter. is simply the former subtracted by the latter.

Now we explain why this estimator will provide an accurate estimate of

the outstanding fan-out of a source  . Let . Let  be the set of

flows whose source is `` be the set of

flows whose source is `` '' in the outbound traffic. Let '' in the outbound traffic. Let  be

the set of flows whose destination is `` be

the set of flows whose destination is `` '' in the inbound

acknowledgment traffic. Clearly the quantity we would like to

estimate is simply '' in the inbound

acknowledgment traffic. Clearly the quantity we would like to

estimate is simply

. The correctness of our estimator is

evident from the following three facts: (a) . The correctness of our estimator is

evident from the following three facts: (a)

is equal to is equal to

; (b) decoding from ; (b) decoding from  will result

in a fairly accurate estimate of will result

in a fairly accurate estimate of

and (c) decoding

from and (c) decoding

from  will result in a fairly accurate estimate of will result in a fairly accurate estimate of  . .

6 Evaluation

Table 1:

Traces used in our evaluation. Note that source is

, destination is , destination is  and flow label is the distinct source-destination pair. and flow label is the distinct source-destination pair.

|

Trace |

# of sources |

# of flows |

# of packets |

IPKS |

119,444 |

151,260 |

1,459,394 |

IPKS |

96,330 |

125,126 |

1,655,992 |

|

USC |

84,880 |

106,626 |

1,500,000 |

|

UNC |

55,111 |

101,398 |

1,495,701 |

|

In this section, we evaluate the proposed schemes using real-world

Internet traffic traces. Our experiments are grouped into three parts

corresponding to the three algorithms presented: the simple scheme,

the advanced scheme, and its extension to estimate outstanding

fan-outs. The experimental results show that our schemes allow for

accurate estimation of fan-outs and hence the precise detection of super

sources.

1 Traffic Traces and Flow definitions

Figure 8:

Actual vs. estimated fan-outs of sources by the simple scheme given the flow sampling rate 1/4. Notice both axes are on logscale.

[trace IPKS+]

![\includegraphics[scale=0.4]{compare_mve_0.25.IPKS+.eps}](img159.png)

[trace UNC]

![\includegraphics[scale=0.4]{compare_mve_0.25.UNC.eps}](img160.png)

[trace USC]

![\includegraphics[scale=0.4]{compare_mve_0.25.USC.eps}](img161.png)

|

Trying to make the experimental data as

representative as possible, we use packet header traces gathered at

three different locations of the Internet, namely, University of North

Carolina (UNC), University of Southern California (USC), and

NLANR. The trace form UNC was collected on a 1 Gbps access link

connecting the campus to the rest of the Internet, on Thursday, April

24, 2003 at 11:00 am. The trace from USC was collected at their Los

Nettos tracing facility on Feb. 2, 2004. We also use a pair of

unidirectional traces from NLANR:  and and  , collected

simultaneously on both directions of an OC192c link on June 1,

2004. The link connects Indianapolis (IPLS) to Kansas City (KSCY)

using Packet-over-SONET. This pair of traces is especially valuable

to evaluate the extended advanced scheme for estimating outstanding

fan-outs. All the above traces are either publicly available or

available for research purposes upon request. Table 1

summarizes all the traces used in the evaluation. We will use , collected

simultaneously on both directions of an OC192c link on June 1,

2004. The link connects Indianapolis (IPLS) to Kansas City (KSCY)

using Packet-over-SONET. This pair of traces is especially valuable

to evaluate the extended advanced scheme for estimating outstanding

fan-outs. All the above traces are either publicly available or

available for research purposes upon request. Table 1

summarizes all the traces used in the evaluation. We will use  , ,

and and  to evaluate our simple scheme and advanced scheme

and use the concurrent traces to evaluate our simple scheme and advanced scheme

and use the concurrent traces  and and  to evaluate the

extension. to evaluate the

extension.

As mentioned before, a source/destination label can be any combination

of source/destination fields from the IP header. Two different

definitions of source and destination labels are used in our

experiments, targeting different applications. In the first

definition, source label is the tuple  src_IP, src_port src_IP, src_port and

destination label is and

destination label is  dst_IP dst_IP . This definition targets

applications such as detecting worm propagation and locating popular

web servers. The flow statistics displayed in Table 1

use this definition. In the second definition, Second, source label

is . This definition targets

applications such as detecting worm propagation and locating popular

web servers. The flow statistics displayed in Table 1

use this definition. In the second definition, Second, source label

is  src_IP src_IP and destination label is the tuple and destination label is the tuple  dst_IP,

dst_port dst_IP,

dst_port . This definition targets applications such as detecting

infected sources that conduct port scans. The experimental results

presented in this section use the first definition of source and

destination labels unless noted otherwise. . This definition targets applications such as detecting

infected sources that conduct port scans. The experimental results

presented in this section use the first definition of source and

destination labels unless noted otherwise.

In this section, we evaluate the accuracy of the simple scheme in

estimating the fan-outs of sources and in detecting super sources.

Figure 8 compares the fan-outs of the sources

estimated using our simple scheme with the their actual fan-outs in

traces  , ,  , and , and  respectively. In these experiments,

a flow sampling rate of respectively. In these experiments,

a flow sampling rate of  and a bit array of size and a bit array of size  bits is

used. The figure only plots the points whose actual fan-out values

are above 15 since lower values (i.e., bits is

used. The figure only plots the points whose actual fan-out values

are above 15 since lower values (i.e.,  ) are not interesting for

finding super sources.

The solid diagonal line in each figure denotes perfect estimation,

while the dashed lines denote an estimation error of ) are not interesting for

finding super sources.

The solid diagonal line in each figure denotes perfect estimation,

while the dashed lines denote an estimation error of  . The

dashed lines are parallel to the diagonal line since both x-axis and

y-axis are on the log scale. Clearly the closer the points cluster

around the diagonal, the more accurate the estimation is. We observe that

the simple scheme achieves reasonable accuracy for relatively large

fan-outs in all three traces. Figure 8 also

reflects the false positives and negatives in detecting super sources.

For a given threshold 50, the points that fall in ``Area I''

corresponds to false positives, i.e., the sources whose actual

fan-outs are less than the threshold but the estimated fan-outs are

larger than the threshold. Similarly, the points that fall in ``Area

II'' corresponds to false negatives, i.e., the sources whose

actual fan-outs are larger than the threshold but the estimated

fan-outs are smaller than the threshold. We observe that in

Figure 8, points rarely fall into Areas I and II (i.e., very few false

positives and negatives . The

dashed lines are parallel to the diagonal line since both x-axis and

y-axis are on the log scale. Clearly the closer the points cluster

around the diagonal, the more accurate the estimation is. We observe that

the simple scheme achieves reasonable accuracy for relatively large

fan-outs in all three traces. Figure 8 also

reflects the false positives and negatives in detecting super sources.

For a given threshold 50, the points that fall in ``Area I''

corresponds to false positives, i.e., the sources whose actual

fan-outs are less than the threshold but the estimated fan-outs are

larger than the threshold. Similarly, the points that fall in ``Area

II'' corresponds to false negatives, i.e., the sources whose

actual fan-outs are larger than the threshold but the estimated

fan-outs are smaller than the threshold. We observe that in

Figure 8, points rarely fall into Areas I and II (i.e., very few false

positives and negatives![[*]](footnote.png) ). ).

While this scheme works well with 1/4 sampling rate, it cannot produce

good estimations with smaller sampling rates (e.g., 1/16. We omit the

experimental results here due to lack of space.).

However such lower sampling rates might be necessary to keep up

with very high link speeds such as 40 Gbps (OC-768).

We repeat the above experiment under the aforementioned second

definition of source and destination, in which the source label is

src_IP src_IP and destination label is and destination label is  dst_IP, dst_port dst_IP, dst_port .

Figure 9 plots the estimated fan-outs of

sources in trace IPKS+. With this definition the trace .

Figure 9 plots the estimated fan-outs of

sources in trace IPKS+. With this definition the trace  has

9,359 sources and 140,140 distinct source-destination pairs. We can

see from the figure that our estimation is also quite accurate with

this second definition of source and destination. has

9,359 sources and 140,140 distinct source-destination pairs. We can

see from the figure that our estimation is also quite accurate with

this second definition of source and destination.

Figure 9:

Actual vs. estimated fan-out of

sources for trace  with flow sampling rate 1/4. The

aforementioned second definition of source and destination labels is

used here. Note that both x-axis and y-axis are on logscale. with flow sampling rate 1/4. The

aforementioned second definition of source and destination labels is

used here. Note that both x-axis and y-axis are on logscale.

|

3 Accuracy of the advanced scheme

In this section we evaluate the accuracy of the advanced scheme using

both trace-driven simulation and theoretical analysis. The estimation

accuracy of the advanced scheme is a function of the various design

parameters, including the size and shape of the 2D bit array  (i.e., the number of rows

(i.e., the number of rows  and columns and columns  ) and the number of hash

functions ( ) and the number of hash

functions ( ). ).

In the experiments we set the size of  to 128KB (64 rows to 128KB (64 rows

16,384 columns), 16,384 columns),  and the flow sampling rate to 1.

This configuration is very space-efficient. For example it only uses

7 bits per flow on the average for the trace and the flow sampling rate to 1.

This configuration is very space-efficient. For example it only uses

7 bits per flow on the average for the trace  . .

Figure 10:

Actual vs. estimated fan-out of sources by