
Effect of Double Synchronous Writes

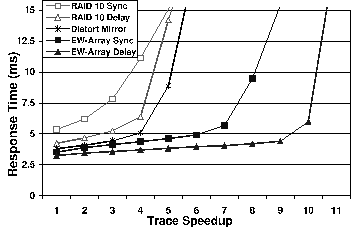

Figure 12: Effect on TPC-C throughput as we write to two disks synchronously. The total number of disks is constant (36). Labels that include the word "Delay" denote experiments that propagate all replicas but the first in the background. Labels that include the word "Sync" denote experiments that write two replicas synchronously.


In the previous experiments, all replicas but the first are propagated in the background. To raise the degree of reliability, one may want two copies to be physically on disk before a write request is allowed to return.
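
The difference between the "Delay" and "Sync" configurations can be pictured with a small response-time model. The sketch below is a hypothetical illustration, not the MimdRAID implementation: `replicated_write`, `WriteResult`, and the per-replica service times are assumptions. It assumes that the synchronous replicas are written in parallel to distinct disks, so a request waits only for the slowest of them, while the remaining replicas are left for background propagation.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class WriteResult:
    response_time_ms: float  # time until the write request may return
    background_replicas: List[int] = field(default_factory=list)  # replica indices propagated later


def replicated_write(service_times_ms: List[float], sync_copies: int) -> WriteResult:
    """Hypothetical model of a replicated write.

    Replica i takes service_times_ms[i] on its own disk. The first
    `sync_copies` replicas are issued in parallel in the foreground, and the
    request returns once they are all on disk; the others are propagated in
    the background and do not contribute to the response time.
    """
    if not 1 <= sync_copies <= len(service_times_ms):
        raise ValueError("sync_copies must be between 1 and the number of replicas")
    foreground = service_times_ms[:sync_copies]
    background = list(range(sync_copies, len(service_times_ms)))
    # Parallel writes to distinct disks: wait for the slowest foreground replica.
    return WriteResult(max(foreground), background)


# "Delay"-style: one synchronous copy; "Sync"-style: two synchronous copies.
print(replicated_write([4.0, 9.0, 6.0], sync_copies=1))
print(replicated_write([4.0, 9.0, 6.0], sync_copies=2))
```

With service times of 4, 9, and 6 ms, returning after one replica gives a 4 ms response, whereas requiring two synchronous copies gives 9 ms, because the request must now wait for the slower of the two foreground writes.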

We now study the effect of increasing the number of foreground writes on three alternative configurations: a RAID-10, a Doubly Distorted Mirror (DDM), and an EW-Array. The DDM performs two synchronous eager-writes and moves one of the two copies to a fixed location in the background. We note that the system we call the DDM is in fact a highly optimized implementation that builds on the MimdRAID disk location prediction mechanism and the eager-writing scheduling algorithms, features that the simpler, simulation-based original study [19] does not detail beyond the phrase "write anywhere." (We do not consider SR-Arrays because the pure form of an SR-Array involves only intra-disk replication, which does not increase the reliability of the system.)
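
To make the DDM's write path concrete, the following toy model assumes a single track of `SLOTS_PER_TRACK` slots per disk and ignores seeks; an eager write simply takes the free slot that will next rotate under the head. `nearest_free_slot`, `ToyDDM`, and the track geometry are hypothetical names chosen for illustration. The actual eager-writing scheduler also considers nearby tracks and relies on disk location prediction, which this sketch does not model.

```python
from collections import deque
from typing import Deque, List, Set, Tuple

SLOTS_PER_TRACK = 100  # hypothetical track geometry (slots per revolution)


def nearest_free_slot(head_pos: int, free: Set[int]) -> Tuple[int, int]:
    """Eager-write placement: return (slot, delay_in_slots) for the first free
    slot that will rotate under the head, starting from head_pos."""
    if not free:
        raise ValueError("no free slot on this track")
    slot = min(free, key=lambda s: (s - head_pos) % SLOTS_PER_TRACK)
    return slot, (slot - head_pos) % SLOTS_PER_TRACK


class ToyDDM:
    """Hypothetical two-disk Doubly Distorted Mirror model."""

    def __init__(self, free0: Set[int], free1: Set[int]) -> None:
        self.free: List[Set[int]] = [set(free0), set(free1)]
        # Pending relocations: (block, disk, current_slot, home_slot).
        self.background: Deque[Tuple[int, int, int, int]] = deque()

    def write(self, block: int, home_slot: int, heads: List[int]) -> int:
        """Perform two synchronous eager writes and return the foreground delay
        in slots; the copy on disk 1 is queued for relocation to home_slot."""
        placed, delays = [], []
        for disk in (0, 1):
            slot, delay = nearest_free_slot(heads[disk], self.free[disk])
            self.free[disk].discard(slot)
            placed.append(slot)
            delays.append(delay)
        # A background task (not modeled here) later moves this copy to its fixed home location.
        self.background.append((block, 1, placed[1], home_slot))
        # Both eager writes run in parallel on different disks, so the request waits for the slower one.
        return max(delays)
```

For example, with the head of disk 0 at slot 45 and free slots {10, 50, 90}, the eager write lands in slot 50 after a 5-slot delay; the relocation to the home slot is deferred, so it adds nothing to the foreground response time in this model.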

As expected, an extra foreground write slows down both the RAID-10 and the EW-Array. However, for a given request arrival rate that does not cause performance collapse, the response time degradation experienced by the RAID-10 is more pronounced than that seen on the EW-Array. This is because an extra update-in-place foreground write costs relatively more than an extra foreground eager-write. The performance of the DDM lies in between: its two foreground writes enjoy some of the performance benefit of eager-writing, but the extra update-in-place write becomes costly, especially when the request arrival rate is high. One purpose of this third, update-in-place write is to restore data locality that the eager-writes may have destroyed. This is useful for workloads that exhibit both greater burstiness and locality. Unfortunately, the TPC-C workload does not benefit from this data reorganization.
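
A back-of-the-envelope model helps explain why an extra update-in-place write hurts more than an extra eager-write. Assume, purely for illustration, that each write's positioning delay is uniform on [0, D] and independent across disks, where D is a full rotation for an update-in-place write but only the short distance to the nearest free slot for an eager-write. Waiting for k parallel writes means waiting for the maximum, whose mean is kD/(k+1), so going from one to two synchronous writes adds D/6. The function names and parameter values below are hypothetical, and the model ignores seeks and queueing, both of which matter in the measured system.

```python
import random


def analytic_wait(delay_bound_ms: float, sync_writes: int) -> float:
    """Mean of the maximum of `sync_writes` i.i.d. Uniform(0, delay_bound_ms) delays."""
    return sync_writes * delay_bound_ms / (sync_writes + 1)


def simulated_wait(delay_bound_ms: float, sync_writes: int,
                   trials: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo check of analytic_wait: the request returns when the slowest
    of its parallel synchronous writes finishes."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        total += max(rng.uniform(0.0, delay_bound_ms) for _ in range(sync_writes))
    return total / trials


ROTATION_MS = 6.0     # hypothetical: one revolution at 10,000 RPM
EAGER_BOUND_MS = 1.0  # hypothetical: delay bound to the nearest free slot

for name, bound in (("update-in-place", ROTATION_MS), ("eager-write", EAGER_BOUND_MS)):
    extra = analytic_wait(bound, 2) - analytic_wait(bound, 1)
    print(f"{name}: extra wait for a second sync write = {extra:.2f} ms")
```

Under these assumed parameters, the second synchronous update-in-place write adds about 1.00 ms on average, while the second eager-write adds about 0.17 ms: the extra cost scales with D, and D is much smaller when the write can go to the nearest free location.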



Chi Zhang

2001-11-16