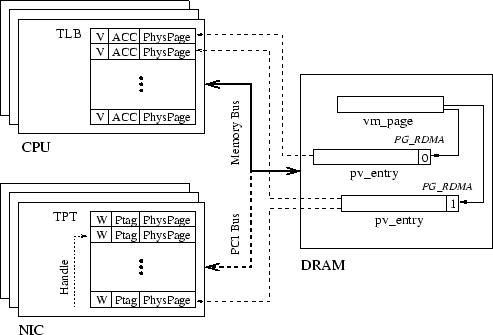

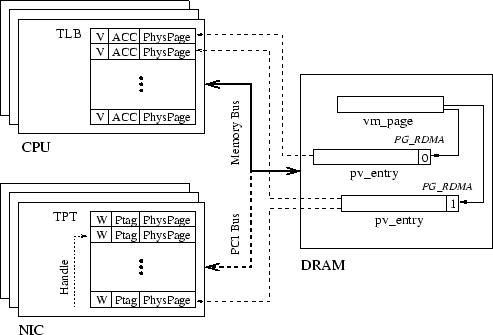

Memory-to-memory NICs store virtual-to-physical address translations and access rights for all user and kernel memory regions that the NIC can address and access directly. Figure 2 shows a system combining both CPU and NIC memory management hardware. Main CPUs use their on-chip translation lookaside buffer (TLB) to translate virtual to physical addresses. A typical TLB page entry includes a number of bits, such as V and ACC, signifying whether the page translation is valid and what the access rights to the page are, along with the physical page number. A miss on a TLB lookup requires a page table lookup in main memory. NICs on the PCI (or other I/O) bus have their own translation and protection tables (TPT) [5]. Each entry in the TPT includes bits enabling RDMA Read or Write operations on the page (e.g., the W bit in the diagram), the physical page number, and a Ptag value identifying the process that owns the page (or the kernel). Whereas the TLB is a high-speed associative memory, the TPT is usually implemented as a DRAM module on the NIC board. To accelerate lookups on the TPT, remote memory access requests carry a Handle index that helps the NIC find the right TPT entry.
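As a rough illustration of the lookup just described, the following C sketch models a TPT as an array indexed directly by the Handle. The field names, bit values, the presence of a valid bit in the TPT entry, and the assumption that each request carries the requester's Ptag are all hypothetical; the text specifies only that an entry holds RDMA access bits, a physical page number, and a Ptag.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical bit assignments; only the W bit is named in the text. */
#define TPT_V 0x1u   /* entry holds a valid translation (assumed) */
#define TPT_R 0x2u   /* RDMA Read permitted on the page */
#define TPT_W 0x4u   /* RDMA Write permitted on the page */

struct tpt_entry {
    uint32_t flags;  /* combination of TPT_V, TPT_R, TPT_W */
    uint32_t ptag;   /* owner of the page: a process or the kernel */
    uint64_t ppn;    /* physical page number */
};

struct tpt {
    struct tpt_entry *entries;
    size_t nentries;
};

/*
 * Translate one remote access. The Handle carried by the request indexes
 * the table directly, so no associative search is needed. On success the
 * physical page number is stored in *ppn_out and true is returned.
 */
bool
tpt_lookup(const struct tpt *t, size_t handle, uint32_t ptag,
    bool is_write, uint64_t *ppn_out)
{
    assert(t != NULL && ppn_out != NULL);
    if (handle >= t->nentries)
        return false;

    const struct tpt_entry *e = &t->entries[handle];
    uint32_t need = TPT_V | (is_write ? TPT_W : TPT_R);

    /* Reject invalid entries, missing rights, and foreign owners. */
    if ((e->flags & need) != need || e->ptag != ptag)
        return false;

    *ppn_out = e->ppn;
    return true;
}
```

A read through handle 0 with a matching Ptag succeeds in this model, whereas a write to a read-only entry, or any access with a different Ptag, is rejected.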

This section focuses on operating system support to integrate NIC memory management units in the FreeBSD VM system. The main benefits of this integration are

In FreeBSD (and other systems deriving from 4.4BSD), a physical page is represented by a vm_page structure and an address space by a vm_map structure. A page may be mapped on one or more vm_maps with each mapping represented by a pv_entry structure. Figure 2 shows a vm_page with two associated pv_entry structures in main memory.

In our design, the VM system maintains the NIC MMU via the OS-NIC interface. The NIC accesses VM structures via the NIC-OS interface.

OS-NIC Interface. The OS needs to interact with the NIC to add, remove and modify VM mappings stored in its TPT. A mapping of a VM page that is expected to be used in RDMA has to be registered with the NIC. Registration happens in pmap, right after the CPU mapping with a vm_map is established. The NIC exports low-level VM primitives (Table 3) for use by pmap to add, remove and modify TPT entries. NIC mappings may later be deregistered (when the original mapping is removed or invalidated), or have their protection changed.

To keep track of VM mappings that have been registered with the NIC, we add a PG_RDMA bit to the pv_entry structure, which is set whenever a pv_entry has a NIC mapping as well as a CPU mapping. In Figure 2, the pv_entry with the PG_RDMA bit set has both a CPU and a NIC mapping. In all pmap operations on VM pages, the pmap module interacts with the NIC only if the PG_RDMA flag is set on the pv_entry.

| Function | Description |
| --- | --- |
| tpt_init() | Initialize |
| tpt_enter() | Insert mapping |
| tpt_remove() | Remove mapping |
| tpt_protect() | Protect mapping |

Higher-level code can trigger registration of a virtual memory region with the NIC by propagating appropriate flags from higher to lower-level interfaces and eventually to the pmap. For example, the DAFS server sets an IO_RDMA bit in the ioflags parameter of the vnode interface (Table 2) when planning to use the buffer for RDMA. This eventually translates into a VM_RDMA flag in the pmap_enter() interface that results in mappings being registered with the NIC.
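The flag propagation described above might look roughly like the following layered sketch. The numeric values of IO_RDMA and VM_RDMA, the three `sketch_*` functions, and the `nic_registered` variable are all hypothetical stand-ins; the real vnode, VM and pmap interfaces take many more arguments.

```c
#include <assert.h>
#include <stdbool.h>

#define IO_RDMA 0x0800   /* vnode-level hint in ioflags; value assumed */
#define VM_RDMA 0x0008   /* pmap-level flag; value assumed */

static bool nic_registered;  /* records whether the lowest layer saw VM_RDMA */

/* Lowest layer: stands in for pmap_enter(), which registers with the NIC. */
void
sketch_pmap_enter(int vmflags)
{
    /* This sketch models a single flag only. */
    assert((vmflags & ~VM_RDMA) == 0);
    nic_registered = (vmflags & VM_RDMA) != 0;
}

/* Middle layer: stands in for the VM code that translates the hint. */
void
sketch_vm_map_buffer(int ioflags)
{
    sketch_pmap_enter((ioflags & IO_RDMA) ? VM_RDMA : 0);
}

/* Top layer: stands in for a vnode operation issued by the DAFS server. */
bool
sketch_vnode_read(int ioflags)
{
    sketch_vm_map_buffer(ioflags);
    return nic_registered;
}
```

Calling `sketch_vnode_read(IO_RDMA)` ends with the mapping registered, while `sketch_vnode_read(0)` leaves the NIC untouched.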

A problem with invalidating pv_entry mappings that have also been registered with the NIC is that NIC invalidations may need to be delayed for as long as RDMA transfers using the mappings are in progress. This complicates pmap_remove_all(), which (for atomicity) has to try to remove pv_entry structures with NIC mappings first and may eventually fail if the NIC invalidations cannot complete within a reasonable amount of time.
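One way to picture the NIC-first ordering and the bounded wait is the sketch below. It is a simplified model under stated assumptions: `sketch_tpt_try_remove()`, the `rdma_inflight` counter, and the `max_tries` bound are hypothetical, and a real implementation would sleep or poll the NIC rather than decrement a counter.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define PG_RDMA 0x1u     /* bit value assumed */

struct pv_entry {
    unsigned flags;      /* PG_RDMA if registered with the NIC */
    int  rdma_inflight;  /* transfers still using the NIC mapping (modelled) */
    bool mapped;         /* CPU mapping still present */
};

/* Hypothetical NIC call: fails while transfers are in progress. */
bool
sketch_tpt_try_remove(struct pv_entry *pv)
{
    if (pv->rdma_inflight > 0) {
        pv->rdma_inflight--;   /* model one transfer completing per attempt */
        return false;
    }
    pv->flags &= ~PG_RDMA;
    return true;
}

/*
 * Remove every mapping of a page. NIC mappings are invalidated first; if
 * one of them cannot be invalidated within max_tries attempts, the call
 * fails before any CPU mapping has been touched. NIC mappings removed
 * earlier in the same call are not restored in this sketch.
 */
bool
sketch_pmap_remove_all(struct pv_entry *pvs, size_t n, int max_tries)
{
    assert(pvs != NULL || n == 0);

    for (size_t i = 0; i < n; i++) {
        if (!(pvs[i].flags & PG_RDMA))
            continue;
        int tries = 0;
        while (!sketch_tpt_try_remove(&pvs[i])) {
            if (++tries >= max_tries)
                return false;
        }
    }

    /* All NIC mappings are gone; the CPU mappings can now be removed. */
    for (size_t i = 0; i < n; i++)
        pvs[i].mapped = false;
    return true;
}
```

Handling the NIC mappings first means a timeout leaves the CPU mappings intact, so the caller can retry later or pick another page.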

Another problem is with VM system policies that are often based on architectural assumptions that do not hold with NIC characteristics. For example, the FreeBSD VM system unmaps pages from process page tables when moving them from an active to inactive or cached state. This is because the VM system is willing to take a reasonable number of reactivation faults to determine how active a page actually is, based on the assumption that reactivation faults are relatively inexpensive [7].

NIC reactivation faults are significantly more expensive compared to CPU faults due to lack of integration between the NIC and the host memory system. To reduce that cost, it would make sense to apply the deactivation policy only to CPU mappings, leaving NIC mappings intact for as long as VM pages are memory resident. However, full integration of the NIC memory management unit into the VM system argues for this policy to be equally applied to NIC page accesses.

NIC-OS Interface. The NIC initiates interaction with the VM system on the following occasions, using the interface of Table 4.