|

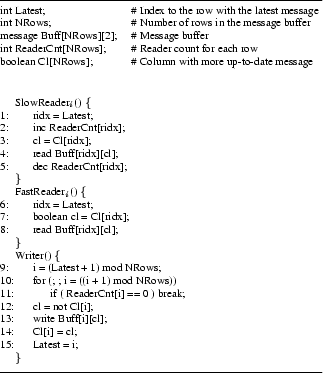

We now present a simple algorithm for partitioning the reader set into fast and slow readers, optimizing for minimum memory usage. The algorithm is shown in Figure 9, and can be used with any single-writer, multiple-reader IPC scheme improved with our transformation by simply changing a few constants to match the algorithm.

The algorithm initially sets all reader tasks to be slow readers. It keeps the tasks sorted by non-decreasing order of their NMax values, computed as with the NBW protocol (Section 2.2). It tries to move one task at a time from the slow reader set to the fast reader set, and recomputes the number of buffers needed, (S + F), where S is the requirement for the slow readers, and F for the fast readers. By keeping track of the setting with lowest memory use so far, after a single pass through all of the tasks, we obtain the Splitpoint, which indicates the last fast reader. All tasks with lower NMax values are also part of the fast reader set.

This partitioning of the reader set is optimal with respect to the number of message buffers. This is easy to show: take a partitioning that is space-optimal, and let task i be the fast reader with the largest NMax value. Now, all tasks with lower NMax values than task i must also be part of the fast reader set (otherwise, we can move them to the fast reader set; they will not affect the number of buffers needed for the fast readers (i.e., largest NMax value), but will reduce the slow reader set's buffer requirements, and the optimality assumption would be invalid). Since the above algorithm considers all partitions in which all tasks with less than a particular NMax value are in the fast reader set, the optimal partition will be found by the algorithm.

The partitioning algorithm uses certain constants that depend on the specific IPC mechanism used. For the initialization, Splitpoint is always set to NULL and F always set to 0, but MinNumBuff and S are both set to the number of buffers needed assuming that all tasks are slow readers. For the Improved Chen's algorithm, this is (P + 2), and for the Improved Double Buffer, it is 2(P + 1), where P is the number of tasks. Additionally, V is the number of buffers used for each additional slow reader, and is set to 1 and 2, for Chen's and the Double Buffer mechanisms, respectively.

We illustrate the partitioning algorithm using the sample task set in

Figure 10, which indicates the writer's period and

relative deadline, as well as the readers' periods (relative deadlines) and

computation times. RMax and NMax values, assuming CR is

negligible and the readers' relative deadlines are equal to their periods,

are also shown. Assuming Double Buffer algorithm, initially S = MinNumBuff = 16, F = 0, and all readers are in the slow reader set.

Tasks are moved one at a time according to their NMax values, so

first, Reader 0 is moved to the fast reader set. Now S = 14 and F = 3,

so (F + S) is not the lowest value seen, and Splitpoint is not

changed. We continue with Reader 1, resulting in S = 12 and F = 3, so

![]() MinNumBuff holds. Splitpoint is updated to Reader

1, and MinNumBuff is set to S + F. We repeat this with all of the

readers, in order. By the end, Splitpoint points to Reader 4, and

MinNumBuff = 10. So, with the first five readers as fast readers, we

achieve the minimum number of buffers required for this example, a 37.5%

reduction from the original algorithm.

MinNumBuff holds. Splitpoint is updated to Reader

1, and MinNumBuff is set to S + F. We repeat this with all of the

readers, in order. By the end, Splitpoint points to Reader 4, and

MinNumBuff = 10. So, with the first five readers as fast readers, we

achieve the minimum number of buffers required for this example, a 37.5%

reduction from the original algorithm.