Dandelion: Cooperative Content

Distribution with Robust Incentives

Michael

Sirivianos Jong

Han

Park Xiaowei

Yang Stanislaw

Jarecki

Department of Computer

Science

University of California, Irvine

Abstract:

Content distribution via the Internet is becoming increasingly

popular. To be cost-effective, commercial content providers are

considering the use of peer-to-peer (P2P) protocols such as BitTorrent

to save on bandwidth costs and to handle peak demands. However, when an

online content provider uses a P2P protocol, it faces a crucial issue:

how to incentivize its clients to upload to their peers.

This paper presents Dandelion, a system designed to address

this

issue in the case of paid content distribution. Unlike previous

solutions, most notably BitTorrent,

Dandelion provides robust (provably non-manipulable) incentives for

clients to upload to others. In addition, unlike systems with

tit-for-tat-based incentives, a client is motivated to upload to its

peers even if the peers do not have content that interests the client.

A client that honestly uploads to its peers is rewarded with credit,

which can be redeemed for various types of rewards, such as discounts

on paid content.

In designing Dandelion, we trade scalability for the ability to

provide robust incentives. The evaluation of our prototype system on

PlanetLab demonstrates the viability of our approach. A Dandelion

server that runs on commodity hardware with a moderate access link is

capable of supporting up to a few thousand clients. These clients can

download content at rates comparable to those of BitTorrent clients.

2 Introduction

Content distribution via the Internet is becoming increasingly popular

among the entertainment industry and the consumers alike. A survey

showed that

Apple's iTunes music store sold more music than Tower Records and

Borders in the US in the summer of 2005 [10]. A number

of key content producers, such as Universal, are now

launching download to own services [15].

However, the increasing demand for digital content is overwhelming the

infrastructure of online content providers [13].

An attractive approach for commercial online content distribution is

the use

of peer-to-peer (P2P) protocols. This approach does not

require a content provider to overprovision its bandwidth to handle

peak demands, nor does it require the provider to purchase service

from a third-party such as Akamai. Instead, a P2P protocol such as

BitTorrent [26]

harnesses its

clients' bandwidth for file distribution, and saves the bandwidth and

computing resources of a content provider. Leading content providers

such as Warner Bros [16]

and 20th Century Fox [11]

have now partnered with BitTorrent, Inc. EMI [12] has announced

a plan to launch a P2P music distribution service.

This recent trend indicates that P2P protocols enable a site to

cost-effectively distribute content.

When an online content provider uses a peer-to-peer protocol, it

faces a crucial issue: how to motivate clients that possess content to

upload to others. This issue is of paramount importance because the

performance of a P2P network is highly dependent on the users'

willingness to contribute their uplink

bandwidth. However, selfish (rational) users tend not to share their

bandwidth without external incentives [36].

Although the popular BitTorrent protocol has incorporated the

rate-based tit-for-tat

incentive mechanism for users to upload static content, this mechanism

bears two

weaknesses. First, it does not encourage clients to seed, i.e. to

upload to other peers after completing the file download. Second, it is

vulnerable to

manipulation [49,37,44,50,45],

allowing modified clients to free-ride and still achieve a better

downloading rate than compliant clients (Section 2.2).

The purpose of this work is to explore the design space of a

P2P content distribution protocol that addresses this issue. We present

the design and implementation of Dandelion, a cooperative

paid content distribution protocol that uses non-manipulable

virtual-currency-based incentives to encourage uploading and to address

free-riding.

Our protocol guarantees strict fair exchange of content uploads for

virtual currency (credit). A client cannot download content from selfish

peers (i.e. peers that do not upload unless they expect to be rewarded)

without paying credit, neither it can obtain credit for uploads it did

not perform. This protocol property provides robust incentives for

selfish peers to contribute

their bandwidth in the following two ways. First, credit can be

redeemed at a content provider for a discount on the content, or for

other types of monetary awards. Given appropriate pricing schemes, a

selfish client is motivated to serve content to its peers regardless of

whether its peers possess content that interests it. Second, the

protocol

prevents free-riding, because, provably, the only way a client can

obtain valid content from selfish peers or can earn credit is by paying

credit or uploading valid content, respectively.

Hence, we believe that our protocol can increase the aggregate upload

bandwidth of a P2P content distribution system and improve downloading

times.

The use of virtual currency for incentives has been proposed in several

P2P content distribution systems [7,3,52,29,8,53,17],

but a key challenge, how to make the virtual-currency-based system

efficient

and practical while robust to manipulation, is left unaddressed

(Sections 2.1

and 2.4).

We address this challenge based on the insight that in the problem

domain of online content distribution, the content provider itself is a

trusted third party and can mediate the content exchange between its

clients. Based on this observation, we design a protocol in which

clients exchange data for credit and the server mediates

this exchange. The server uses only efficient symmetric cryptography on

critical data paths and sends only short

messages to its clients.

Our work makes the following contributions:

1) An efficient cryptographic fair exchange scheme for trading data

uploads for virtual currency, which is suitable for P2P content

distribution. Our scheme is based on symmetric key cryptography, and is

provably robust to client cheating. A client that does not upload or

uploads garbage to its peers cannot claim credit. A client cannot

download correct

content from selfish peers without the client being charged and the

peers

rewarded.

2) The design and implementation of Dandelion. To the best of our

knowledge,

Dandelion is the first implemented P2P static content distribution

protocol that uses symmetric cryptography in order to provide robust

incentives for clients to upload paid

content to their peers. Our system's evaluation on PlanetLab [24]

identifies the scalability limits of our incentive mechanism and

demonstrates the plausibility of our approach.

In this paper, we use a BitTorrent-like terminology. A seeder

refers to a client that uploads to its peers despite not being

interested in the content being distributed (e.g. a client that

uploads although it has completed its file download). A leecher

refers to a client that is interested in the content

being distributed (e.g. a client that has not completed its file

download). A free-rider refers to a client that

only downloads from others but does not upload. A

swarm refers to all

clients that actively participate in the

protocol for a given content item.

The rest of this paper is organized as

follows. Section 2

describes existing incentive

mechanisms in P2P protocols, and cryptographic fair exchange schemes.

Section 3

describes the design of Dandelion. Section

4 analyzes the

security of our

design. Sections 5

and 6 present

our system's implementation and experimental evaluation, respectively.

We conclude in Section 7.

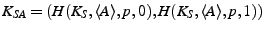

Figure 1:

Mean download completion times of

~200 CTorrent 1.3.4

leechers as a function of file size, for 1 initial seeder and

10 initial seeders. The mean file download completion times are

extracted

over 10 runs. Error bars correspond to 95% confidence intervals.

|

![\includegraphics[scale=0.70]{figures/filecompletiontime.vs.numseeders.vs.filesize/filecompletiontime.BitTorrent.vs.initialnumseeders.vs.filesize.eps}](img2.png) |

3 Background and Related

Work

In this section we motivate the design of Dandelion by

describing existing P2P content distribution incentive mechanisms and

their weaknesses. In addition, we discuss previous work on

cryptographic fair exchange.

3.1

Impact of Seeding

The popular BitTorrent protocol employs the rate-based tit-for-tat

incentive mechanism [26]. A

client unchokes (i.e. uploads to) at most four to ten clients for a

given file,

in parallel. Most of the unchoked peers are the peers that upload

useful parts of the file to the client at the fastest rates and are

interested in the client's content. The client also optimistically

unchokes one or two peers that are not among the fastest uploaders, in

expectation of future reciprocation.

The list of unchoked peers is typically revised every 10 seconds.

This mechanism mitigates free-riding but does not provide explicit

incentives for

seeding. Although several BitTorrent deployments rely on clients to

honestly report their uploading history [17], and

use this history

to decide which clients can join a swarm, practice has shown that

clients can fake their upload history reports [4].

In contrast, Dandelion's non-manipulable and centrally maintained

virtual currency enables a content distributor to reliably keep

record of the amount of content a selfish client has uploaded to its

peers.

The distributor can use this record to provide robust incentives for a

selfish client to upload to its peers regardless of whether the peers

have content that interests the client.

Seeders can substantially improve download completion times,

because they increase the file availability and the aggregate upload

bandwidth. Figure 1

shows the impact of seeders. We run two BitTorrent experiments on

PlanetLab, with one and ten initial seeders, respectively. Initial

seeders

are clients that have the complete file prior to the start of the

distribution.

In each experiment, we run  200 CTorrent 1.3.4 [2]

leechers on distinct PlanetLab nodes to simultaneously download a file.

Upon completion of their download, leechers remain online seeding the

file. As can be seen, the mean file download completion time decreases

considerably when there are ten initial seeders, especially for small

files of a few MB.

200 CTorrent 1.3.4 [2]

leechers on distinct PlanetLab nodes to simultaneously download a file.

Upon completion of their download, leechers remain online seeding the

file. As can be seen, the mean file download completion time decreases

considerably when there are ten initial seeders, especially for small

files of a few MB.

3.2

Free-riding in BitTorrent

A general observation is that since BitTorrent's tit-for-tat incentives

reward cooperative clients with improved download times, clients are

always incented to upload. Therefore, free-riding should not

be an issue in BitTorrent networks. This observation relies

on the assumption that users aim only at maximizing their

download rates. However, in practice, several BitTorrent users

can be reluctant to upload even if uploading improves their download

times.

For example, users with access providers that impose quotas on outgoing

traffic or users with limited uplink bandwidth (e.g. 1.5Mbps/128Kbps

ADSL)

may wish to save their uplink for other critical tasks.

Considering the tradeoff between performance and susceptibility to

free-riding

[31],

BitTorrent purposely does not implement a strict tit-for-tat (TFT)

strategy. In particular, it employs rate-based instead of chunk-level

TFT, and BitTorrent clients optimistically unchoke peers for relatively

long periods of time (10 to 30 seconds). Furthemore, BitTorrent seeders

select peers to upload to regardless of whether those peers upload to

others.

Based on the above observations and previous work on BitTorrent

exploitation

[37,44,49], in [50],

we modify a CTorrent-1.3.4 client to employ the "large view'' exploit

to

free-ride. The client obtains a larger than normal view of the swarm,

either

by repeatedly requesting partial views from the BitTorrent tracker or

by exchanging

views with its peers [6,9].

Subsequently, it connects to all peers in its view, while it does not

upload any content. Using this exploit in a sufficiently large swarm, a

modified client can substantially increase the frequency with which it

becomes optimistically unchoked, comparing to a compliant client, which

typically connects to 50-100 peers. It can also find more seeders,

which do not employ tit-for-tat.

In particular, we show that our modified free-rider client

is able to download faster than its tit-for-tat compliant counterpart

in 12 out of 15 randomly selected public torrents, for file sizes

between 500MB to 2 GB and swarm sizes of 50 to 1000 peers.

We also experiment with PlanetLab residing swarms that comprise of

300 leechers that are rate-limited at 30KB/sec and one initial seeder

that is rate-limited at 120KB/sec. When compliant clients comprise

90% of the PlanetLab-residing swarm, free-riders download faster than

compliant clients in their swarm and slightly worse than compliant

clients in a swarm with no free-riders.

300 leechers that are rate-limited at 30KB/sec and one initial seeder

that is rate-limited at 120KB/sec. When compliant clients comprise

90% of the PlanetLab-residing swarm, free-riders download faster than

compliant clients in their swarm and slightly worse than compliant

clients in a swarm with no free-riders.

The same weakness of BitTorrent's incentives is experimentally

demonstrated in a recent work by Locher et al. [45],

which was almost concurrent with ours.

Drawing from the above observations, we believe that

the "large view'' exploit has the potential to be widely adopted and

could lead to system-wide performance degradation in BitTorrent swarms.

Dandelion explicitly addresses this issue, because

its provably non-manipulable incentives enable a content distributor to

reliably track the amount of content a client has downloaded from

selfish peers, and charge the client accordingly.

In P2P content distribution protocols that employ pairwise virtual

currency (credit) for incentives, clients maintain

distinct credit balances for each of their peers. In this context,

credit refers to any metric of a peer's cooperativeness.

An eMule [7]

client rewards cooperative peers by reducing the time the peers have to

wait until they are served by

the client. Swift [52]

introduces a pairwise

credit-based trading mechanism for peer-to-peer file sharing

networks and examines the available peer strategies.

In [37],

the authors suggest tackling free-riding

in BitTorrent by employing chunk-level tit-for-tat, which is similar to

pairwise credit incentives. These pairwise credit-based incentive

mechanisms bear

weaknesses that are similar to the ones of rate-based tit-for-tat: a)

they provide no explicit incentives for seeding; and b) they can be

manipulated by free-riders that obtain a ``large view'' of the network,

and initiate short-lived sessions with numerous peers to exploit the

initial offers in pairwise transactions.

Scrivener [29]

combines pairwise credit

balances with a transitive trading mechanism, which is based

on a flavor of distributed reputation. MNet [8] uses a combination

of pairwise balances and tokens

that can be cashed in a central broker. When the debt during pairwise

transactions exceeds a specified threshold, the side with the negative

balance transfers a credit token to the other by contacting a broker.

Since both Scrivener and MNet do not provide

strong fair exchange guarantees of content uploads for credit, they can

be manipulated in a way similar to the ``large view'' exploit.

Keidar et al. [38]

present the design of a P2P multicast protocol, which is formally

proven to enforce cooperation among selfish leechers. To prove

cooperation, the authors assumed that selfish leechers abide by a

predetermined strategy, which specifies how many peers a leecher can

have. However, the recent work on BitTorrent exploitation [50,45],

which has partly motivated our system's design, has demonstrated that

this assumption may be too restrictive.

BAR Gossip [42]

is

suitable for P2P streaming of live content.

Owing to its cryptographic fair exchange mechanism and its verifiable

peer selection, the system is robust to clients that attempt to

free-ride by obtaining a "large view." However, its verifiable peer

selection technique assumes that no client can join the network

after the streaming session starts. Since BAR Gossip is designed for

P2P streaming, it does not

need to provide incentives for seeding. Therefore, it ensures the fair

exchange of content uploads between clients that are interested in the

same live broadcast. On the other hand, Dandelion, which needs to

incent seeding for static content distribution or video on demand,

guarantees fair exchange of content uploads for virtual currency.

3.4

Global Currency as Incentives

It has been widely proposed to use global virtual

currency to provide incentives in P2P content distribution

systems. This is the basis of the incentive mechanism employed by

Dandelion: for each client, the system maintains a credit

balance, which is used to track the bandwidth that the client has

contributed to

the network.

Karma [53]

employs a global credit bank and certified-mail-based [48] fair

exchange of content for reception proofs. It distributes credit

management among multiple nodes. Karma's distributed credit management

improves scalability. However, it does not guarantee the integrity of

the global currency when the majority of the nodes that comprise the

distributed credit bank are malicious or in a highly dynamic network.

In contrast, Dandelion's centrally maintained global currency is

non-manipulable by clients. Thus, it enables a server to offer monetary

rewards based on client

credit balances, providing strong incentives for clients to cooperate.

Horne et al. [35]

proposed an encryption- and erasure-code-based fair exchange scheme for

exchange of content

for proofs of service, but did not provide an experimental evaluation.

Their scheme detects cheating with probabilistic guarantees, whereas

Dandelion deterministically detects and punishes cheaters.

Li et al. [43]

proposed a scheme for incentives in P2P environments that uses fair

exchange of proof of service with chunks of content. The selfish client

encrypts a chunk and sends it to its peer, the peer

responds with a public-key cryptographic proof of service, and the

client completes the transaction

by sending the decryption key. A trusted third party (TTP) is involved

only in

the following cases: a) the selfish client presents the proofs of

service to obtain credit; b) the peer complains for receiving an

invalid chunk; and c) the peer complains

for not receiving the decryption key from the selfish client. However,

unless the server incurs the high cost of frequently renewing the

public key certificates of each client, the credit system is vulnerable

to clients that obtain content from selfish peers, despite those

clients not having sufficient credit. In contrast,

in Dandelion, the TTP mediates every chunk exchange, effectively

preventing a client from obtaining any chunks from selfish peers

without having sufficient credit.

PPay [55]

and WhoPay [54]

are recent micropayments proposals that employ

public key cryptography and are

designed for the P2P content distribution case. These systems

do not guarantee fair exchange of content for payment.

Free-riders may establish short-lived sessions to many peers, download

portions of content from them or obtain payments, and thereby obtain a

substantial amount of content or credit

without paying or uploading.

There are two main classes of solutions for the classic

cryptographic fair exchange problem. One uses simultaneous exchange by

interleaving the sending of the message with the sending of the

receipt [22,25,27,47,30]. These

protocols rely on the assumption of equal computational and bandwidth

capacity, which does not suit

the heterogeneous P2P setting.

The other class relies on the use of a trusted

[18,57,19,56] or

semi-trusted [32,33]

third party

(TTP). The main differences of our scheme are as follows: 1) In

[18,57,19] the TTP cannot

decide

whether a party has misbehaved, but can only complete the transaction

itself if presented with proof that the parties initially intended to

perform the transaction. They assume that the cost of sending the data

is small and can be repeated by the TTP. However in Dandelion,

transmission of data is the most expensive resource and our scheme aims

at the fair exchange of this resource; 2) Unlike [32]

and [33],

our scheme does not rely on untrusted

clients to become semi-TTP; 3) Unlike [56], our scheme

does not use public key cryptography for encryption and for committing

to messages, and only requires one client rather than two to contact

the TTP for each transaction. The technique they use to determine

whether a message originates from a party is similar to the one used

by our complaint mechanism, but our work also addresses the specifics

of determining the validity of the message.

4 Design

In this section we describe the system model and the design

of Dandelion.

4.1

Overview

Our design is based on the premise that a low cost server does not have

sufficient network I/O resources to directly serve content to its

clients under a flash crowd event [14].

It may however, have sufficient CPU,

memory, and memory/disk/network I/O resources to execute many symmetric

cryptography operations, to maintain TCP connection and protocol state

for many clients, to access its client's protocol state,

and to receive and send short messages [34].

However, CPU, memory and I/O are still limited resources. Therefore we

aim at making the design as efficient as

possible.

Under normal workload, A Dandelion server behaves similar to a web/ftp,

streaming

or video on demand server, i.e. it directly serves content to its

clients. When a server is overloaded, it enters a peer-serving mode. Upon

receiving a

request, the server redirects the client to other clients that are

able to serve the requests for content. In the peer-serving mode, a

Dandelion system is reminiscent of BitTorrent, in the sense that a

server splits content into verifiable chunks, and

clients exchange carefully selected chunks. As is the case with

BitTorrent, the content is split into multiple chunks in order to

enable clients to upload as soon as they receive and verify a small

portion of the content. It is also split in order to increase the

entropy of content in the network, facilitating chunk exchanges among

peers. We discuss the tradeoffs in selecting a chunk size in the case

of static content distribution in Section 6.3.1.

However, our protocol uses a different incentive mechanism. The server

maintains a virtual economy and associates each client with its credit

balance. It entices selfish clients to upload to others by explicitly

rewarding them with

virtual credit, while it charges clients that download content from

selfish peers.

4.2

System Model

We describe the system model under which Dandelion is designed to

operate. We assume three types of clients, which we define as follows:

Malicious clients

aim at harming the system.

They misbehave as follows: a) they may attempt to cause other clients

to be blacklisted or charged for chunks they did not obtain; b) they

may attempt to perform a Denial of

Service (DoS) attack against the server or selected clients

(this attack would involve only protocol messages, as we consider

bandwidth or connection flooding attacks outside the scope of this

work);

and c) they may upload invalid chunks aiming at disrupting the

distribution

of content.

Malicious clients

aim at harming the system.

They misbehave as follows: a) they may attempt to cause other clients

to be blacklisted or charged for chunks they did not obtain; b) they

may attempt to perform a Denial of

Service (DoS) attack against the server or selected clients

(this attack would involve only protocol messages, as we consider

bandwidth or connection flooding attacks outside the scope of this

work);

and c) they may upload invalid chunks aiming at disrupting the

distribution

of content.

Selfish (rational)

clients share a utility function. This function describes the cost they

incur when they upload a chunk to their peers and when they pay virtual

currency to download a chunk. It also describes the benefit they gain

when they are rewarded in virtual

currency for correct chunks they upload and when they obtain chunks

they wish to download. A selfish client aims at maximizing its utility.

We assume that the content provider prices a peer's accumulated virtual

currency appropriately: the benefit that a selfish client gains from

acquiring virtual currency for content it uploads exceeds the cost of

utilizing its uplink to upload it.

Selfish (rational)

clients share a utility function. This function describes the cost they

incur when they upload a chunk to their peers and when they pay virtual

currency to download a chunk. It also describes the benefit they gain

when they are rewarded in virtual

currency for correct chunks they upload and when they obtain chunks

they wish to download. A selfish client aims at maximizing its utility.

We assume that the content provider prices a peer's accumulated virtual

currency appropriately: the benefit that a selfish client gains from

acquiring virtual currency for content it uploads exceeds the cost of

utilizing its uplink to upload it.

A selfish client may consider manipulating the credit system in order

to maximize its utility by misbehaving as follows: a) it may not upload

chunks to a peer, and

yet claim credit for them; b) it may upload garbage either on purpose

or due to communication failure, and yet claim credit; c) it may obtain

chunks from selfish clients, and

yet attempt to avoid being charged; d) it may attempt

to download content from selfish peers without having sufficient

credit; and e) it may attempt to boost its credit by colluding with

other clients or by opening multiple Dandelion accounts.

Altruistic clients

upload correct content to their peers regardless of the cost they incur

and they do not expect to be rewarded.

Altruistic clients

upload correct content to their peers regardless of the cost they incur

and they do not expect to be rewarded.

We assume weak security of the IP network, meaning that a malicious or

a selfish client cannot interfere with the routing and forwarding

function, and cannot corrupt messages, but it can eavesdrop messages.

In addition, we assume that communication errors may occur during

message transmissions.

In the rest of this section we describe the design of Dandelion, which

explicitly addresses the challenges posed by selfish and malicious

clients, as well as the challenges posed by the communication channel.

4.3

Credit Management

Dandelion's incentive mechanism creates a virtual economy, which

enables a variety of application scenarios. A client spends

credit

units for each chunk it downloads from a selfish client and a

selfish client earns

credit

units for each chunk it downloads from a selfish client and a

selfish client earns  credit units for

each chunk it uploads to a client. A client can acquire a chunk only if

its credit is greater than

credit units for

each chunk it uploads to a client. A client can acquire a chunk only if

its credit is greater than  . We set

. We set  , so that two colluding clients cannot increase the sum of their

credit, by falsely claiming that they upload to each other.

, so that two colluding clients cannot increase the sum of their

credit, by falsely claiming that they upload to each other.

Our protocol is intended for the case in which users maintain paid

accounts with the content provider, such as in iTunes. A client is

awarded sufficient initial credit to download the complete paid content

from the server. The content provider may redeem a client's credit for

monetary rewards, such as discounts on content prices or service

membership fees, similar to the mileage programs of airline companies.

This incents a client to upload to others and earn credit. A user

cannot boost its credit by presenting multiple IDs (Sybil attack [28]) and

claiming to have uploaded to some of its registered IDs. This is

because each user maintains an authenticated paid account with the

provider. The user essentially purchases its initial credit, and the

net sum in an upload-download transaction between any two IDs is zero.

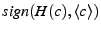

Figure 2:

![\includegraphics[scale=0.80]{figures/TheProtocol.color.eps}](img8.png)

|

4.4

Robust Incentives

This section describes Dandelion's cryptographic fair-exchange-based

protocol.

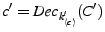

4.4.1 Setting

By  we denote the description of an entity or object,

e.g.

we denote the description of an entity or object,

e.g.  denotes a client

denotes a client  's Dandelion ID.

's Dandelion ID.  is

is  's master

secret key,

's master

secret key,  is a cryptographic hash function

such as SHA-1,

is a cryptographic hash function

such as SHA-1,  is

a Message Authentication Code such as HMAC [20], and

is

a Message Authentication Code such as HMAC [20], and

refers to a time

period. By

refers to a time

period. By  we denote

we denote  at client or server

at client or server  .

.

Due to host mobility and NATs, we do not use Internet address (IP or

IP/source-port) to associate credit and other persistent

protocol information with clients. Instead, each user applies for a

Dandelion account and is associated with a persistent ID. The server  associates each client with its authentication information (client ID

and password), the content (e.g. a file)

associates each client with its authentication information (client ID

and password), the content (e.g. a file)

it currently

downloads or seeds, its credit balance, and the content it can access.

The clients and the server maintain loosely synchronized clocks.

it currently

downloads or seeds, its credit balance, and the content it can access.

The clients and the server maintain loosely synchronized clocks.

Every client  that wishes to join the network

must establish a

transport layer secure session with the server

that wishes to join the network

must establish a

transport layer secure session with the server  , e.g. using TLS

[1]. A client sends

its ID and password over the secure channel. The server

, e.g. using TLS

[1]. A client sends

its ID and password over the secure channel. The server  generates a secret key and symmetric

encryption initialization vector pair, denoted

generates a secret key and symmetric

encryption initialization vector pair, denoted  , which is

shared with

, which is

shared with  .

.  is efficiently

computed as

is efficiently

computed as

.

.

is also sent

over the secure channel.

This key is used both for symmetric encryption and for computing a

MAC. For MAC computation, we use only the secret key portion of

is also sent

over the secure channel.

This key is used both for symmetric encryption and for computing a

MAC. For MAC computation, we use only the secret key portion of  .

The rest of the messages that are exchanged between the server and the

clients are sent over an insecure communication channel (e.g. plain

TCP), which must originate from the same IP as the secure session.

Similarly, all messages between clients are sent over an insecure

communication

channel.

.

The rest of the messages that are exchanged between the server and the

clients are sent over an insecure communication channel (e.g. plain

TCP), which must originate from the same IP as the secure session.

Similarly, all messages between clients are sent over an insecure

communication

channel.

Each client  exchanges only short messages with

the server. To prevent forgery of the message source and replay

attacks, and to ensure the integrity of the message, each message

includes a sequence number and a digital signature. The signature is

computed as the MAC of the message, keyed with the secret key

exchanges only short messages with

the server. To prevent forgery of the message source and replay

attacks, and to ensure the integrity of the message, each message

includes a sequence number and a digital signature. The signature is

computed as the MAC of the message, keyed with the secret key  that

that  shares with the server. Each time a

client or the server receive a message from each other, they check

whether the sequence number succeeds the sequence number of the

previously received message and whether the MAC-generated signature

verifies. If either of the two conditions is not satisfied, the message

is discarded. The sequence number is reset when time period

shares with the server. Each time a

client or the server receive a message from each other, they check

whether the sequence number succeeds the sequence number of the

previously received message and whether the MAC-generated signature

verifies. If either of the two conditions is not satisfied, the message

is discarded. The sequence number is reset when time period  changes.

changes.

The server initiates re-establishment of shared keys  with the clients upon

with the clients upon  change in order to: a) prevent attackers from inferring

change in order to: a) prevent attackers from inferring  by examining the encrypted content and the MACs used by the protocol;

and b) allow the reuse of message sequence numbers once the numbers

reach a high threshold, while preventing attackers from replaying

previously signed and sent messages.

by examining the encrypted content and the MACs used by the protocol;

and b) allow the reuse of message sequence numbers once the numbers

reach a high threshold, while preventing attackers from replaying

previously signed and sent messages.  tolerates some lag in the

tolerates some lag in the  assumed by a client.

assumed by a client.

4.4.2 Protocol Description

To provide robust incentives for cooperation under the model described

in Section 3.2,

Dandelion employs a cryptographic fair-exchange mechanism. Our

fair-exchange protocol involves only efficient

symmetric cryptographic operations. The server acts as the trusted

third party (TTP) mediating the exchanges of content for credit among

its clients. When a client  uploads to a client

uploads to a client  , it sends encrypted content

to client

, it sends encrypted content

to client  . To decrypt,

. To decrypt,  must request the decryption key

from the server. The requests for keys serve as the proof that

must request the decryption key

from the server. The requests for keys serve as the proof that  has uploaded some content to

has uploaded some content to  . Thus, when the server receives a key request, it credits

. Thus, when the server receives a key request, it credits  for uploading content to

for uploading content to  , and charges

, and charges  for downloading

content.

for downloading

content.

When a client  sends invalid content to

sends invalid content to

,

,  can determine that the content is invalid only after receiving

the decryption key and being charged. To address this problem, our

design includes a non-repudiable complaint mechanism. If

can determine that the content is invalid only after receiving

the decryption key and being charged. To address this problem, our

design includes a non-repudiable complaint mechanism. If  intentionally sends garbage to

intentionally sends garbage to  ,

,  cannot deny that it did. In

addition,

cannot deny that it did. In

addition,  is prevented from falsely claiming

that

is prevented from falsely claiming

that  has sent it

garbage.

has sent it

garbage.

The following description omits the sequence number and the signature

in the messages between clients and the server. Figure 2 depicts the message

flow in our protocol.

Step 1:

The protocol starts with the client  sending a request for the content item

sending a request for the content item

to

to  .

.

:

:

![$ {[\rm content~request]}~\descr {F}$](img24.png)

Step 2: If  has access to

has access to  ,

,  chooses a short list of clients

chooses a short list of clients

,

which are currently in the swarm for

,

which are currently in the swarm for

. The policy

with which the server selects the list

. The policy

with which the server selects the list

depends

on the specifics of the content distribution system. Each list entry,

besides the ID of the client, also contains the client's inbound

Internet address. For every client in

depends

on the specifics of the content distribution system. Each list entry,

besides the ID of the client, also contains the client's inbound

Internet address. For every client in

,

,

sends a ticket

sends a ticket

![$ T_{SA}=MAC_{K_{SA}}[\descr {A},\descr {B},\descr {F},\ts ]$](img26.png) to

to

.

.

is a timestamp,

and

is a timestamp,

and  is a client in

is a client in  . The tickets

. The tickets  are only valid

for a certain amount of time

are only valid

for a certain amount of time  (considering clock skew between

(considering clock skew between  and

and  ) and allow

) and allow  to request

chunks of the content

to request

chunks of the content  from client

from client  . When

. When  expires and

expires and  still wishes to download from

still wishes to download from  , it requests a new

, it requests a new  from

from

.

.

To ensure integrity in the case of static content or video on demand,

also sends to

also sends to  the SHA-1 hash

the SHA-1 hash  for all chunks

for all chunks  of

of  . For the case of live streaming content, the content provider augments

the

chunks it generates with his public key signature on their hash and ID,

as

. For the case of live streaming content, the content provider augments

the

chunks it generates with his public key signature on their hash and ID,

as  . Clients append this signature to all the chunks they upload.

. Clients append this signature to all the chunks they upload.

:

:

![$ {[\rm content~response]}~T_{SA},\descr {A}_{\rm list},H(c)_{\rm list},\descr {F},\ts ,\epoch _S$](img35.png)

Step 3: The

client  forwards this request to each

forwards this request to each

.

.

:

:

![$ {[\rm content~request]}~T_{SA},\descr {F},\ts ,\epoch _S$](img38.png)

Step 4: If current-time  and

and  is not in

is not in  's cache,

's cache,  verifies if

verifies if

![$ T_{SA}=MAC_{K_{SA}}[\descr {A},\descr {B},\descr {F},\ts ]$](img26.png) .

The purpose of this check is to mitigate DoS attacks against

.

The purpose of this check is to mitigate DoS attacks against  ; it allows

; it allows  to filter out requests from clients

that are not authorized to retrieve the content or from clients that

became blacklisted. As long as

to filter out requests from clients

that are not authorized to retrieve the content or from clients that

became blacklisted. As long as  remains connected to

remains connected to  , it periodically renews its

, it periodically renews its  tickets by requesting them from

tickets by requesting them from  . If the verification fails,

. If the verification fails,  drops this request. Also, if

drops this request. Also, if  is greater than

is greater than  's current epoch

's current epoch  ,

,  learns that it should renew its key

with

learns that it should renew its key

with  soon. Otherwise,

soon. Otherwise,  caches

caches  and periodically

sends the chunk announcement message described below,

for as long as the timestamp

and periodically

sends the chunk announcement message described below,

for as long as the timestamp  is fresh. This message contains a list of chunks that

is fresh. This message contains a list of chunks that  owns,

owns,  .

.  also does so in separate chunk

announcement messages. The specifics

of which chunks are announced and how frequently depend on the type of

content

distribution. For example, in the case of static content distribution,

the initial chunk announcement would contain the IDs of all the chunks

also does so in separate chunk

announcement messages. The specifics

of which chunks are announced and how frequently depend on the type of

content

distribution. For example, in the case of static content distribution,

the initial chunk announcement would contain the IDs of all the chunks  owns, while the periodically sent announcement would contain the IDs of

newly acquired chunks.

owns, while the periodically sent announcement would contain the IDs of

newly acquired chunks.

:

:

![$ {[\rm chunk~announcement]}~\descr {c}_{\rm list}$](img44.png)

Step 5:  and

and  determine which chunks to download

from each

other according to a chunk selection policy; BitTorrent's

locally-rarest-first is suitable for static content dissemination,

while for streaming content or video on demand

other policies are appropriate [42,23].

determine which chunks to download

from each

other according to a chunk selection policy; BitTorrent's

locally-rarest-first is suitable for static content dissemination,

while for streaming content or video on demand

other policies are appropriate [42,23].

can request chunks from

can request chunks from  , after it requests and

retrieves

, after it requests and

retrieves  from

from  .

.  sends a request for the missing

chunk

sends a request for the missing

chunk  to

to  .

.

:

:

![$ {[\rm chunk~request]}~T_{SA},\descr {F},\descr {c},\ts ,\epoch _S$](img46.png)

Step 6:  's chunk requests are served by

's chunk requests are served by  as long as the timestamp

as long as the timestamp  is fresh, and

is fresh, and  is cached or

is cached or  verifies. If

verifies. If  is altruistic, it sends the chunk

is altruistic, it sends the chunk  to

to  in plaintext and the per-chunk

transaction ends here. Otherwise,

in plaintext and the per-chunk

transaction ends here. Otherwise,  encrypts

encrypts  using a symmetric encryption

algorithm

using a symmetric encryption

algorithm  , as

, as  .

.

is

a random secret key and random symmetric encryption initialization

vector pair. This pair is distinct for each chunk.

is

a random secret key and random symmetric encryption initialization

vector pair. This pair is distinct for each chunk.  encrypts the random key with

encrypts the random key with  , as

, as  .

Next,

.

Next,  hashes the ciphertext

hashes the ciphertext  as

as  . Subsequently, it computes

its commitment to the encrypted chunk and the encrypted key as

. Subsequently, it computes

its commitment to the encrypted chunk and the encrypted key as

![$ T_{AS}=MAC_{K_{SA}}[\descr {A},\descr {B},\descr {F},\descr {c},e,H(C),\ts ]$](img54.png) and

sends the following to

and

sends the following to  .

.

:

:

![$ {[\rm chunk~response]}T_{AS},\descr {F},\descr {c},e,C,\ts ,\epoch _A$](img55.png)

Step 7: To

retrieve  ,

,  needs to request it from the

server. As soon as

needs to request it from the

server. As soon as  receives the encrypted chunk,

receives the encrypted chunk,  computes its own hash over the received ciphertext

computes its own hash over the received ciphertext  and forwards the following to

and forwards the following to  .

.

:

:

![$ {[\rm decryption~key~request]}~\descr {A},\descr {F},\descr {c},e,H(C^\prime), \\

\ts , T_{AS}, \epoch _A$](img57.png)

Step 8: If

timestamp  is fresh enough,

and

is fresh enough,

and  is not too much

off,

is not too much

off,  checks if

checks if

![$ T_{AS}=MAC_{K_{SA}}[\descr {A},\descr {B},\descr {F},\descr {c},e,H(C^\prime),\ts ]$](img58.png) .

The time-stamp

.

The time-stamp  freshness

requirement

forces

freshness

requirement

forces  to expedite paying for decrypting

the encrypted chunks. This fact allows

to expedite paying for decrypting

the encrypted chunks. This fact allows  to promply acquire credit for its service.

The ticket

to promply acquire credit for its service.

The ticket  verification may

fail either because

verification may

fail either because  due to

transmission error in step

due to

transmission error in step  or because

or because  or

or  are

misbehaving. Since

are

misbehaving. Since  is unable to determine which is the case, it

punishes neither

is unable to determine which is the case, it

punishes neither  or

or  and does not update their credit.

It does not send the decryption key to

and does not update their credit.

It does not send the decryption key to  but it still notifies

but it still notifies  of the discrepancy. In this case,

of the discrepancy. In this case,  is expected to disconnect from

is expected to disconnect from  and blacklist it in case

and blacklist it in case  repeatedly

sends invalid chunk response messages. If

repeatedly

sends invalid chunk response messages. If  keeps sending invalid decryption key

requests,

keeps sending invalid decryption key

requests,  penalizes him. If the verification

succeeds,

penalizes him. If the verification

succeeds,  checks whether

checks whether  has sufficient credit to purchase the chunk

has sufficient credit to purchase the chunk  .

It also checks again whether

.

It also checks again whether  has access to the content

has access to the content  . If

. If  is

approved, it charges

is

approved, it charges  and rewards

and rewards  with

with  credit units.

Subsequently,

credit units.

Subsequently,  decrypts

decrypts

,

and sends it to

,

and sends it to  .

.

:

:

![$ {[\rm decryption~key~response]}~\descr {A},\descr {F},

\descr {c},k_{\descr {c}}^\prime$](img64.png)

uses

uses

to decrypt the

chunk as

to decrypt the

chunk as

.

Next, we explain the complaint mechanism.

.

Next, we explain the complaint mechanism.

Step 9: If the

decryption fails or if

(step

(step  ),

),  complains to

complains to  by sending the following message.

by sending the following message.

:

:

![$ {[\rm complaint]}~\descr {A},\descr {F},

\descr {c},T_{AS},e,H(C^\prime),\ts ,\epoch _A$](img69.png)

ignores this message if current-time

ignores this message if current-time

,

where

,

where  .

.  should be greater than the time needed for

should be greater than the time needed for  to receive a decryption key response, decrypt the chunk and send a

complaint to the server. With this condition, a misbehaving client

to receive a decryption key response, decrypt the chunk and send a

complaint to the server. With this condition, a misbehaving client  cannot avoid having complaints ruled against it, even if

cannot avoid having complaints ruled against it, even if  ensures that the time elapsed between the moment

ensures that the time elapsed between the moment  commits

to the encrypted chunk and the moment the encrypted chunk is received

by

commits

to the encrypted chunk and the moment the encrypted chunk is received

by  is slightly less than

is slightly less than  .

.

also ignores the complaint message

if a complaint for the same

also ignores the complaint message

if a complaint for the same  and

and  is in a cache of recent complaints

that

is in a cache of recent complaints

that  maintains for each client

maintains for each client  .

Complaints are evicted from this cache once current-time

.

Complaints are evicted from this cache once current-time

.

If

.

If

![$ T_{AS}{\neq}MAC_{K_{SA}}[\descr {A},\descr {B},\descr {F},\descr {c},

e,H(C^\prime),\ts ]$](img73.png) ,

,

punishes

punishes  . This is because

. This is because  has already notified

has already notified  in step

in step  that

that  is invalid. If

is invalid. If  verifies,

verifies,  caches this complaint, recomputes

caches this complaint, recomputes

as before,

decrypts

as before,

decrypts

once

again, retrieves

once

again, retrieves  from its storage, and encrypts

from its storage, and encrypts  himself using

himself using

,

,

.

If the hash of the ciphertext

.

If the hash of the ciphertext

is equal to the

value

is equal to the

value

that

that

sent to

sent to  ,

,  decides that

decides that  has acted

correctly and

has acted

correctly and  's complaint is unjustified. Subsequently,

's complaint is unjustified. Subsequently,  drops the

complaint request and blacklists

drops the

complaint request and blacklists  . It also notifies

. It also notifies  , which disconnects from

, which disconnects from  and blacklists it. Otherwise, if

and blacklists it. Otherwise, if

,

,

decides that

decides that  was cheated by

was cheated by  , removes

, removes  from its set of active clients,

blacklists

from its set of active clients,

blacklists  , and revokes the corresponding credit charge on

, and revokes the corresponding credit charge on  . Similarly,

. Similarly,  disconnects from

disconnects from  and blacklists it.

and blacklists it.

The server disconnects from a blacklisted client  , marks it as blacklisted in the credit file and denies access to

, marks it as blacklisted in the credit file and denies access to  if it attempts to login. Future complaints concerning a blacklisted

client

if it attempts to login. Future complaints concerning a blacklisted

client  and for which

and for which  verifies, are ruled against

verifies, are ruled against  without further processing.

without further processing.

Since a verdict on a complaint can adversely affect a client, each

client needs to ensure that the commitments it generates are correct

even in the rare case of a disk read error. Therefore, a client always

verifies the read chunk against its hash before it encrypts the chunk

and generates its commitment.

4.5

Other Protocol Issues

A content provider may be more concerned with scalability than it is

with the free-riding problem presented in Section 2. In such case, it can

deploy clients that use tit-for-tat incentives if their peers have

content that

interests them, i.e. the clients would upload plaintext content

to peers that reciprocate with plaintext content. These clients would

fall back to Dandelion's cryptographic fair-exchange mechanism when

their peers do not have content that interests them. For example,

selfish seeders would always upload encrypted content to their peers.

In case a client is unable to timely retrieve a missing chunk

from

its peers, it resorts to requesting the chunk from the server. If the

server is not busy, it replies with the plaintext chunk. If it is

moderately busy, it charges an appropriately large amount of credit

,

sends the chunk and indicates that it is preferable for the client

not to download chunks from the server. If the server is overloaded, it

replies with an error message. Clients always download the content from

the server in chunks, so that the system can seamlessly switch to the

peer-serving mode when

the server becomes busy.

,

sends the chunk and indicates that it is preferable for the client

not to download chunks from the server. If the server is overloaded, it

replies with an error message. Clients always download the content from

the server in chunks, so that the system can seamlessly switch to the

peer-serving mode when

the server becomes busy.

Typically, a content distributor would deploy, in addition to the

server, at least one client that possesses the complete content

(initial seeder). In this

way, the distributor ensures that the complete content is made

available, even if the server is too busy to serve chunks.

5 Security Analysis

This section briefly lists the security properties of

our design. For simplicity of presentation, we omit proofs on why

these properties hold. They can be found

in the Appendix of [51].

Lemma 4.1 If

the server  charges a client

charges a client

credit units for

a chunk

credit units for

a chunk  received from a selfish client

received from a selfish client  ,

,  must have received the correct

must have received the correct  , regardless of the actions taken by

, regardless of the actions taken by  .

.

Lemma 4.2 If

a selfish client  always encrypts chunk

always encrypts chunk  anew when servicing a request and if

anew when servicing a request and if  gets correct

gets correct  from

from

, then

, then  is awarded

is awarded  credit units

from

credit units

from  , and

, and  is charged

is charged  credit units

from

credit units

from  .

.

Lemma 4.3 A

selfish or a malicious client cannot assume another authorized client's

identity and issue messages under

identity and issue messages under  , aiming at obtaining service at the expense of

, aiming at obtaining service at the expense of  , charging

, charging  for service it did not obtain or

causing

for service it did not obtain or

causing  to be blacklisted. In addition, it

cannot issue a valid

to be blacklisted. In addition, it

cannot issue a valid  for

an invalid chunk that it sends to a client

for

an invalid chunk that it sends to a client  and cause

and cause  to produce a complaint message that

would result in a verdict against

to produce a complaint message that

would result in a verdict against  .

.

Lemma 4.4 A

malicious client cannot replay previously sent valid requests to the

server or generate decryption key requests or complaints under  's ID, aiming at

's ID, aiming at  being charged for service it did not obtain or being blacklisted

because of invalid or duplicate complaints.

being charged for service it did not obtain or being blacklisted

because of invalid or duplicate complaints.

Observation 4.5 A

client cannot download chunks

from a selfish peer if it does not have sufficient credit.

Our design choice to involve the server in every transaction, instead

of using the fair exchange technique proposed in [43],

enables the

server to check a client's credit balance before the client retrieves

the decryption

key of a chunk.

Observation 4.6 To

maintain an efficient content distribution pipeline, a client needs to

relay a received chunk to its peers as soon as it receives it. However,

the chunk may be invalid due to communication error or due to peer

misbehavior. The performance of the system would be severely degraded

if

peers wasted bandwidth to relay invalid content. To address this issue,

Dandelion

clients send a decryption key request to the server immediately upon

receiving the encrypted chunk. This design choice enables clients to

promptly retrieve the chunk in its non-encrypted form. Thus, they can

verify the chunk's integrity prior to uploading the chunk to their

peers.

Observation 4.7 A

malicious client cannot DoS attack the server by

sending invalid content to other clients or repeatedly sending invalid

complaints aiming at causing the server to perform the relatively

expensive

complaint validation. This is because it becomes blacklisted

by both the server and its peers the moment the invalid complaint is

ruled against it.

In addition, a malicious client cannot attack the server by sending

valid signed

messages with redundant valid complaints. Our protocol detects

duplicate complaints through

the use of time-stamps and caching of recent complaints.

Observation 4.8 A

malicious client  can always abandon any instance of the protocol.

In such case,

can always abandon any instance of the protocol.

In such case,  does not receive any credit, as

argued in Lemmas 4.1 to 4.3, even though

does not receive any credit, as

argued in Lemmas 4.1 to 4.3, even though  may have consumed

may have consumed  's resources.

This is a denial of service attack against

's resources.

This is a denial of service attack against  . Note that this attack would require that the malicious client

. Note that this attack would require that the malicious client  expends resources proportional to the resources of the victim

expends resources proportional to the resources of the victim  , therefore it is not particularly practical. Nevertheless, we prevent

blacklisted clients or clients that do not maintain paid accounts with

the content provider from launching such attack by having

, therefore it is not particularly practical. Nevertheless, we prevent

blacklisted clients or clients that do not maintain paid accounts with

the content provider from launching such attack by having  issue a short-lived ticket

issue a short-lived ticket  to authorized clients only.

to authorized clients only.  is checked for validity by

is checked for validity by  (steps 4 and 6 in Section 3.4.2). In

addition,

(steps 4 and 6 in Section 3.4.2). In

addition,  may charge an authorized

may charge an authorized  for the issuance of tickets

for the issuance of tickets  effectively deterring

effectively deterring  from maliciously expending both

from maliciously expending both  's and the server's resources.

's and the server's resources.

Owing to properties 4.1, 4.2, 4.3 and 4.5, and given that the content

provider employs appropriate pricing schemes, Dandelion ensures that

selfish (rational) clients increase their utility when they upload

correct chunks and obtain virtual currency, while misbehaving clients

cannot increase their utility. Consequently, Dandelion entices selfish

clients to upload to their peers, resulting in a Nash equilibrium of

cooperation.

6 Implementation

This section describes a prototype C implementation of Dandelion that

is suitable for cooperative content distribution of static content.

It uses the openssl [5] library for

cryptographic operations and standard file I/O system calls to

efficiently manage credit information, which is stored in a simple

file.

6.1

Server Implementation

The server and the credit base are logical modules and could be

distributed over a cluster to improve scalability. For simplicity, our

current implementation combines the content provider and the credit

base

at a single server.

The server implementation is single-threaded and

event-driven. The network operations are asynchronous, and data are

transmitted over TCP.

Each client is assigned an entry in a credit file, which stores

the credit as well as authentication and file access control

information.

Each entry has the same size and the client ID determines the offset of

the entry of each client in the file, thus each entry can be

efficiently accessed for both queries and updates.

The server queries and updates a client's

credit from and to the credit file upon every transaction, Yet, it does

not force commitment of the update to persistent storage.

Instead, it relies on the OS to perform the commitment. If the server

application crashes, the update will still be copied

from the kernel buffer to persistent storage. Still, the OS may crash

or the server may lose power before the updated data have been

committed. However, in practice, a typical Dandelion deployment would

run a stable operating system and use backup power supply.

In addition, the server could mirror the credit base on multiple

machines using high speed IP/Ethernet I/O. In addition, transactions

would not involve very large amounts of money per user. Hence, we

believe it is preferable not to incur the high cost of committing the

credit updates to non-volatile memory after every transaction or after

a few transactions (operations 12 and 13 in Table 1).

6.2

Client Implementation

The client side is also single-threaded and event-driven. A client may

leech or seed multiple files at a time. A client can be decomposed

into two logical modules: a) the connection management

module;

and b) the peer-serving module.

The connection management module performs peering

and uploader discovery. With peering, each client

obtains a random partial

swarm view from the server and strives to connect to  peers,

where

peers,

where  is the number of nodes in the

Dandelion swarm, as

communicated to the node by the server. As a result, the swarm

approximates a random graph with logarithmic out-degree, which

has been shown to have high connectivity[21]. With

uploader discovery, a client attempts to remain connected to a minimum

number of uploading peers. If the number of recent uploaders drops

below a threshold, a client requests from the server a new swarm view

and connects to the peers in the new view.

is the number of nodes in the

Dandelion swarm, as

communicated to the node by the server. As a result, the swarm

approximates a random graph with logarithmic out-degree, which

has been shown to have high connectivity[21]. With

uploader discovery, a client attempts to remain connected to a minimum

number of uploading peers. If the number of recent uploaders drops

below a threshold, a client requests from the server a new swarm view

and connects to the peers in the new view.

The peer-serving module performs content reconciliation

and downloader selection. Content reconciliation

refers to the client functionality for announcing recently received

chunks, requesting missing chunks, requesting decryption keys for

received encrypted chunks, and replying to chunk requests. Our

implementation employs locally-rarest-random [39]

scheduling in requesting missing chunks from clients. To efficiently

utilize their downlink bandwidth using TCP, clients strive to at all

times keep a specified number of outstanding chunk requests [26,40],

which have been sent to a peer and have not been responded to.

With downloader selection, the system aims at making chunks available

to

the network as soon as possible. In the following description,  denotes the number of parallel downloaders. Dandelion's downloader

selection algorithm is similar to the seeder

choking algorithm used in the ``vanilla'' BitTorrent 4.0.2, as

documented in [41].

The algorithm is executed

by each client every 10 seconds. It is also executed when a when a peer

that is selected to be downloader disconnects. The algorithm proceeds

as follows: a) peers that are interested in the client's content are

ranked based on the time they were last selected to be downloaders

(most recent first); b) the client selects as downloaders the

denotes the number of parallel downloaders. Dandelion's downloader

selection algorithm is similar to the seeder

choking algorithm used in the ``vanilla'' BitTorrent 4.0.2, as

documented in [41].

The algorithm is executed

by each client every 10 seconds. It is also executed when a when a peer

that is selected to be downloader disconnects. The algorithm proceeds

as follows: a) peers that are interested in the client's content are

ranked based on the time they were last selected to be downloaders

(most recent first); b) the client selects as downloaders the  top ranked peers; c) in case of a tie, the peer with the highest

download rate from the client is ranked higher; and d) the client

randomly selects an additional downloader from the non-selected nodes

that are interested in the client's content. Step (d) is performed in

expectation of discovering a fast downloader and to jumpstart peers

that recently joined the swarm.

top ranked peers; c) in case of a tie, the peer with the highest

download rate from the client is ranked higher; and d) the client

randomly selects an additional downloader from the non-selected nodes

that are interested in the client's content. Step (d) is performed in

expectation of discovering a fast downloader and to jumpstart peers

that recently joined the swarm.

This downloader selection algorithm aims at reducing the amount of

duplicate data a client needs to upload, before it has uploaded a full

copy of its content to the swarm. Downloader selection improves the

system's performance in the following additional ways. First, it limits

the number of peers a client concurrently uploads to, such that

complete chunks are made available to other peers and relayed by them

at faster rates. Second, given that all clients are connected to

roughly the same number of peers, it also limits the number of peers a

client concurrently downloads from to approximately

. As a result, the rate with which

the client downloads complete chunks increases. Last, by limiting the

number of connections over which clients upload, it avoids the

inefficiency and unfairness that is observed when many TCP flows share

a bottleneck link [46].

. As a result, the rate with which

the client downloads complete chunks increases. Last, by limiting the

number of connections over which clients upload, it avoids the

inefficiency and unfairness that is observed when many TCP flows share

a bottleneck link [46].

The number of peers that download from a client in parallel depends on

the client's upload bandwidth. We have empirically determined that a

good value for this parameter in the PlanetLab environment is 10.

7 Evaluation

The goal of our experimental evaluation is to demonstrate the viability

and to identify the

scalability limits of Dandelion's centralized and non-manipulable

virtual-currency-based incentives.

7.1

Dandelion Profiling

We profile the cost of operations performed by the server aiming at

identifying the performance bottlenecks of our design.

The measurements are performed on a dual

Pentium D 2.8GHZ/1MB CPU with 1GB RAM and 250GB/7200RPM HDD

running Linux 2.6.5-1.358smp.

Table 1:

Timings of per chunk transaction Dandelion operations.

Timings for operations 1-6 are obtained using

getrusage(RUSAGE SELF) over 10000 executions to obtain 1

usec precision. Timings for operations 7-9 are approximated

according to our application layer rate-limiting for

1Mb/s uplink and 1Mb/s downlink. They are provided as reference

for comparison with CPU-centric and credit management

operations. Timings for operations 10-13 are approximated using

gettimeofday() over 10000 executions. Operations 3 and 5 use

8-byte-block Blowfish-CBC with 128-bit key and 128-bit initialization

vector.1, 2 and 4 use HMAC-SHA1 with 128-bit key. Operation 6

uses SHA-1. Operations 10-12 are performed on a credit file with

10000 44-byte entries. For committing to disk in operations 12 and

13, we use fsync() and we disable HDD caching.

Table

1: Timings

of per chunk transaction Dandelion operations. Timings for operations

1-6 are obtained using getrusage(RUSAGE_SELF) over

10000 executions to obtain 1 usec precision.

Timings for operations 7-9 are approximated according to our

application layer rate-limiting for 1Mb/s uplink and 1Mb/s downlink.

They are provided as reference for comparison with CPU-centric and

credit management operations.

Timings for operations 10-13 are approximated using gettimeofday() over

10000 executions. Operations 3 and 5 use 8-byte-block

Blowfish-CBC with 128-bit key and 128-bit initialization vector.1, 2

and 4 use HMAC-SHA1 with 128-bit key. Operation 6 uses

SHA-1. Operations 10-12 are performed on a credit file with

10000 44-byte entries. For committing

to disk in operations 12 and 13, we use fsync() and we disable HDD

caching.

|

|

Table 1

lists the cost of per chunk

Dandelion operations. In a flash crowd event, the main task of a

Dandelion server is to: a) receive the decryption key request

(operation 7);

b) authenticate the request by computing an HMAC (operation 1); c)

verify the ticket by computing an HMAC (operation 2); d) decrypt the

encrypted decryption key (operation 3); e) query and update the credit

of the two clients involved (operations 10 and 11);

f) sign the decryption key response (operation 4); and g) send the

decryption key response (operation 8).

As can be seen in the table, the per chunk cryptographic

operations of the server (operations 1-4) are highly efficient

(total 109 usec), as only symmetric cryptography is employed. The

credit management operations (10 and 11) are also efficient (total 24

usec). On the other hand, the communication costs clearly dominate the

processing costs, indicating that for 1Mb/s uplink and downlink, the

downlink is the bottleneck.

The cost of a complaint is higher because in addition to verifying a

ticket, it involves reading a chunk, encrypting it with the sender

client's key (operation 5), and hashing

the encrypted chunk (operation 6).

Note that the profiling of the server repeats the same operation

multiple times.

It does not consider the parallel processing of I/O and CPU operations.

In addition, it does not include the cost of system calls and the cost

of TCP/IP stack processing. Therefore, we refrain from deriving

conclusions on the throughput of the server. Such conclusions are

derived in the subsequent evaluation.

7.2

Server Performance

A Dandelion server mediates the chunk exchanges between its

clients.

The download throughput of clients in our system is bound by

how fast a server can process their decryption key requests.

Both the server's computational resources and bandwidth may become the

performance

bottleneck.

We deploy a Dandelion server that runs on the same machine

as the one used for Dandelion profiling. We also deploy  200 clients that run on distinct

PlanetLab hosts. The server machine shares a 100Mb/s Ethernet II link.

To mitigate bandwidth variability in the shared link and to emulate a

low cost server with an uplink and downlink that ranges from 1Mb/s to

5Mb/s, we rate-limit the server at the application layer.

200 clients that run on distinct

PlanetLab hosts. The server machine shares a 100Mb/s Ethernet II link.

To mitigate bandwidth variability in the shared link and to emulate a

low cost server with an uplink and downlink that ranges from 1Mb/s to

5Mb/s, we rate-limit the server at the application layer.

In each experiment, the clients send requests for decryption

keys to the server and we measure the aggregate rate with which all

clients receive key responses. The server always queries and updates

the credit base from and to the credit file without forcing commitment

to disk. The specified per client request rate varies from 1 to 14

requests per second. For each request rate, the experiment duration was

10 minutes and the results were averaged over 10 runs. As the request

rate increases and the server's receiver buffers become full, clients

do not send new requests at the specified rate, due to TCP's flow

control. When the request rate increases to the point that the server's

resources become saturated, the

key response rate from the server decreases.

Figure 3(a)

depicts the server's decryption

key throughput for various server bandwidth capacities. As the

bandwidth

increases from 1Mb/s to 3Mb/s, the server's decryption key response

throughput increases. This indicates that for 1Mb/s to 3Mb/s access

links, the bottleneck is the bandwidth. When the bandwidth limit is